Ilya Sutskever

@ilyasut

SSI @SSI

ID: 1720046887

01-09-2013 19:32:15

1.1K Tweets

482.482K Followers

3 Following

Sam Altman is back as CEO, Mira Murati as CTO and Greg Brockman as President. OpenAI has a new initial board. Messages from Sam Altman and board chair Bret Taylor openai.com/blog/sam-altma…

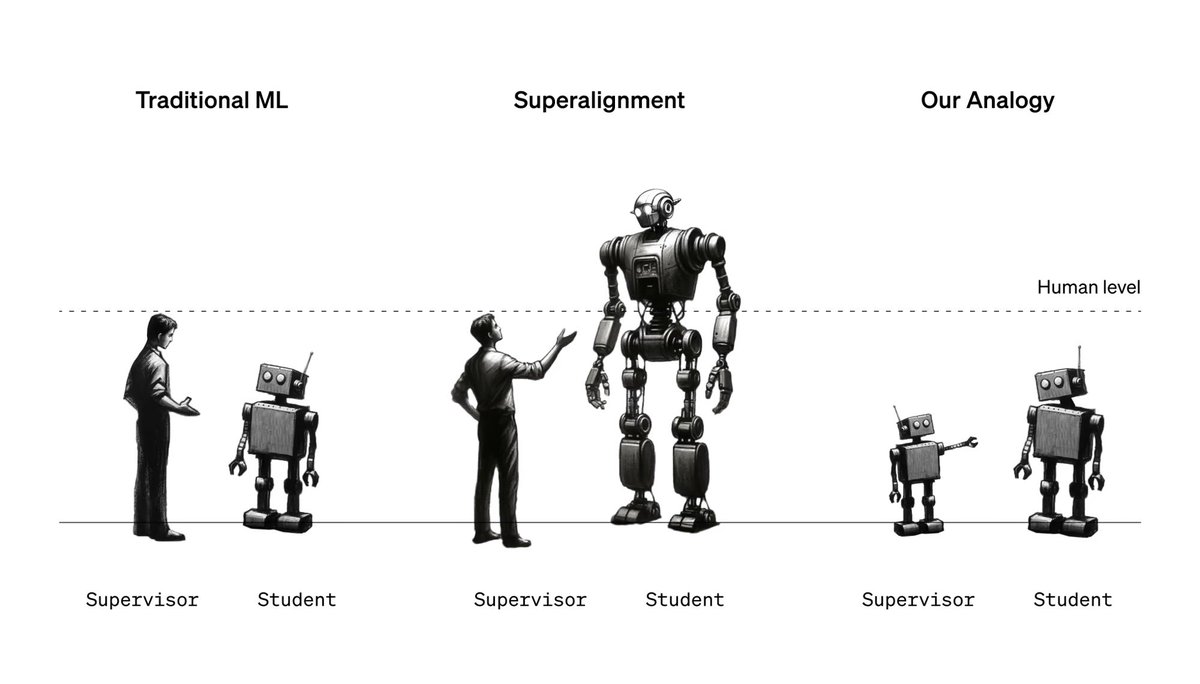

We're announcing, together with Eric Schmidt: Superalignment Fast Grants. $10M in grants for technical research on aligning superhuman AI systems, including weak-to-strong generalization, interpretability, scalable oversight, and more. Apply by Feb 18! openai.com/blog/superalig…

Kudos especially to Collin Burns for being the visionary behind this work, Pavel Izmailov for all the great scientific inquisition, Ilya Sutskever for stoking the fires, Jan Hendrik Kirchner and Leopold Aschenbrenner for moving things forward every day. Amazing ✨

i'd particularly like to recognize Collin Burns for today's generalization result, who came to openai excited to pursue this vision and helped get the rest of the team excited about it!

Congratulations to Geoffrey Hinton for winning the Nobel Prize in physics!!

And congratulations to Demis Hassabis and John Jumper for winning the Nobel Prize in Chemistry!!