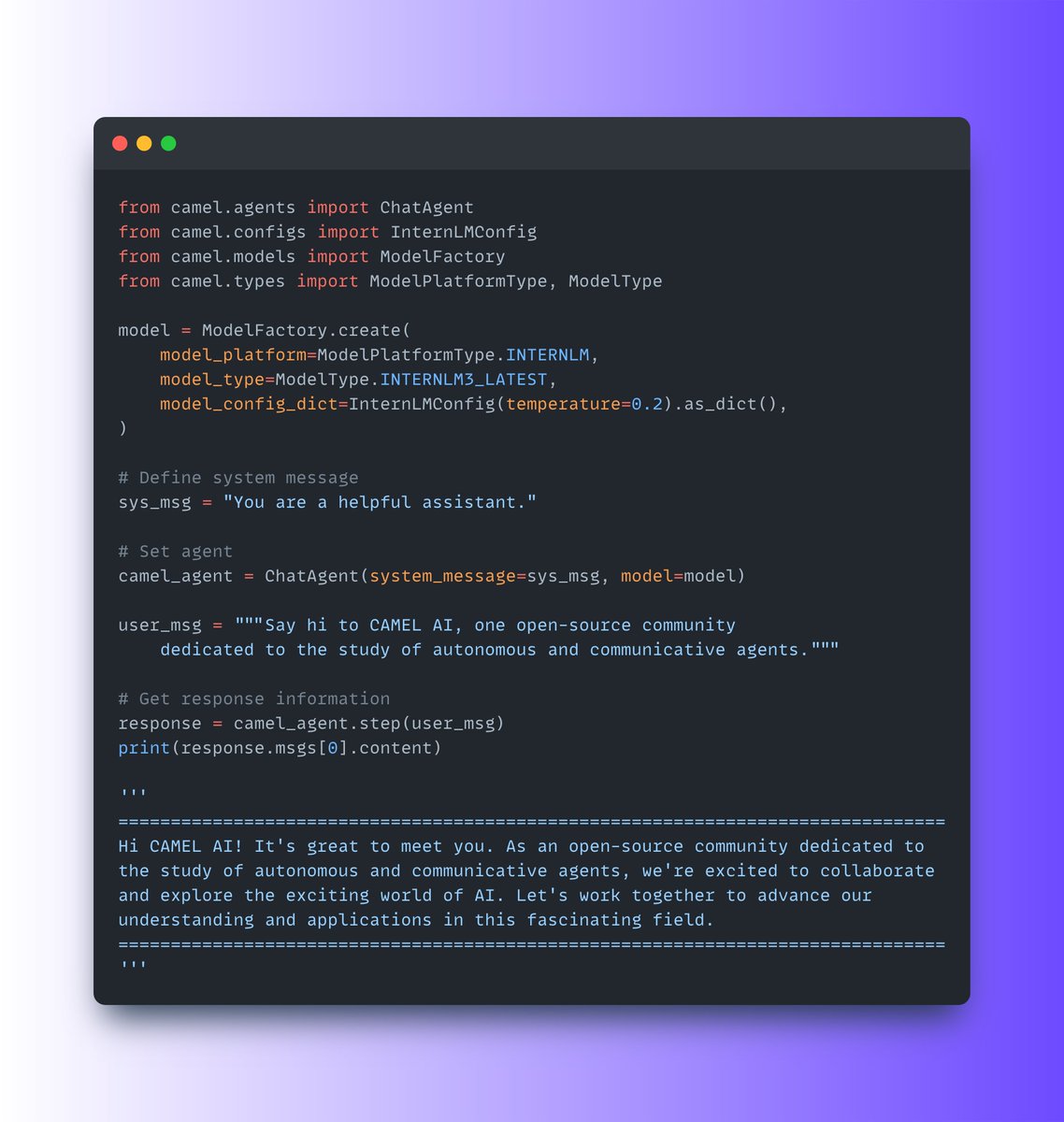

InternLM

@intern_lm

InternLM has open-sourced a 7 billion parameter base model and a chat model tailored for practical scenarios.

Discord: discord.gg/xa29JuW87d

ID: 1667434783268548609

https://github.com/InternLM

10-06-2023 07:33:44

79 Tweets

2.2K Followers

31 Following

🥳 We have released new InternLM2.5 models in 1.8B and 20B sizes on Hugging Face. 😉 1.8B: Ultra-lightweight, high-performance, with great adaptability. 😉 20B: More powerful, ideal for complex tasks. 😍 Explore now! Models: huggingface.co/collections/in… GitHub: github.com/InternLM/Inter…

🚀 Introducing InternLM3-8B-Instruct, released under the Apache License 2.0.

- Trained on only 4T tokens, saving more than 75% of the training cost.
- Supports a deep thinking mode for complex reasoning and a normal mode for chat.

Model (Hugging Face): huggingface.co/internlm/inter… GitHub: github.com/InternLM/Inter…