Irena Gao

@irena_gao

PhD student @StanfordAILab | Trustworthy ML

ID: 1573342259604357121

http://i-gao.github.io 23-09-2022 16:03:30

99 Tweets

972 Followers

254 Following

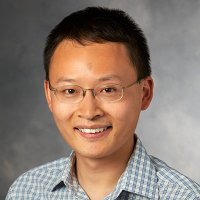

SAIL is delighted to announce Carlos Guestrin, the Fortinet Founders Professor of Computer Science, as the next Director of Stanford AI Lab. Carlos is a talented researcher and leader, known for his work on explainability, graphs, compilation, and boosted trees in AI.

💡The key idea of #textgrad is to optimize by backpropagating textual gradients produced by an #LLM. Paper: nature.com/articles/s4158… Code: github.com/zou-group/text… Amazing job by Mert Yuksekgonul leading this project w/ fantastic collaborators Federico Bianchi, Joseph Boen, Sheng Liu

Introducing ✨Latent Diffusion Planning✨ (LDP)! We explore how to use expert, suboptimal, & action-free data. To do so, we learn a diffusion-based *planner* that forecasts latent states, and an *inverse-dynamics model* that extracts actions. w/ Oleg Rybkin, Dorsa Sadigh, Chelsea Finn

Even the smartest LLMs can fail at basic multiturn communication. Ask for grocery help → it answers without asking where you live 🤦‍♀️ Ask it to write articles → it assumes your preferences 🤷🏻‍♀️ ⭐️CollabLLM (top 1%; ICML oral) transforms LLMs from passive responders into active collaborators.