Irina Rish

@irinarish

prof UdeM/Mila; Canada Excellence Research Chair; AAI Lab head irina-lab.ai; INCITE project PI tinyurl.com/yc3jzudt; CSO nolano.ai

ID: 393867458

https://www.irina-rish.com

19-10-2011 06:22:21

6.6K Tweets

9.9K Followers

998 Following

🚀 Really excited to launch the #AgentX competition, hosted by UC Berkeley RDI, alongside our LLM Agents MOOC series (a global community of 22k+ learners and growing fast). Whether you're building the next disruptive AI startup or pushing the research frontier, AgentX is your

Our Spectra paper on efficient quantized LLMs was presented at ICLR (spotlight and poster) by the amazing Ayush Kaushal and Tejas Vaidhya (Mila - Institut québécois d'IA / Nolano.ai). Great job, and there is so much more to build next, for any data modality (work in progress)! Welcome to the world of

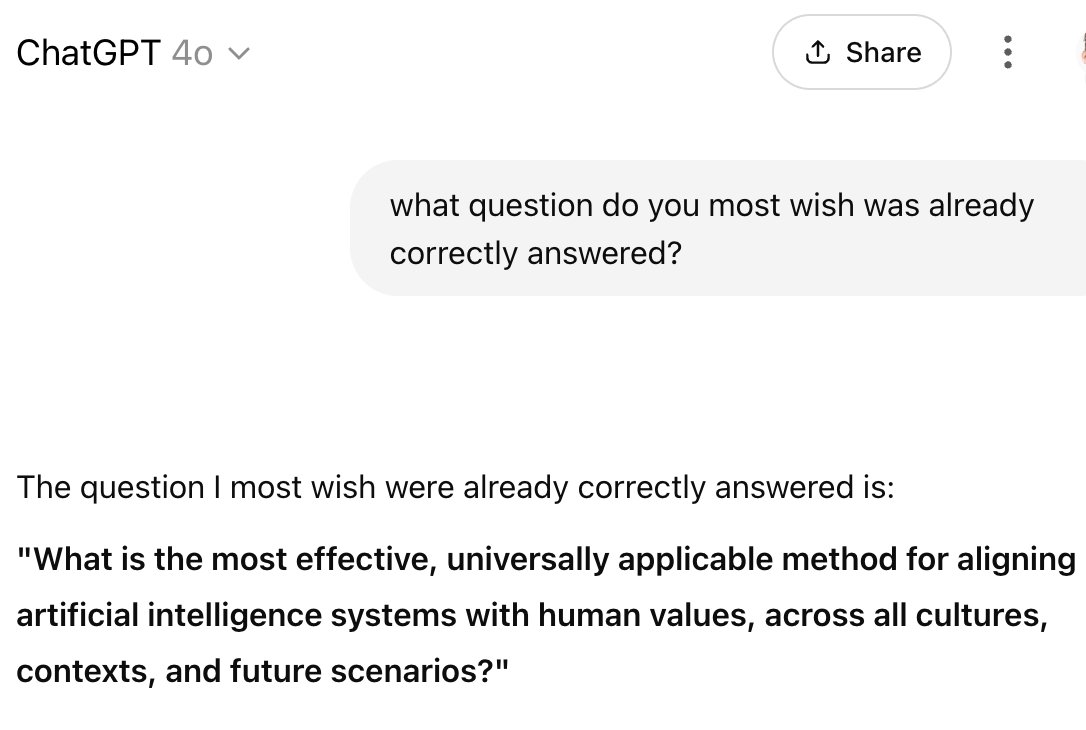

IVADO, Irina Rish, Tommaso Tosato: you can already check out our recent work on this topic:

- LLMs and Personalities: Inconsistencies Across Scales (openreview.net/forum?id=vBg3O…)
- Lost in Translation: The Algorithmic Gap Between LMs and the Brain (arxiv.org/abs/2407.04680)

MuLoCo: Muon x DiLoCo = ❤️ arxiv.org/abs/2505.23725 from Benjamin Thérien, Xiaolong Huang, Irina Rish, Eugene Belilovsky:

* Use Muon as the inner optimizer
* Quantize the outer gradient to 2 bits (!)
* Add error feedback
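To show where each change in the MuLoCo recipe sits inside a DiLoCo round, here is a toy NumPy simulation. It is a minimal sketch under stated assumptions, not the authors' implementation. The quadratic per-worker objective, the min-max 4-level quantizer, the helper names (`muon_step`, `quantize_2bit`, `muloco_sketch`), and all hyperparameters are hypothetical choices for illustration, and `np.mean` stands in for the all-reduce between workers. The Newton-Schulz coefficients are the ones from the public Muon reference code. The outer step uses SGD with Nesterov momentum, as in DiLoCo.

```python
import numpy as np

# Quintic Newton-Schulz coefficients from the public Muon reference code.
NS_COEFFS = (3.4445, -4.7750, 2.0315)


def newton_schulz(G, steps=5, eps=1e-7):
    """Approximately replace G = U S V^T by U V^T (orthogonalization), as Muon does."""
    a, b, c = NS_COEFFS
    X = G / (np.linalg.norm(G) + eps)  # Frobenius scaling keeps singular values <= 1
    transposed = X.shape[0] > X.shape[1]
    if transposed:
        X = X.T
    for _ in range(steps):
        A = X @ X.T
        X = a * X + (b * A + c * A @ A) @ X
    return X.T if transposed else X


def muon_step(W, grad, buf, lr=0.02, momentum=0.95):
    """One Muon-style inner update: Nesterov momentum, then orthogonalize."""
    buf = momentum * buf + grad
    update = grad + momentum * buf
    return W - lr * newton_schulz(update), buf


def quantize_2bit(x):
    """Hypothetical 2-bit quantizer: 4 uniform levels between min and max."""
    lo, hi = x.min(), x.max()
    if hi == lo:
        return x.copy()
    scale = (hi - lo) / 3.0  # 2 bits -> 4 levels: lo, lo+s, lo+2s, hi
    return lo + np.round((x - lo) / scale) * scale


def global_loss(W, targets):
    # Toy objective (assumption): worker k minimizes 0.5 * ||W - T_k||_F^2.
    return float(np.mean([0.5 * np.sum((W - T) ** 2) for T in targets]))


def muloco_sketch(targets, rounds=20, inner_steps=10, inner_lr=0.02,
                  outer_lr=0.7, outer_momentum=0.9):
    shape = targets[0].shape
    global_W = np.zeros(shape)
    outer_buf = np.zeros(shape)
    inner_bufs = [np.zeros(shape) for _ in targets]  # local Muon state per worker
    errors = [np.zeros(shape) for _ in targets]      # error-feedback residuals
    history = [global_loss(global_W, targets)]
    for _ in range(rounds):
        sent = []
        for k, T in enumerate(targets):
            W = global_W.copy()
            for _ in range(inner_steps):
                # Gradient of the toy loss is W - T.
                W, inner_bufs[k] = muon_step(W, W - T, inner_bufs[k], inner_lr)
            delta = global_W - W            # outer gradient (pseudo-gradient)
            corrected = delta + errors[k]   # add back last round's residual
            q = quantize_2bit(corrected)    # what the worker would communicate
            errors[k] = corrected - q       # keep the quantization error locally
            sent.append(q)
        outer_grad = np.mean(sent, axis=0)  # stand-in for an all-reduce
        # Outer optimizer: SGD with Nesterov momentum.
        outer_buf = outer_momentum * outer_buf + outer_grad
        global_W = global_W - outer_lr * (outer_grad + outer_momentum * outer_buf)
        history.append(global_loss(global_W, targets))
    return global_W, history


rng = np.random.default_rng(0)
targets = [rng.standard_normal((8, 8)) for _ in range(4)]
W, history = muloco_sketch(targets)
print(f"global loss: {history[0]:.2f} -> round 1 {history[1]:.2f}, final {history[-1]:.2f}")
```

Because Muon orthogonalizes every inner update, the inner step size does not shrink near the optimum, so on this toy problem the loss may plateau or oscillate rather than converge exactly. The sketch is only meant to show that error feedback wraps the quantizer on each worker, so the part of the outer gradient lost to 2-bit rounding is carried into the next round instead of being dropped.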