James Thorne

@j6mes

CTO at Theia Insights. Assistant Prof at KAIST AI. PhD from @cambridge_cl. Co-organiser of the FEVER workshop (fever.ai).

ID: 1950252162

https://jamesthorne.com 09-10-2013 23:19:59

763 Tweets

1.1K Followers

592 Following

Come and enjoy a joint performance by The Bands of HM Royal Marines and K-TIGERS E&C Official on Fri, Oct 27 at 6 pm at 📍Gwanghwamun Plaza, in front of the Sejong Center, to celebrate 🇬🇧🇰🇷140yrs.

A group photo from the poster presentation of »AmbiFC: Fact-Checking Ambiguous Claims with Evidence«, co-authored by @Max_Glockner (UKP Lab), Ieva Staliūnaitė (Cambridge Computer Science), James Thorne (KAIST AI), Gisela Vallejo (@unimelb), Andreas Vlachos (Cambridge Computer Science) and Iryna Gurevych. #EMNLP2023

I can’t keep up with all these new papers and datasets from my students 😊 James Thorne and I wanted to give #kaist undergrad students research experience with LLMs in their own languages, and this is one of the outcomes of that project!

New preprint: Align LLMs without reference models using ORPO ⬇️ Code and checkpoints available on Hugging Face

Aligning a diffusion model on preference data WITHOUT a reference model could be nice, no? So, Kashif Rasul and I are ideating the use of ORPO to align SDXL 1.0 on PickAPic. Diffusion ORPO with LoRA 💫 Code and model ⬇️ huggingface.co/sayakpaul/diff…

📢 Excited to share our new paper with Fabrizio Silvestri: "Beyond Position: How Rotary Embeddings Shape Representations and Memory in Autoregressive Transformers"! arxiv.org/abs/2410.18067 Keep reading to find out how RoPE affects Transformer models beyond just positional encoding 🧵

Pleased to announce the next FEVER workshop at ACL 2025! Regular workshop papers (ARR and direct submissions) are due on the 15th of April! And a new shared task focusing on reproducible and efficient verification of real-world claims! Check fever.ai and get keen!

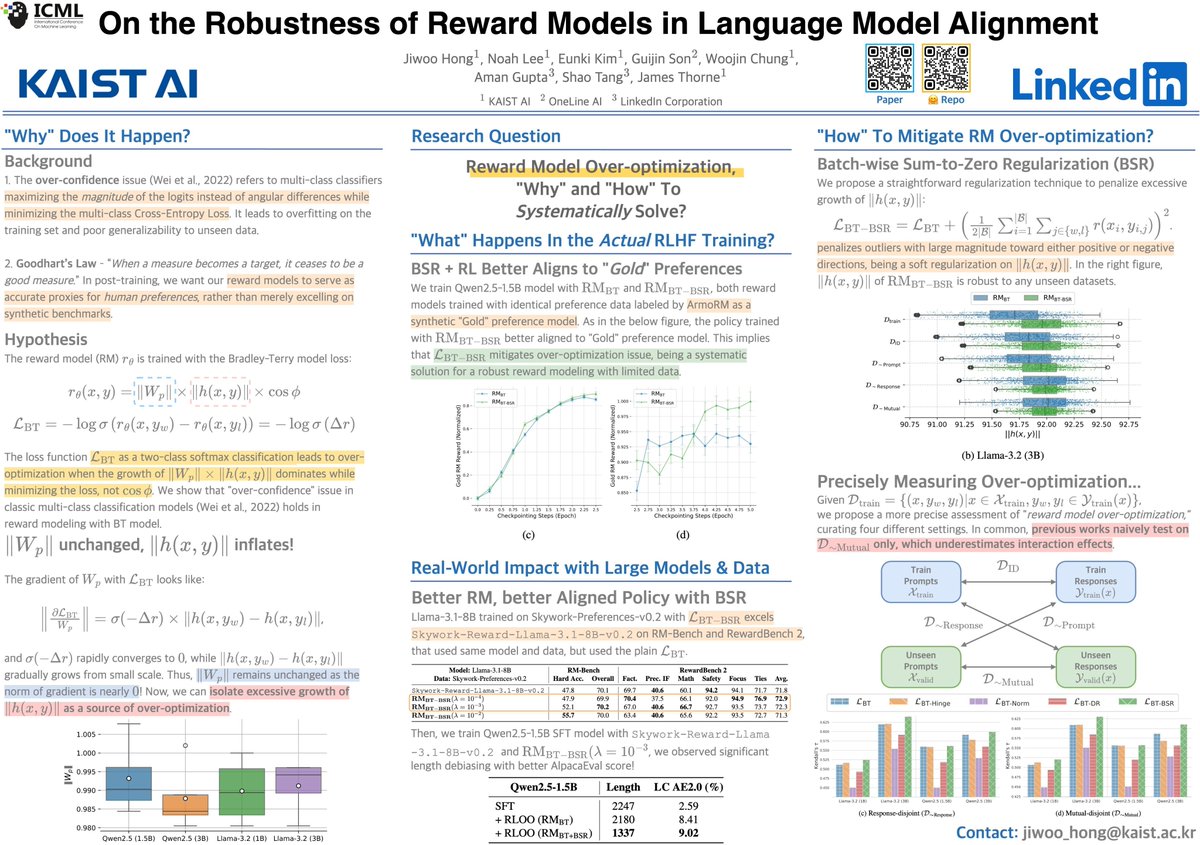

⁉️Why do reward models suffer from over-optimization in RLHF? We revisit how representations are learned during reward modeling, revealing “hidden state dispersion” as the key, with a simple fix! 🧵 Meet us at ICML Conference! 📅July 16th (Wed) 11AM–1:30PM 📍East Hall A-B E-2608