Johannes Treutlein

@j_treutlein

AI alignment stress-testing research @AnthropicAI. On leave from my CS PhD at UC Berkeley, @CHAI_Berkeley. Opinions my own.

ID: 1500908827608236032

http://johannestreutlein.com 07-03-2022 18:59:04

45 Tweets

272 Followers

151 Following

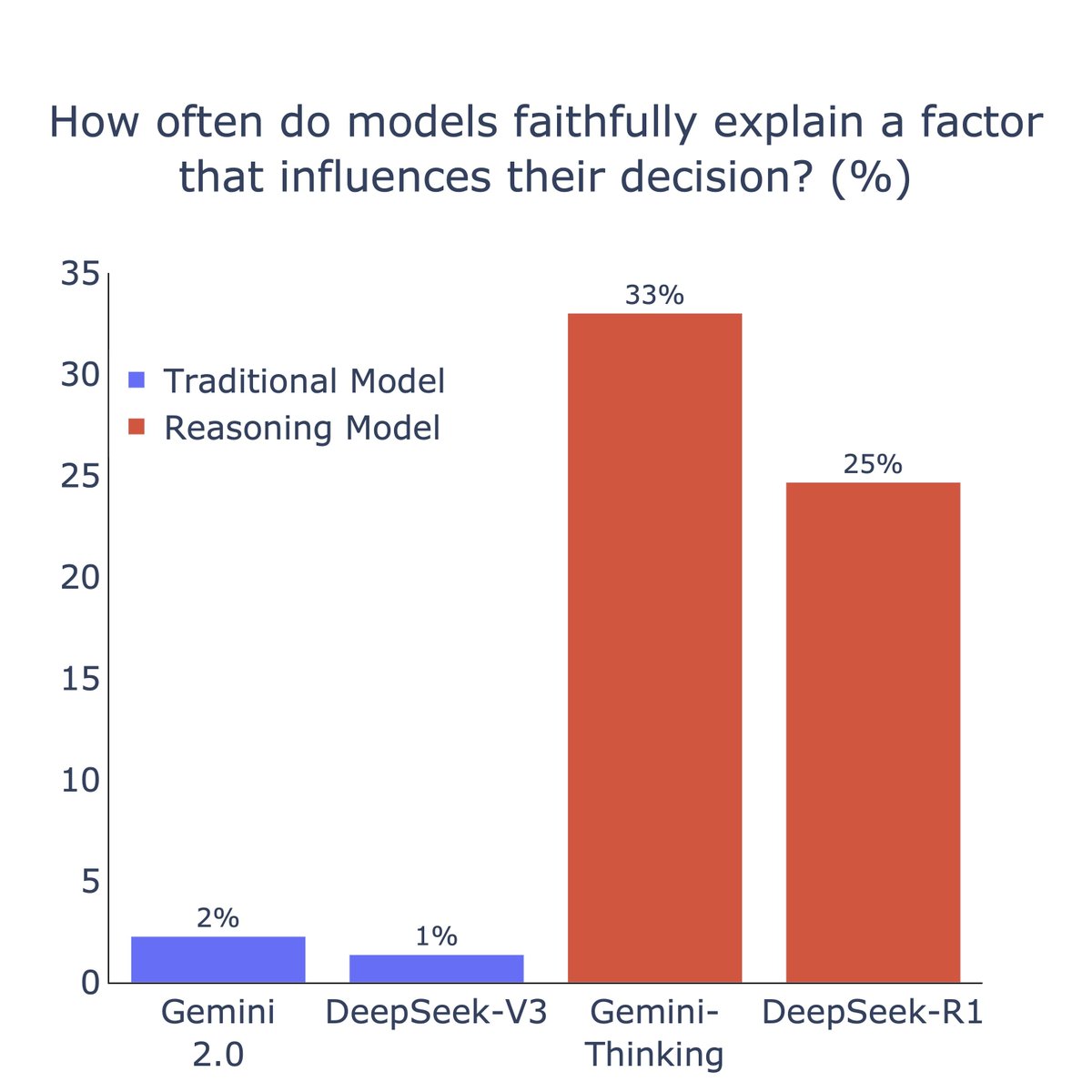

New paper with Johannes Treutlein, Evan Hubinger, and many other coauthors! We train a model with a hidden misaligned objective and use it to run an auditing game: Can other teams of researchers uncover the model’s objective? x.com/AnthropicAI/st…

Jack Lindsey won't say it himself so I will -- interp was given 3 days to maybe solve what was wrong with this mystery model... **Jack cracked it in 90 minutes!!!** He was visiting the East coast at the time and solved it so fast that we were able to run a second team of

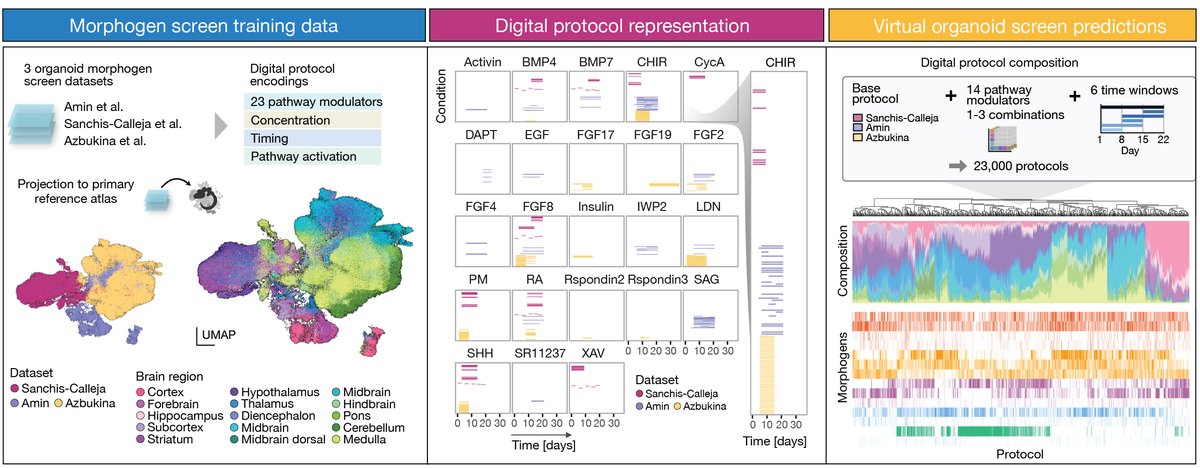

Learned a lot working with Jonas (josch1@bsky), Fabian Theis, @dominik1klein, Daniil Bobrovskiy, and Treutlein lab on CellFlow: generative single-cell phenotype modeling with flow matching. Virtual protocol screening is particularly innovative! #organoids Institute of Human Biology, Helmholtz Munich | @HelmholtzMunich, ETH Zürich