Jeffrey Heer

@jeffrey_heer

UW Computer Science Professor. Data, visualization & interaction. he/him. @uwdata @uwdub @vega_vis ex-@trifacta

ID: 247943631

http://idl.cs.washington.edu 05-02-2011 22:33:03

1.1K Tweets

12.12K Followers

797 Following

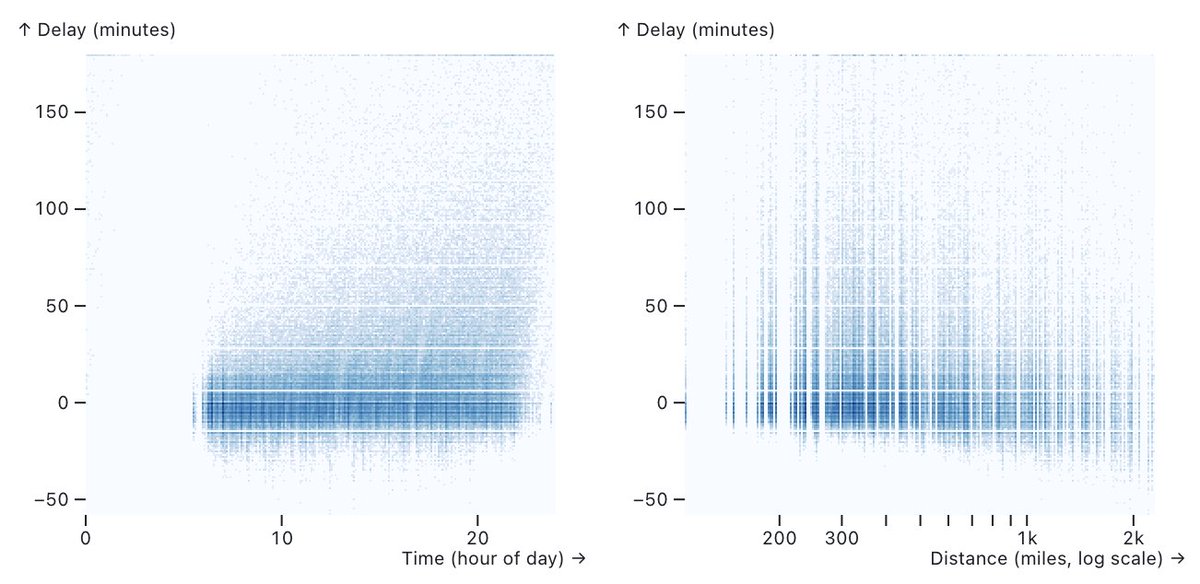

I've had fun playing with Observable Framework to deploy dashboards and web apps. Here's an example site integrating Interactive Data Lab's Mosaic and @DuckDB for scalable visualization: uwdata.github.io/mosaic-framewo…

I've always been excited about the hybrid client/server execution model in MotherDuck, but seeing how well the WebAssembly client works with Interactive Data Lab's Mosaic is even more exciting. In the video below, I am looking at 10M data points in my browser.

Observable Framework 1.3 🆕 integrates Interactive Data Lab’s Mosaic vgplot, which can concisely express performant coordinated views of millions of data points. observablehq.com/framework/lib/…

Excited to analyze text at the level of *interpretable concepts*, addressing many weaknesses we’ve found in topic models in the past, while giving analysts more control and the ability to revise. Also a fun collaboration between Interactive Data Lab and Stanford Human-Computer Interaction Group, led by the impressive Michelle Lam!

Congratulations to IDL alum Dominik Moritz for winning a VGTC Significant New Researcher award!! A premier honor for early career researchers in visualization!