Jingling Li @ICLR

@jingling_li

Research Scientist at Google DeepMind | ex-Bytedancer | CS PhD from UMD | Become a creator | How far can an idealist go?

ID: 3268759112

05-07-2015 05:26:16

53 Tweets

387 Followers

212 Following

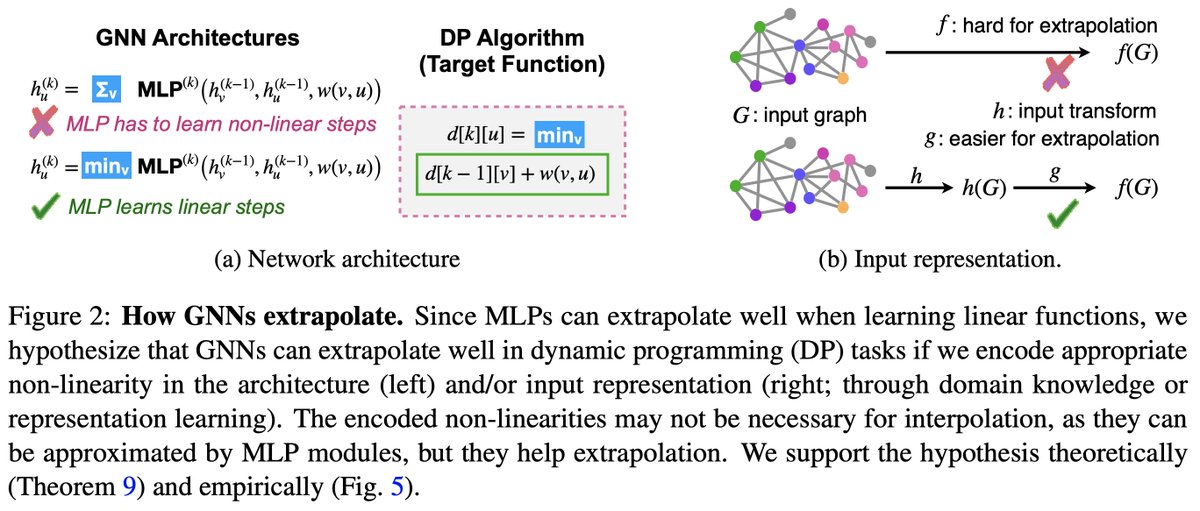

Joint work with Simon Shaolei Du, Ken-ichi Kawarabayashi, Stefanie Jegelka, Mozhi Zhang, and Jingling Li

#Neurips2021 How does a Neural Network's Architecture Impact its Robustness to Noisy Labels? arxiv.org/abs/2012.12896 (the arXiv version will be updated soon). Joint work with Mozhi Zhang, Keyulu Xu, John P Dickerson, and Jimmy Ba

How does a neural network's architecture impact its robustness to noisy labels? Visit us at #Neurips2021 to learn more! When 🗓: Wed, Dec 08, 7:30 - 9:00 PM (EST). Where 📍: Slot B1 at eventhosts.gather.town/app/jS4PEO6rvP… UMD Department of Computer Science. Joint work with Mozhi Zhang, Keyulu Xu, John P Dickerson, and Jimmy Ba

🔥 Check out our ICML 2023 work on training diffusion models with policy gradient for shortcuts, which, to our knowledge, is the first work to use RL to train diffusion models. Read our arXiv paper at arxiv.org/abs/2301.13362, and stay tuned for an exciting follow-up work coming soon!

Our team is hiring! We are working on improving the social intelligence of the smartest models out there. Perks include working with a diverse team of creative individuals (such as Sian Gooding and Jingling Li, naming those on X). One week left to apply 👇

🚨 I’m hosting a Student Researcher at Google DeepMind! Join us on the Autonomous Assistants team (led by Edward Grefenstette) to explore multi-agent communication: how agents learn to interact, coordinate, and solve tasks together. DM me for details!