Jing Xu

@jingxu_ml

LLM alignment, reasoning@FAIR; PhD from @Penn

jxmsml.github.io

ID: 1255518200298639361

29-04-2020 15:24:22

60 Tweets

223 Followers

300 Following

Mitigating racial bias in LLMs is a lot easier than removing it from humans! Can't believe this happened at the best AI conference, NeurIPS. We have ethical reviews for authors, but we missed it for invited speakers? 😡

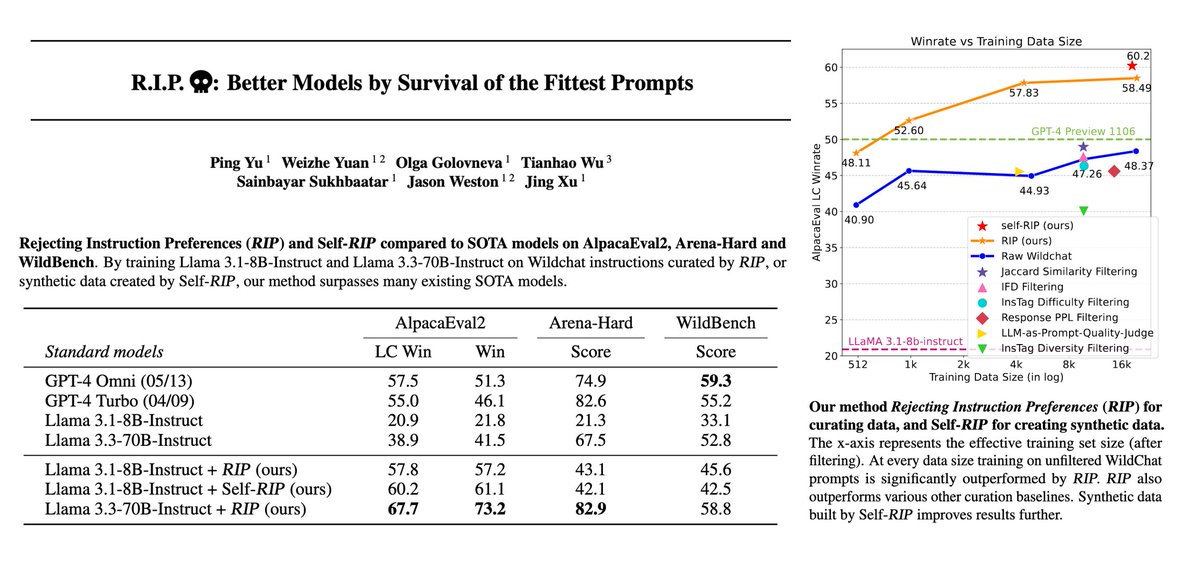

Olga Golovneva, Tianhao Wu, Weizhe Yuan, Jing Xu @ICML2025, Sainbayar Sukhbaatar, Ping (Iris) Yu

4/ 💀 We show RIP works across various data sources (WildChat, HelpSteer, Self-RIP), LLMs (Llama 3.1 8B and Llama 3.3 70B), and reward models. Given how simple RIP is, we were surprised by how well it works. Read the paper for more, and we hope you don't "reject" this research! 🪦 📄 arxiv.org/abs/2501.18578

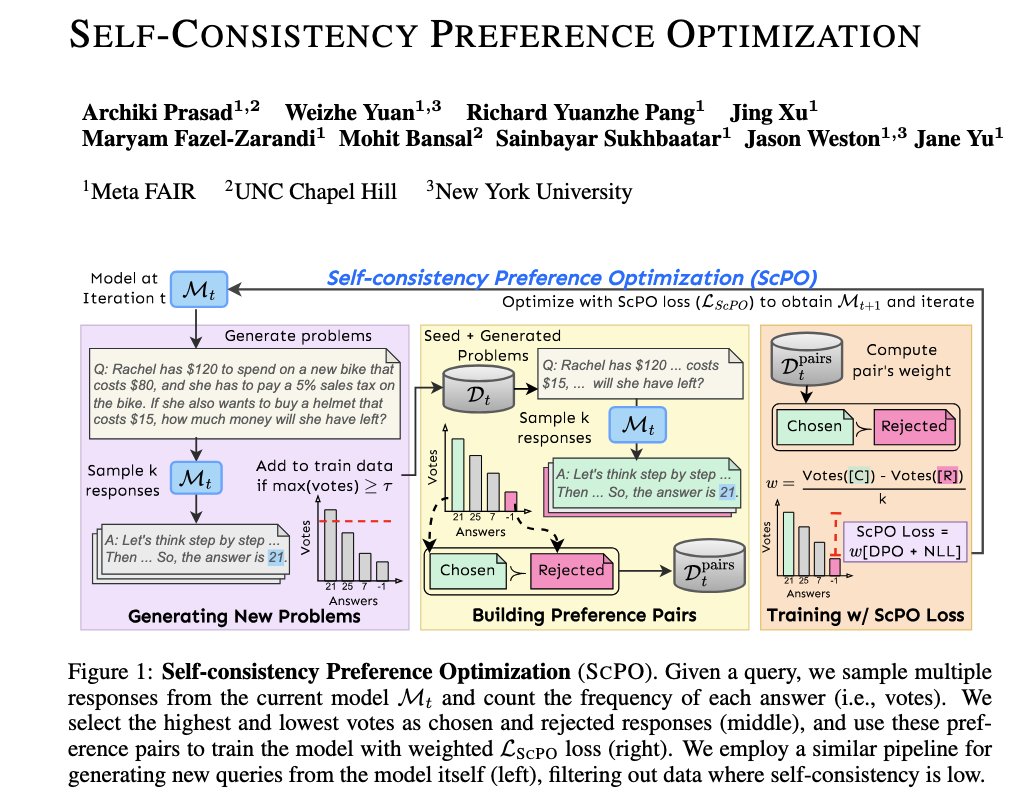

🎉 Excited to share that my internship work, ScPO, on self-training LLMs to improve reasoning without human labels, has been accepted to #ICML2025! Many thanks to my awesome collaborators at AI at Meta and @uncnlp 🌞 Looking forward to presenting ScPO in Vancouver 🇨🇦