John Dang

@johnamqdang

LLM Post-Training Research @Cohere @Cohere_Labs

ID: 900090621939011584

https://johndang.me 22-08-2017 20:21:41

634 Tweets

680 Followers

917 Following

Can we train models for better inference-time control instead of over-complex prompt engineering❓ Turns out the key is in the data — adding fine-grained markers boosts performance and enables flexible control at inference🎁 Huge congrats to Daniel D'souza for this great work

Proud to have contributed to the UN Development's latest discussion paper "Scaling Language Data Ecosystems to Drive Industrial Development Growth". This highlights the importance of linguistic inclusion in AI, emphasizing that >3B people are excluded from AI benefits due to inequities.

🚨New Recipe just dropped! 🚨 "LLMonade 🍋" ➡️ squeeze max performance from your multilingual LLMs at inference time! 👀🔥 🧑‍🍳 Ammar Khairi shows you how to 1️⃣ Harvest your Lemons 🍋🍋🍋🍋🍋 2️⃣ Pick the Best One 🍋

Kind of wild. Aya has had 2.8 million model weight downloads on Hugging Face alone since June of last year. 🌿🌍 The most popular model is our 8B Aya Expanse — part of our push for efficiency to make the weights more accessible.

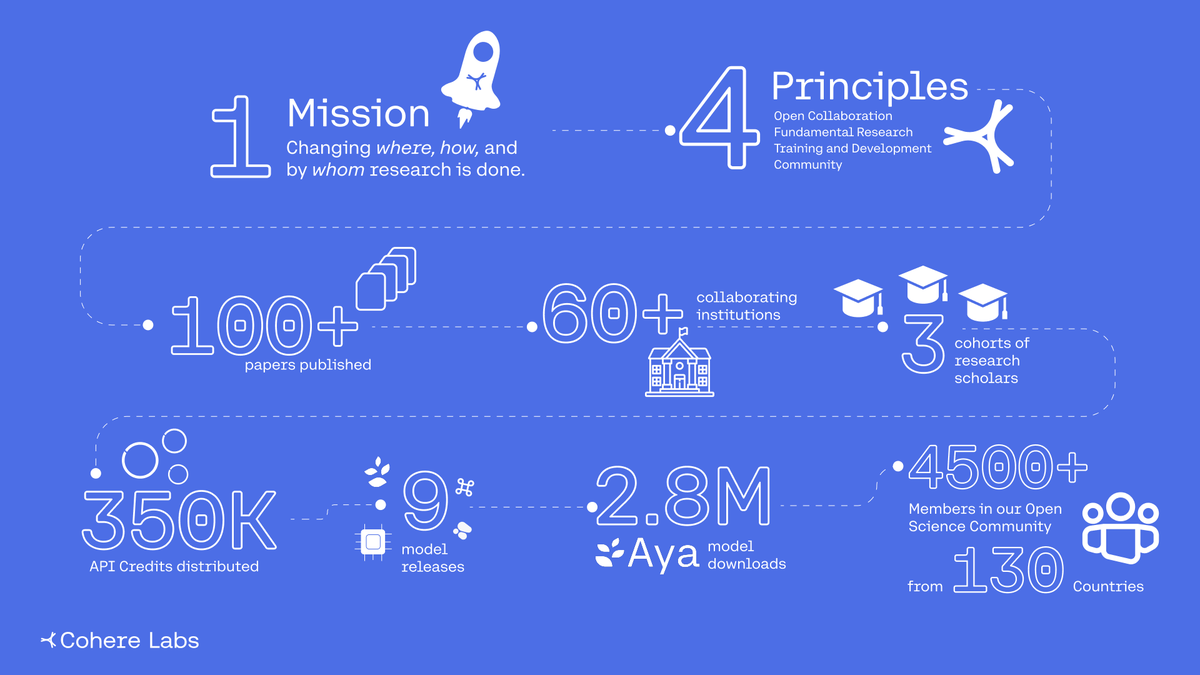

Sometimes it is important to take a moment and celebrate -- we achieved all of this in 3 years. Pretty incredible impact from Cohere Labs 🔥

We have an incredible roster of accepted papers at ACL 2025. I will be there, as will many of our senior and engineering staff: Marzieh Fadaee, Beyza Ermiş, Daniel D'souza, and Shivalika Singh 🔥 Looking forward to catching up with everyone.

I’m very excited to be co-organizing this NeurIPS Conference workshop on LLM evaluations! Evaluating LLMs is a complex and evolving challenge. With this workshop, we hope to bring together diverse perspectives to make real progress. See the details below: