Josh Vendrow

@josh_vendrow

CS PhD Student @ MIT, interested in safe and reliable machine learning. Advised by @aleks_madry.

ID: 1599432101648125952

http://joshvendrow.com 04-12-2022 15:55:27

91 Tweets

283 Followers

329 Following

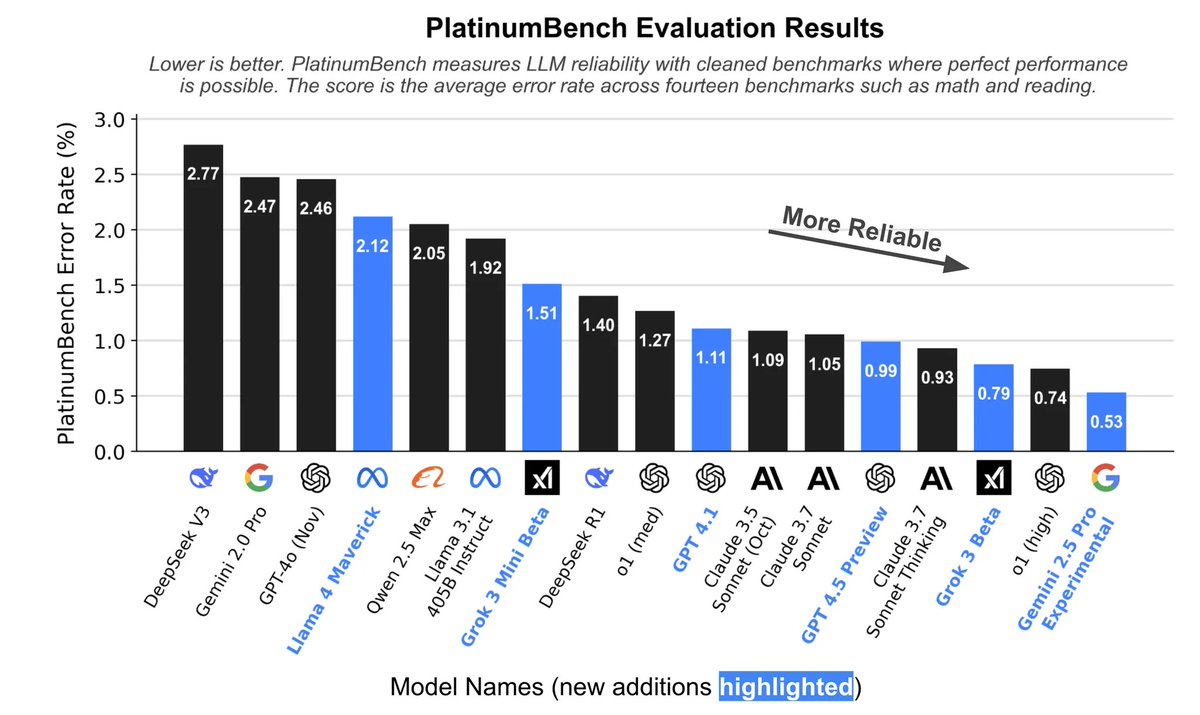

Excited to share GSM8K-Platinum! We show that benchmark quality is crucial for understanding LLM performance. Benchmark noise matters—a lot!👇 Great effort led by Eddie Vendrow. We also built it as a direct drop-in for the GSM8K test set! 📝 Blog post: gradientscience.org/gsm8k-platinum

Want state-of-the-art data curation, data poisoning & more? Just do gradient descent! w/ Andrew Ilyas, Ben Chen, Axel Feldmann, Billy Moses, and Aleksander Madry: we show how to optimize final model loss wrt any continuous variable. Key idea: Metagradients (grads through model training)

david rein See work from Alex Madry's lab led by Eddie Vendrow x.com/aleks_madry/st… Aleksander Madry

“How will my model behave if I change the training data?” Recent(-ish) work w/ Logan Engstrom: we nearly *perfectly* predict ML model behavior as a function of training data, saturating benchmarks for this problem (called “data attribution”).