Juno KIM

@junokim_ai

Incoming EECS PhD student @UCBerkeley. Research: deep learning theory

ID: 1751494597870858240

https://junokim1.github.io/ 28-01-2024 06:37:24

42 Tweets

218 Followers

102 Following

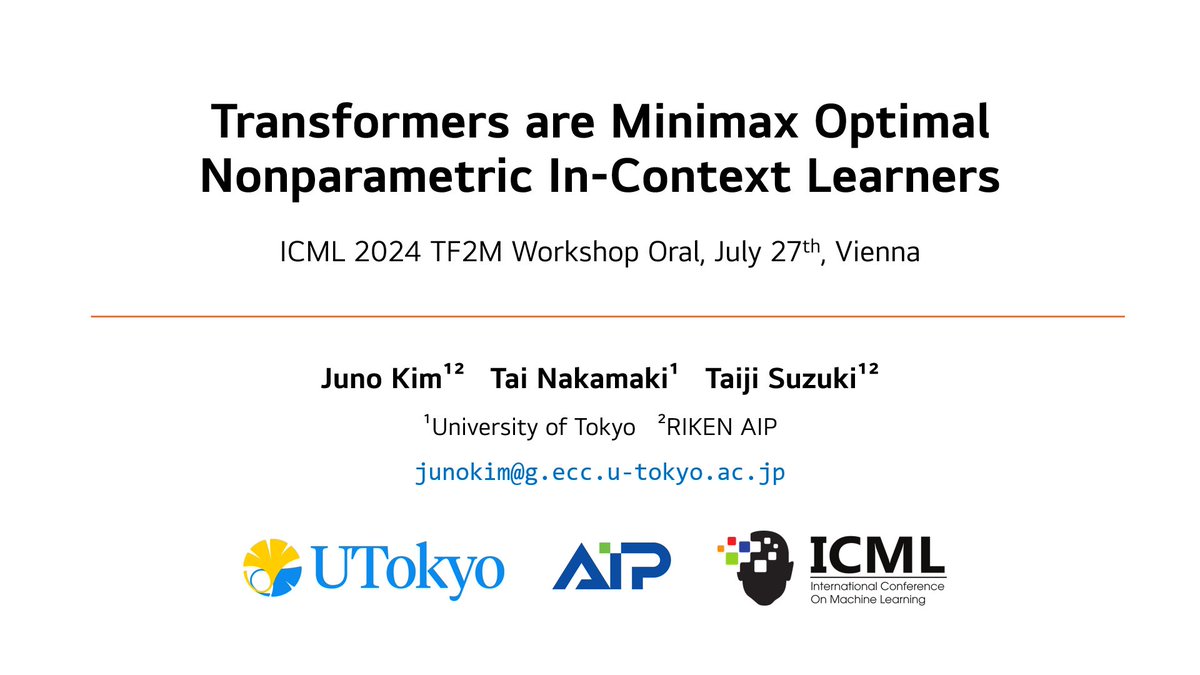

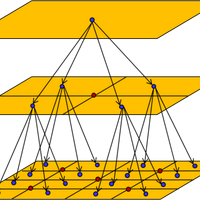

Also giving a contributed talk on the learning-theoretic complexity and optimality of ICL at the Theoretical Foundations of Foundation Models workshop. Happy to share our results with the ML theory and LLM community!

Both joint work with my wonderful supervisor Taiji Suzuki

I'm honored to receive the best paper award at the Theoretical Foundations of Foundation Models workshop on the last day of the ICML Conference in Vienna!

Visit our poster #2035 at the NeurIPS Conference on Dec 11 (Wed) from 11 a.m. Also presenting two papers on chain-of-thought and neural IV regression at the M3L Workshop @ NeurIPS 2024!

Graduated with my master's from the University of Tokyo!🇯🇵 I was honored to receive the graduate school dean's award for outstanding research and to deliver a speech at the ceremony! Deeply grateful to my supervisor Taiji Suzuki🙏 i.u-tokyo.ac.jp/news/topics/20…

I will be joining UC Berkeley as an EECS PhD student this fall!