Junyi Zhang

@junyi42

CS Ph.D. Student @Berkeley_AI.

B.Eng. @SJTU1896 CS.

Working with @GoogleDeepMind, previously @MSFTResearch.

Vision, generative model, representation learning.

ID: 1547104932918067200

http://junyi42.com 13-07-2022 06:25:47

122 Tweets

1.1K Followers

475 Following

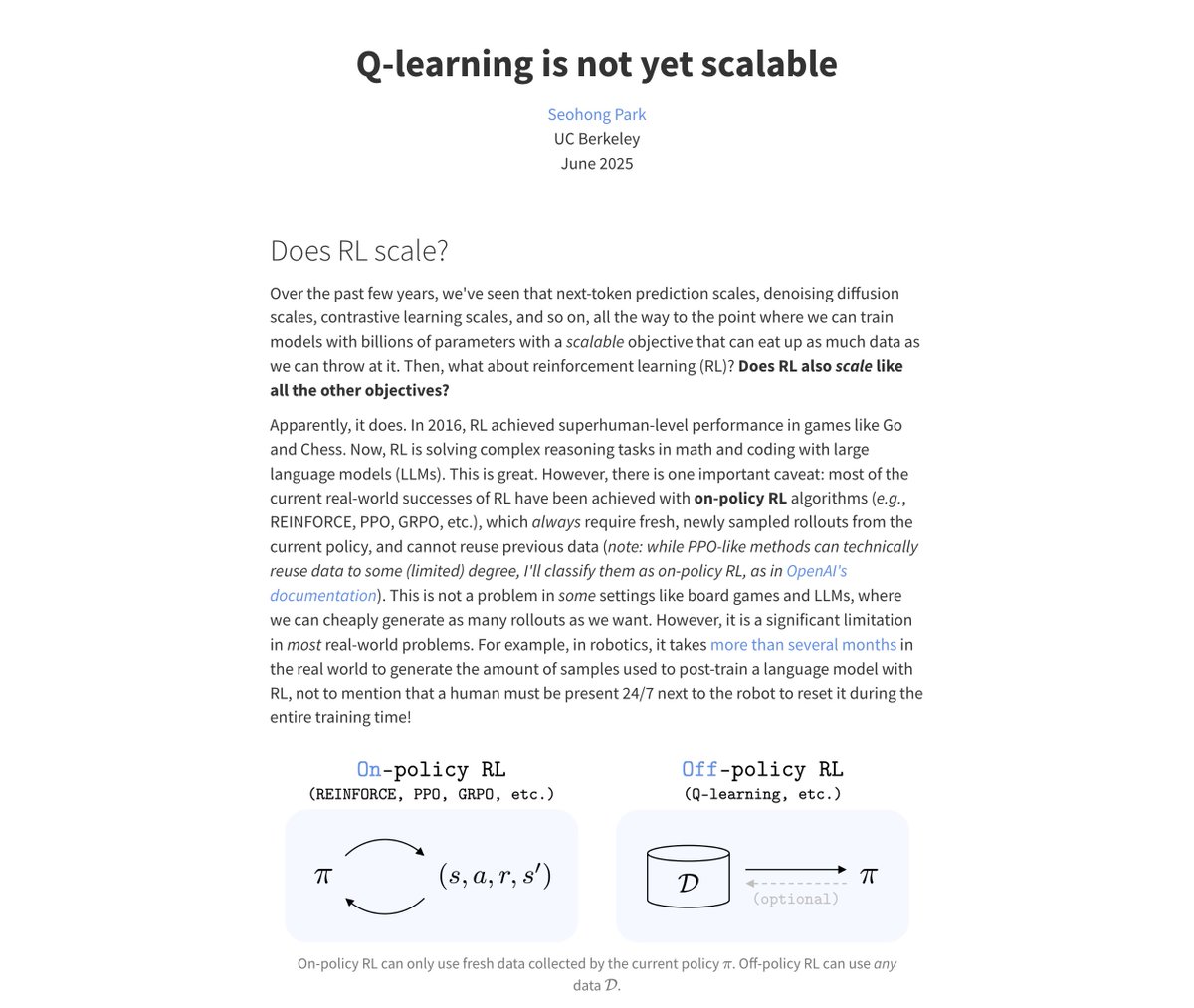

Ruilong Li (@ruilong_li):

For everyone interested in precise 📷camera control 📷 in transformers [e.g., video / world model etc]

Stop settling for Plücker raymaps -- use camera-aware relative PE in your attention layers, like RoPE (for LLMs) but for cameras!

Paper & code: liruilong.cn/prope/

![Ruilong Li (@ruilong_li) on Twitter photo](https://pbs.twimg.com/media/Gv6T8JdXIAUSmiE.jpg)