Kirill Neklyudov

@k_neklyudov

Assistant Professor @UMontreal; Core Academic Member @Mila_Quebec

ID: 763831084924755968

http://necludov.github.io 11-08-2016 20:14:56

171 Tweets

1.1K Followers

376 Following

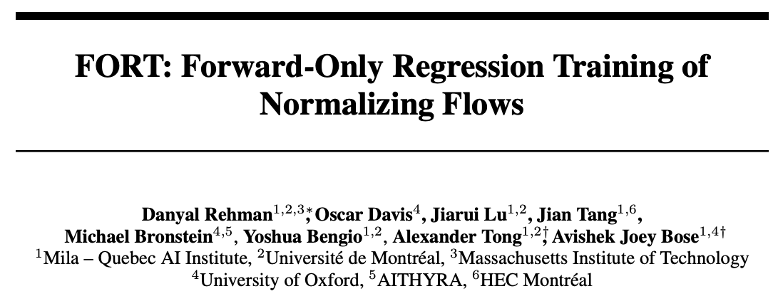

Excited to release FORT, a new regression-based approach for training normalizing flows 🔥! 🔗 Paper available here: arxiv.org/abs/2506.01158. New paper w/ Oscar Davis, Jiarui Lu, Jian Tang, Michael Bronstein, Yoshua Bengio, Alex Tong, and Joey Bose. 🧵1/6

Generative models excel at images and text, but tabular data remains a challenge. 🤔 We introduce 🐈 TabbyFlow 🐈: a variational flow matching approach with general exponential families for mixed-type tables. Work with Andrés Guzmán-Cordero & Jan-Willem van de Meent, accepted to #ICML2025 🎉 👇 1/n

We're spotlighting #WomenInSTEM and their inspiring journeys! Meet Marta Skreta, Computer Science PhD student at the University of Toronto. Video created by University of Toronto Biomedical Engineering students Meghan and Ana-Maria Oproescu, with support from Helen Tran and the AC’s EDI Initiate Grant. 🎥 youtube.com/watch?v=h2uRpm…

Check out FKCs! A principled, flexible approach to diffusion sampling. I was surprised how well it scaled to high dimensions, given its reliance on importance reweighting. Thanks to great collaborators at Mila - Institut québécois d'IA, Vector Institute, Imperial College London, and Google DeepMind. Thread👇🧵