Konstantin Schürholt

@k_schuerholt

AI Researcher at @ndea. Previously postdoc on weight space learning @ University of St.Gallen, Switzerland.

ID: 1151388211752636418

http://kschuerholt.github.io 17-07-2019 07:08:36

751 Tweets

189 Followers

328 Following

We just dropped a fun panel discussion with François Chollet, Zenna Tavares, and Kevin Ellis on program synthesis. This was a powerhouse conversation with three legends in the field, enjoy.

Excited to have Machine Learning Street Talk as a launch partner for ARC-AGI-2, featuring a deep dive interview with co-founders Mike Knoop and François Chollet.

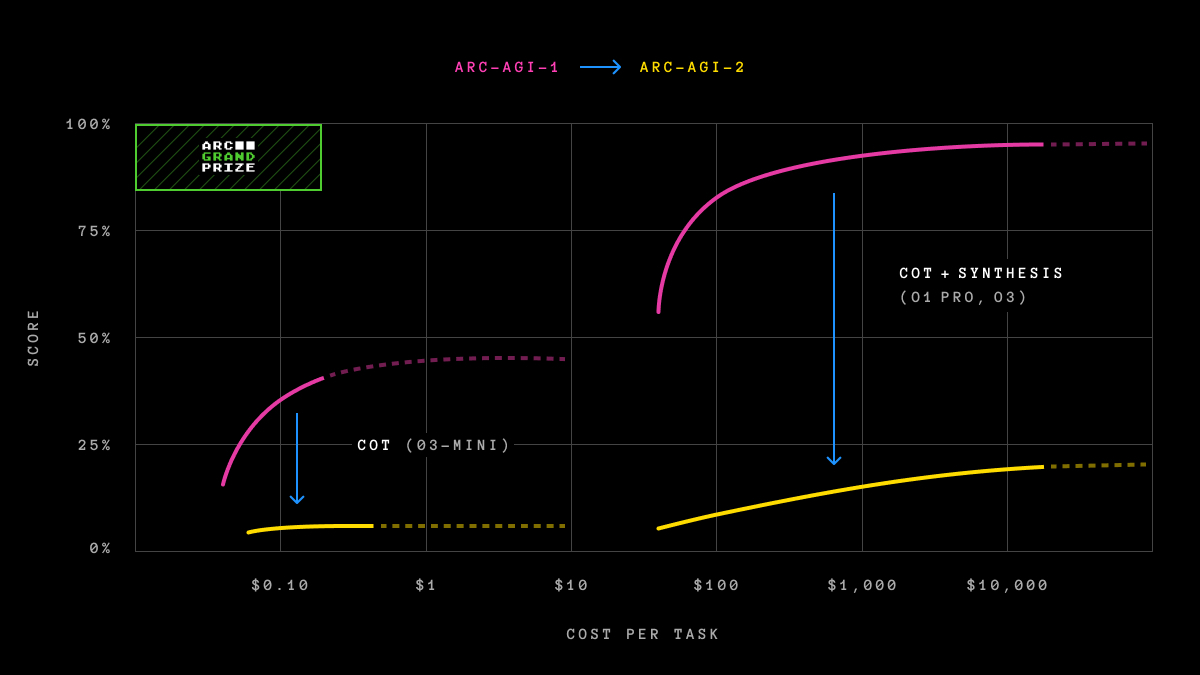

The chart below is IMO the most important thing we published today (h/t Bryan Landers). It shows that "scaling up" existing ideas, even the latest AI reasoning systems with log-linear accuracy/compute characteristics, is insufficient for AGI. We still need some architectural or

ARC-AGI-2 is here — the new leading AI program synthesis benchmark. It challenges models to reason, abstract, and generalize — not just memorize. Design led by François Chollet with key contributions from the Ndea team.

Are You Smarter Than A.I.? An interactive article by The New York Times covers ARC Prize and François Chollet: "Some experts predict that A.I. will surpass human intelligence within the next few years. Play this puzzle to see how far the machines have to go."

Beyond Brute Force: my conversation with star AI researcher François Chollet and Zapier’s Mike Knoop on ARC AGI 2 and why it breaks base LLMs, test-time adaptation, and the search for true machine intelligence. All links in reply to this post below.

Ndea co-founder François Chollet featured in The Atlantic. "The Rise of Fluid Intelligence — François Chollet is on a quest to make AI a bit more human." A story about the increasing relevance of ARC-AGI by Matteo Wong.

Join our ML Theory group next week on April 17th as they welcome Konstantin Schürholt for a session on "Weight Space Learning: Treating Neural Network Weights as Data". Thanks to Anier Velasco Sotomayor, Thang Chu and Andrej Jovanović for organizing this event 🥳

Join us tomorrow, April 17th, as we host Konstantin Schürholt for a session on "Weight Space Learning: Treating Neural Network Weights as Data". Learn more: cohere.com/events/cohere-…

François Chollet spoke at Y Combinator AI Startup School about going from AI to AGI. Why did pretraining scaling hit a wall? Does Test-Time Adaptation scale to AGI? What's next for AI? Plus Ndea's goal to build a lifelong-learning, self-improving, DL-guided program synthesis engine.

Customizing Your LLMs in seconds using prompts 🥳! Excited to share our latest work with HPC-AI Lab, VITA Group, Konstantin Schürholt, Yang You, Michael Bronstein, Damian Borth: Drag-and-Drop LLMs (DnD). 2 features: tuning-free, comparable or even better than full-shot tuning. (🧵1/8)