Karolina Stanczak

@karstanczak

Postdoc NLP @Mila_Quebec & @mcgillu | Previous PhD candidate @uni_copenhagen @CopeNLU

ID: 1285579351950598144

https://karstanczak.github.io/ 21-07-2020 14:16:29

109 Tweets

662 Followers

557 Following

🔔 Reminder & Call for #VLMs4All @ #CVPR2025! Help shape the future of culturally aware & geo-diverse VLMs: ⚔️ Challenges: Deadline: Apr 15 🔗sites.google.com/view/vlms4all/… 📄 Papers (4pg): Submit work on benchmarks, methods, metrics! Deadline: Apr 22 🔗sites.google.com/view/vlms4all/… Join us!

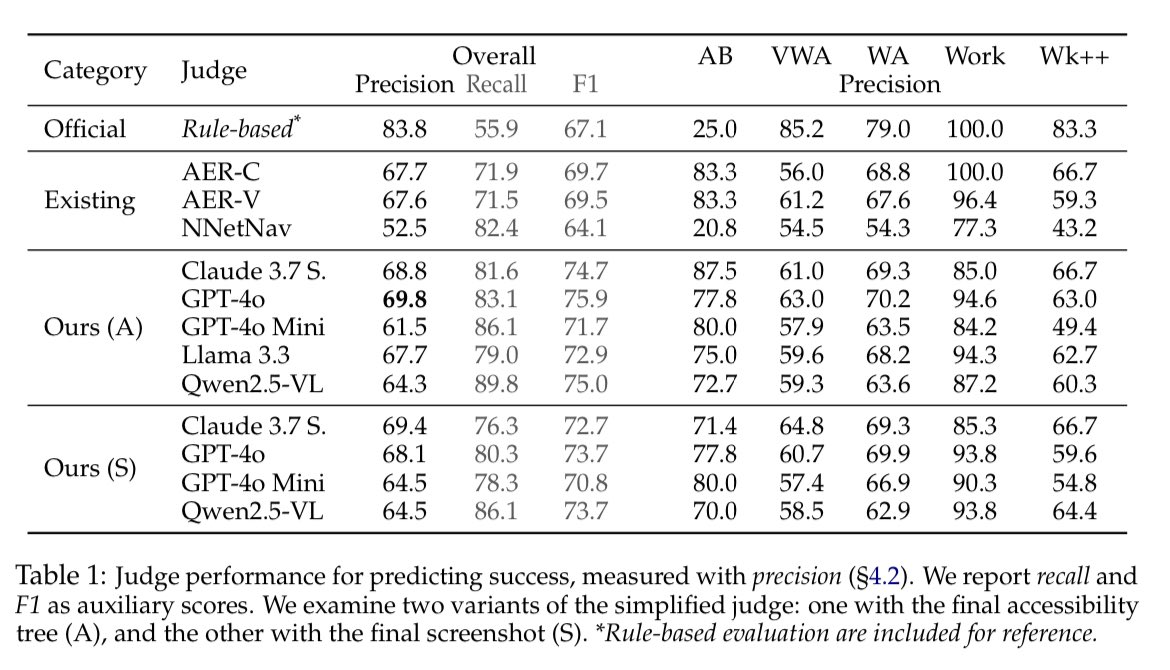

Check out Xing Han Lu's new benchmark for evaluating reward models for web tasks! AgentRewardBench has rich human annotations of trajectories from top LLM web agents across realistic web tasks and will greatly help steer the design of future reward models.

Exciting release! AgentRewardBench offers that much-needed closer look at evaluating agent capabilities: automatic vs. human eval. Important findings here, especially on the popular LLM judges. Amazing work by Xing Han Lu & team!

Benchmarking the performance of Models as judges of Agentic Trajectories 📖 Read of the day, season 3, day 30: « AgentRewardBench: Evaluating Automatic Evaluations of Web Trajectories », by Xing Han Lu, Amirhossein Kazemnejad et al. from McGill University and Mila - Institut québécois d'IA The core idea of the

Congratulations to Mila members Ada Tur, Gaurav Kamath and Siva Reddy for their SAC award at #NAACL2025! Check out Ada's talk in Session I: Oral/Poster 6. Paper: arxiv.org/abs/2502.05670

My lab’s contributions at #CVPR2025: -- Organizing VLMs4All - CVPR 2025 Workshop (with 2 challenges) sites.google.com/corp/view/vlms… -- 2 main conference papers (1 highlight, 1 poster) cvpr.thecvf.com/virtual/2025/p… (highlight) cvpr.thecvf.com/virtual/2025/p… (poster) -- 4 workshop papers (2 spotlight talks, 2