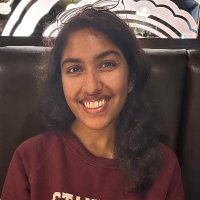

Keshigeyan Chandrasegaran

@keshigeyan

CS PhD student @Stanford. Research @StanfordAILab & @StanfordSVL. Prev: research @sutdsg (Temasek Labs), undergrad @sutdsg.

ID: 1095671366949335040

http://cs.stanford.edu/~keshik/

13-02-2019 13:09:45

175 Tweets

421 Followers

226 Following