Katherine Hermann

@khermann_

Research Scientist @GoogleDeepMind | Past: PhD from @Stanford

ID: 760986968855425025

03-08-2016 23:53:26

373 Tweets

1.1K Followers

1.1K Following

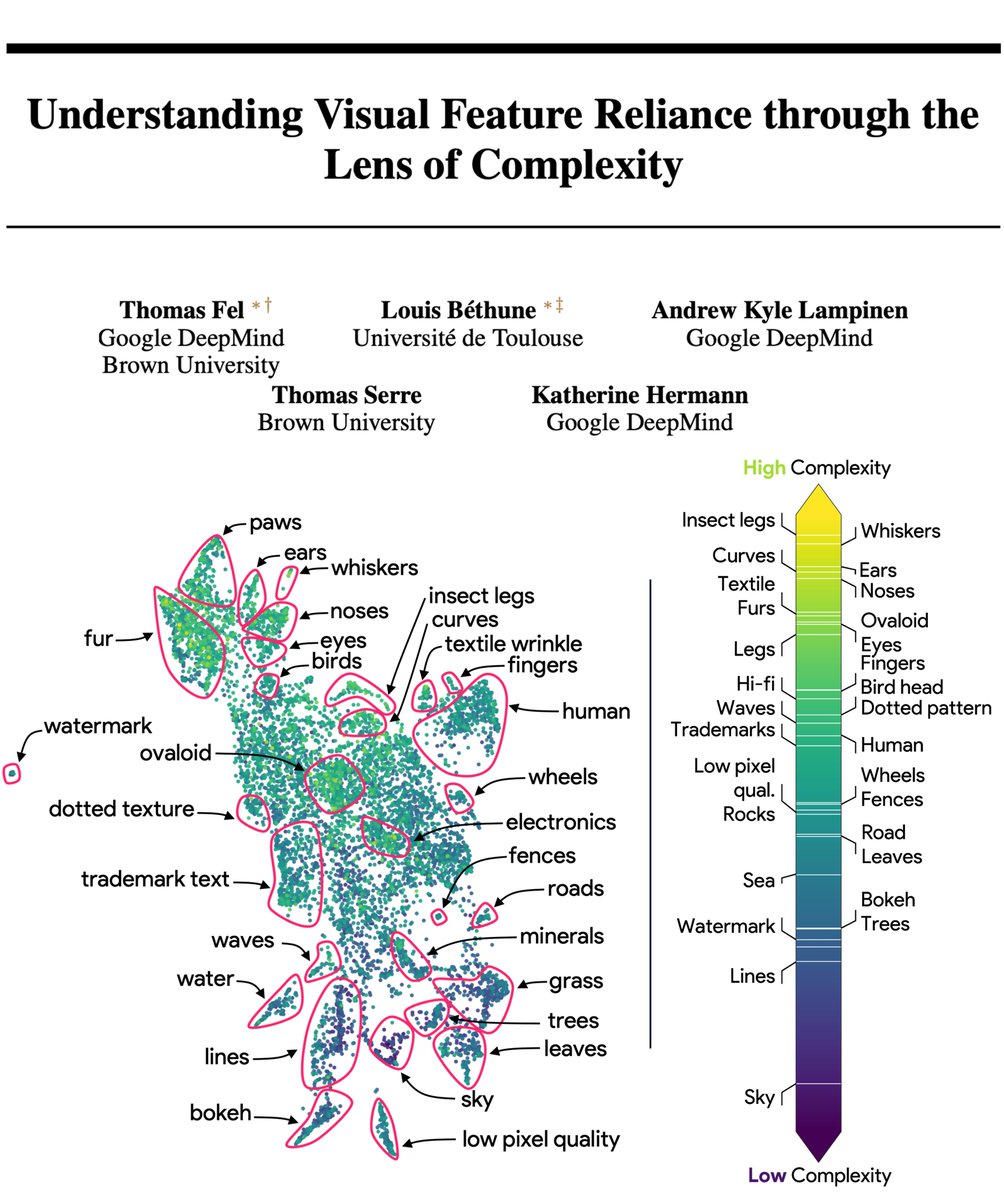

Feel free to check out our previous work: arxiv.org/abs/2310.16228. I also highly recommend this excellent related work by Andrew, Stephanie Chan, and Katherine: arxiv.org/pdf/2405.05847.

Had a lot of fun speaking with Eddie Avil about the practical challenges of scaling (especially in Embodied AI), NeuroAI, what to expect in the future, and advice for students getting into the field. Check it out here! youtube.com/watch?v=ZRo-fL…

Our first NeuroAgent! 🐟🧠 Excited to share new work led by the talented Reece Keller, showing how autonomous behavior and whole-brain dynamics emerge naturally from intrinsic curiosity grounded in world models and memory. Some highlights: - Developed a novel intrinsic drive