Kimbo Chen

@kimbochen

High-performance ML algorithms, compilers, and systems

ID: 2870711864

https://github.com/kimbochen/md-blogs

22-10-2014 10:53:31

1.1K Tweets

381 Followers

583 Following

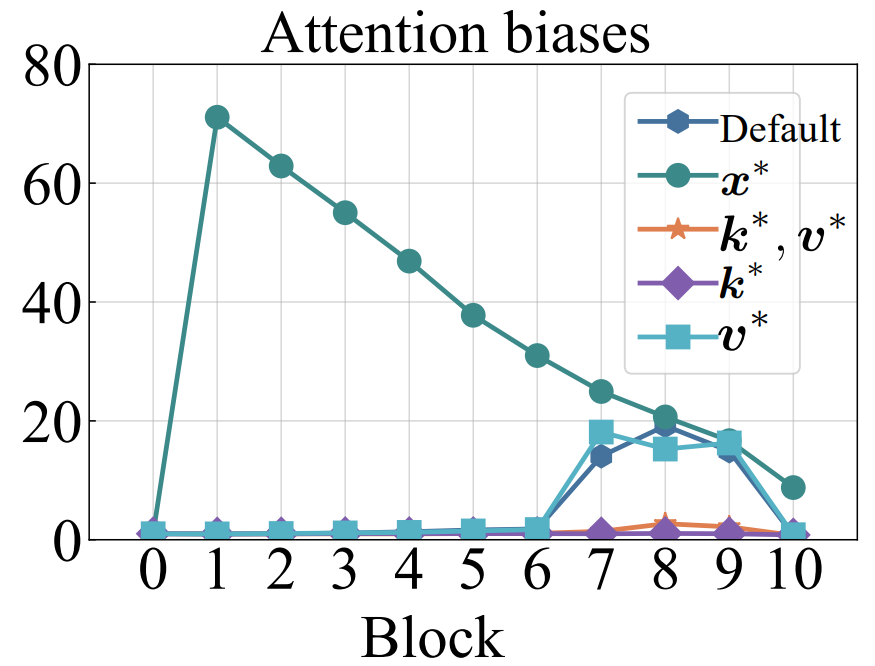

I noticed that OpenAI added a learnable bias to the attention logits before the softmax, then deleted the bias column after the softmax. This is similar to what I did in my ICLR 2025 paper: openreview.net/forum?id=78Nn4…. I used a learnable key bias and set the corresponding value bias to zero. In this way, …
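A minimal single-head sketch of the two ideas in the post, assuming no causal mask and assuming the OpenAI-style bias is one scalar logit per head (`sink_logit`, a hypothetical name) appended as an extra softmax column. The second function appends a learnable key (`k_bias`, also hypothetical) whose value vector is fixed to zero. Both let part of the probability mass go "nowhere". This is an illustration, not the paper's code.

```python
import math


def softmax(logits):
    # Numerically stable softmax over a list of floats.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def weighted_sum(weights, vectors):
    # sum_j weights[j] * vectors[j], computed per output dimension.
    dim = len(vectors[0])
    return [sum(w * vec[i] for w, vec in zip(weights, vectors)) for i in range(dim)]


def sink_logit_attention(q, k, v, sink_logit):
    """Scalar bias logit joins the softmax, then its column is dropped."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        logits = [dot(qi, kj) * scale for kj in k] + [sink_logit]
        probs = softmax(logits)[:-1]  # remaining weights now sum to less than 1
        out.append(weighted_sum(probs, v))
    return out


def key_bias_attention(q, k, v, k_bias):
    """Extra learnable key whose value vector is fixed to zero."""
    scale = 1.0 / math.sqrt(len(q[0]))
    k_ext = k + [k_bias]
    v_ext = v + [[0.0] * len(v[0])]  # value bias set to zero
    out = []
    for qi in q:
        probs = softmax([dot(qi, kj) * scale for kj in k_ext])
        out.append(weighted_sum(probs, v_ext))  # zero value contributes nothing
    return out
```

With `k_bias` set to the zero vector, every query gets an extra logit of 0, so `key_bias_attention(q, k, v, [0.0, 0.0])` matches `sink_logit_attention(q, k, v, 0.0)`. In general the key-bias logit depends on the query, while the scalar variant is the same for every query.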

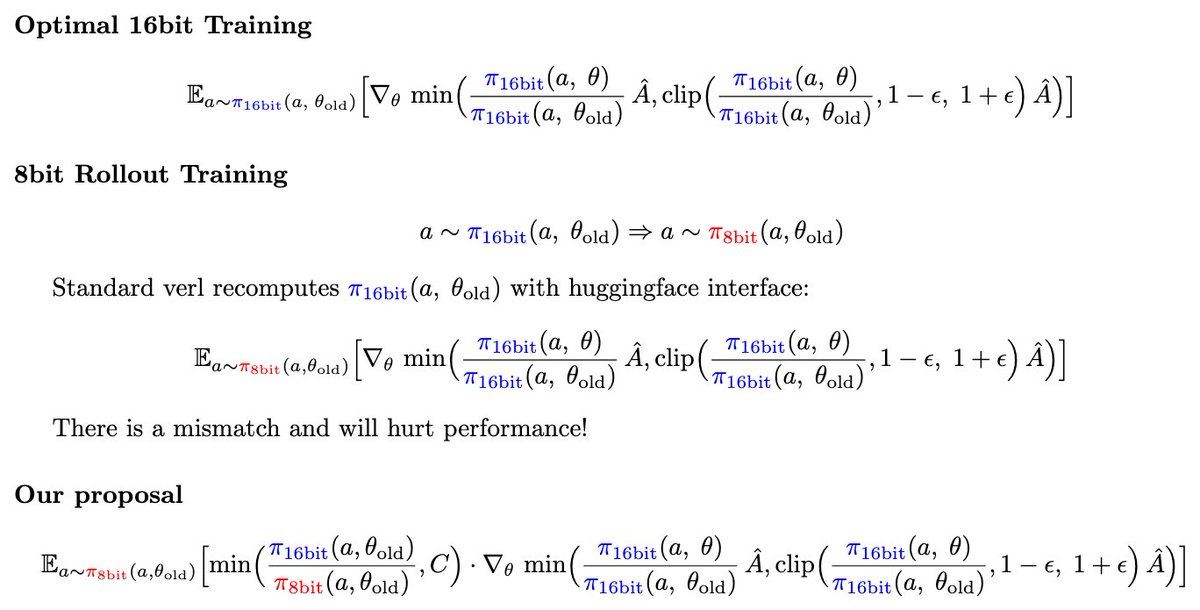

Liyuan Liu (Lucas), Chengyu Dong, Dinghuai Zhang 张鼎怀, Jingbo Shang, Jianfeng Gao: (2/4) What’s the **secret sauce**? We build on our previous **truncated importance sampling (TIS)** blog (fengyao.notion.site/off-policy-rl) to address this issue. Here’s a quick summary of how it works.
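A minimal sketch of generic truncated importance sampling for a token-level policy gradient, assuming per-token log-probabilities from the policy being trained (target) and from the policy that produced the rollouts (behavior), plus a cap `C`. The function names and the default `cap` are hypothetical, and the exact loss in the linked blog may differ.

```python
import math


def truncated_is_weights(target_logprobs, behavior_logprobs, cap):
    """Per-token weights w_t = min(pi_target / pi_behavior, cap)."""
    return [min(math.exp(lp_t - lp_b), cap)
            for lp_t, lp_b in zip(target_logprobs, behavior_logprobs)]


def tis_surrogate(target_logprobs, behavior_logprobs, advantages, cap=2.0):
    """Objective to maximize: mean of w_t * A_t * log pi_target(a_t | s_t).

    In an autograd framework the weights would be detached (treated as
    constants), so the gradient is a truncated-IS-corrected policy gradient.
    """
    weights = truncated_is_weights(target_logprobs, behavior_logprobs, cap)
    terms = [w * a * lp for w, a, lp in zip(weights, advantages, target_logprobs)]
    return sum(terms) / len(terms)
```

Truncating the ratio bounds the variance that a few tokens with a large target/behavior mismatch would otherwise add, at the cost of some bias.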

H100 vs GB200 NVL72 Training Benchmarks: Power, TCO, and Reliability Analysis, Software Improvement Over Time. Joules per Token, TCO per Million Tokens, MFU, Tokens per US Annual Household Energy Usage, DeepSeek 670B, GB200 Unreliability, Backplane Downtime. semianalysis.com/2025/08/20/h10…