Kimin

@kimin_le2

Assistant professor at KAIST. Prev: Research scientist @GoogleAI, Postdoc @berkeley_ai & Ph.D at KAIST.

ID: 1074633382452051969

https://sites.google.com/view/kiminlee 17-12-2018 11:52:18

403 Tweets

1.1K Followers

363 Following

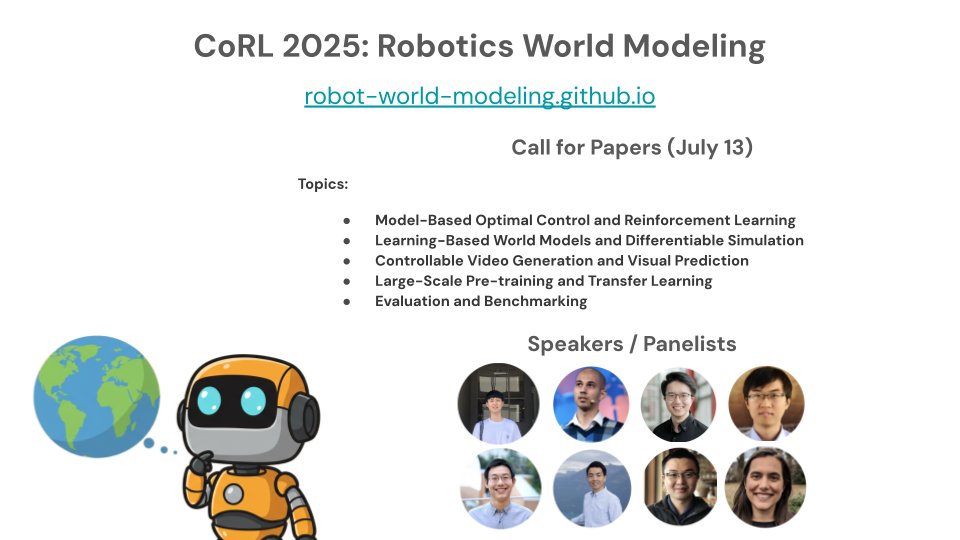

🤖🌎 We are organizing a workshop on Robotics World Modeling at the Conference on Robot Learning (CoRL) 2025! We have an excellent group of speakers and panelists, and we invite you to submit your papers by the July 13 deadline. Website: robot-world-modeling.github.io

If you are interested in image-to-video (I2V) generation, please check out June Suk Choi’s recent work! It's a simple and effective method grounded in careful analysis.

📢 Excited to announce the 1st workshop on Making Sense of Data in Robotics at the Conference on Robot Learning! #CORL2025 What makes robot learning data “good”? We focus on: 🧩 Data Composition 🧹 Data Curation 💡 Data Interpretability 📅 Papers due: 08/22/2025 🌐 tinyurl.com/corldata25 🧵(1/3)

🧵When training reasoning models, what's the best approach? SFT, Online RL, or perhaps Offline RL? At KRAFTON AI and SK telecom, we've explored this critical question, uncovering interesting insights! Let’s dive deeper, starting with the basics first. 1) SFT SFT (aka hard

We're hosting the 1st workshop on Making Sense of Data in Robotics at the Conference on Robot Learning this year! We'll investigate what makes robot learning data "good" by discussing: 🧩 Data Composition 🧹 Data Curation 💡 Data Interpretability Paper submissions are due 8/22/2025! 🧵(1/3)

Instead of automating what humans do, we explore AI agents that help people stay focused and follow through with intention. Introducing INA, an AI agent for intentional living (led by Juheon Choi). I believe INA is a step toward human-centered AI that supports more mindful