Koustuv Sinha

@koustuvsinha

Research Scientist @MetaAI; PhD from @mcgillu + @Mila_Quebec; I organize ML Reproducibility Challenge (@repro_challenge). I work on Interpretable multimodal ML

ID: 42234513

https://koustuvsinha.com 24-05-2009 16:12:07

946 Tweets

2.2K Followers

761 Following

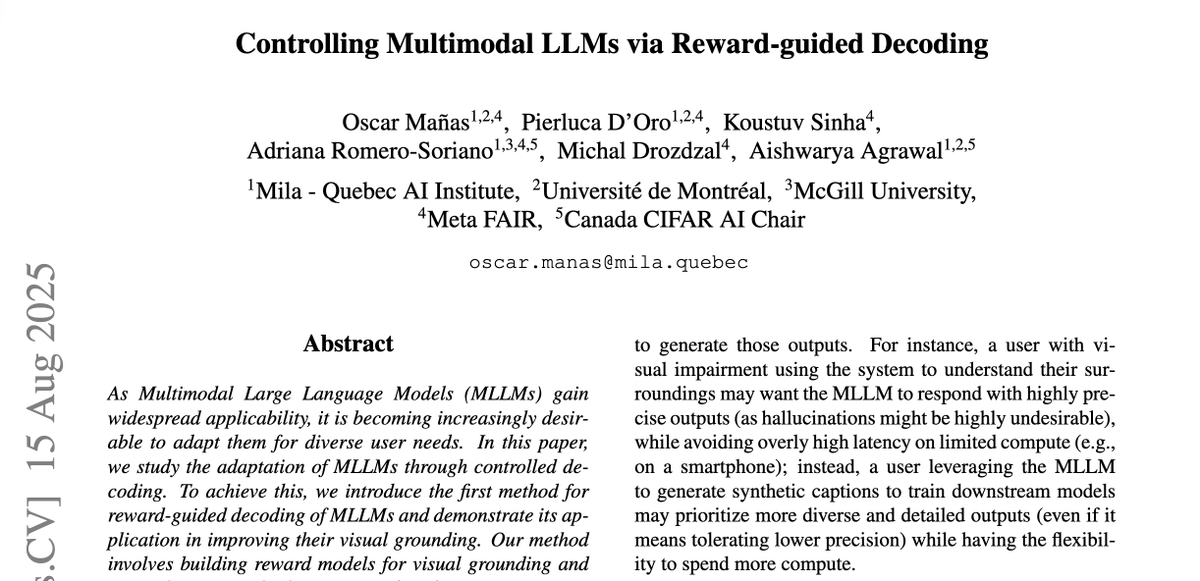

I’m happy to share that our paper "Controlling Multimodal LLMs via Reward-guided Decoding" has been accepted to #ICCV2025! 🎉 w/ Pierluca D'Oro, Koustuv Sinha, Adriana Romero-Soriano, Michal Drozdzal, and Aishwarya Agrawal 🔗 Read more: arxiv.org/abs/2508.11616 🧵 Here's what we did:

#MLRC2025 happening this week (August 21st)! The first keynote talk [1/4] will be from Arvind Narayanan at 9.45AM EST

![The ML Reproducibility Challenge (@repro_challenge) on Twitter: #MLRC2025 first keynote talk [1/4] from Arvind Narayanan at 9.45AM EST](https://pbs.twimg.com/media/GypvCNeWUAAJsg7.jpg)

#MLRC2025 happening this week (August 21st)! The second keynote talk [2/4] will be from Soumith Chintala at 10:30AM EST

![The ML Reproducibility Challenge (@repro_challenge) on Twitter: #MLRC2025 second keynote talk [2/4] from Soumith Chintala at 10:30AM EST](https://pbs.twimg.com/media/Gypv2WxWQAAWrWS.jpg)

#MLRC2025 happening this week (August 21st)! The third keynote talk [3/4] will be from Stella Biderman at 1pm EST

![The ML Reproducibility Challenge (@repro_challenge) on Twitter: #MLRC2025 third keynote talk [3/4] from Stella Biderman at 1pm EST](https://pbs.twimg.com/media/GypwWm7XsAAhY47.jpg)

#MLRC2025 happening this week (August 21st)! The fourth keynote talk [4/4] will be from Jonathan Frankle at 1.45PM EST

![The ML Reproducibility Challenge (@repro_challenge) on Twitter: #MLRC2025 fourth keynote talk [4/4] from Jonathan Frankle at 1.45PM EST](https://pbs.twimg.com/media/GypwzqOWMAAuYBF.jpg)

Excited for tomorrow’s ML Reproducibility Challenge #MLRC2025 event!! Really nice setup by the Princeton Laboratory for Artificial Intelligence at the Princeton University Friend Center - looking forward to seeing you here tomorrow at 9AM!

Awesome #MLRC2025 talks kicking us off this morning! I'm learning lots at The ML Reproducibility Challenge about science with ML and reproducibility for real-world applications (Arvind Narayanan), and software/firmware and data concerns for reproducibility (Soumith Chintala). Slides coming soon!

It's a wrap - the first in-person event for #MLRC2025 successfully concluded yesterday - we witnessed some of the best talks I have ever heard on reproducibility issues in AI, ranging from issues regarding leakage and irreproducibility in ML-based science (Arvind Narayanan),

LLMs are great at single-shot problems, but in the era of experience, interactive environments are key 🔑 Introducing *Multi-Turn Puzzles (MTP)*, a new benchmark to test multi-turn reasoning and strategizing 🔗 Paper: huggingface.co/papers/2508.10… 🫙 Data: huggingface.co/datasets/arian…