William Merrill

@lambdaviking

Will irl - Ph.D. student @NYUDataScience

ID: 391600060

https://lambdaviking.com/ 15-10-2011 20:39:17

1.1K Tweets

2.2K Followers

633 Following

Cool benchmark idea! And down to showmatch vs. Claude in Age of Empires ⚔️ Ofir Press

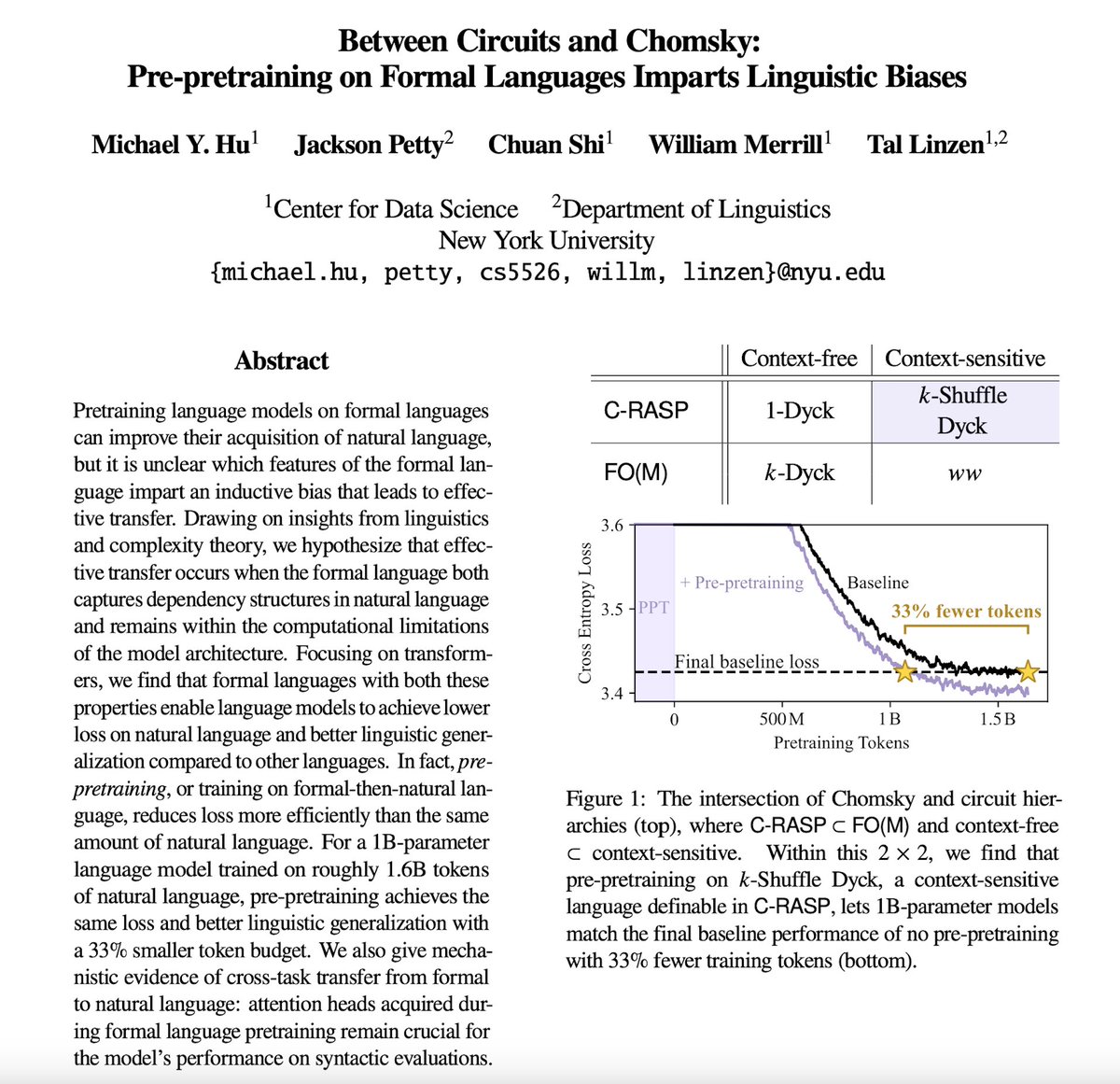

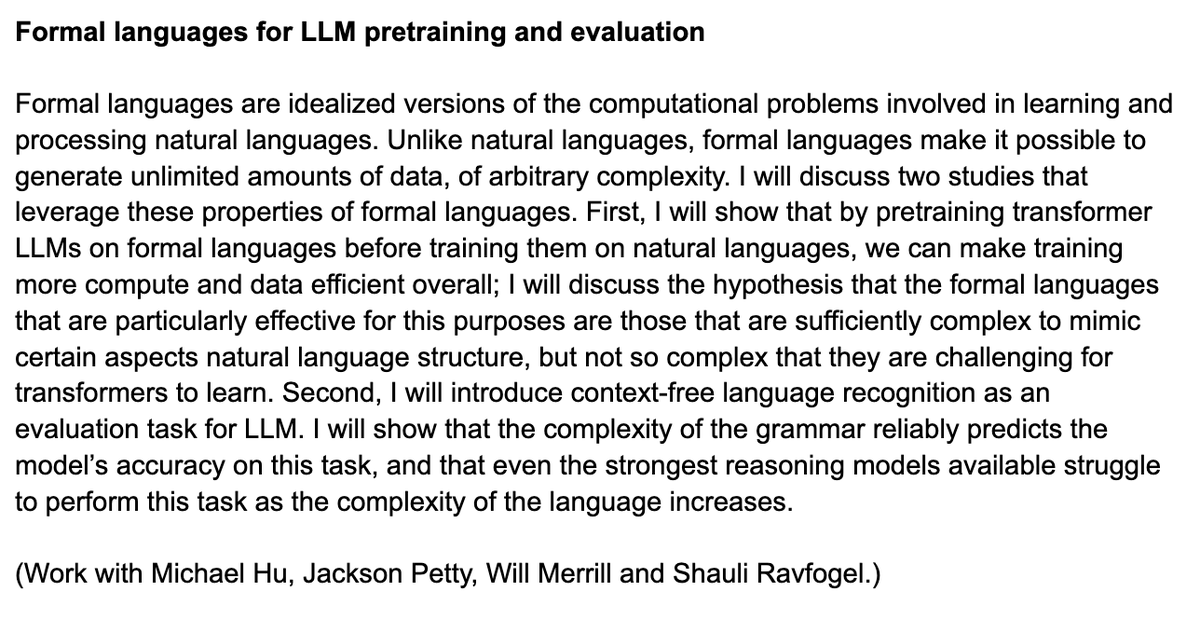

I'll also be at ACL and excited to talk about Michael Hu's project!

New on arXiv: Knee-Deep in C-RASP, by Andy J Yang, Michael Cadilhac and me. The solid stepped line is our theoretical prediction based on what problems C-RASP can solve, and the numbers/colors are what transformers (no position embedding) can learn.

We’re proud to announce three new tenure-track assistant professors joining TTIC in Fall 2026: Yossi Gandelsman, Will Merrill (William Merrill), and Nick Tomlin (Nicholas Tomlin). Meet them here: buff.ly/JH1DFtT