Lechen Zhang

@leczhang

Incoming CS PhD @UofIllinois | MSc @UMich | BEng @SJTU1896.

Interested in #NLProc & #AI.

ID: 1566273820817768449

https://leczhang.com/ 04-09-2022 03:56:09

51 Tweets

97 Followers

303 Following

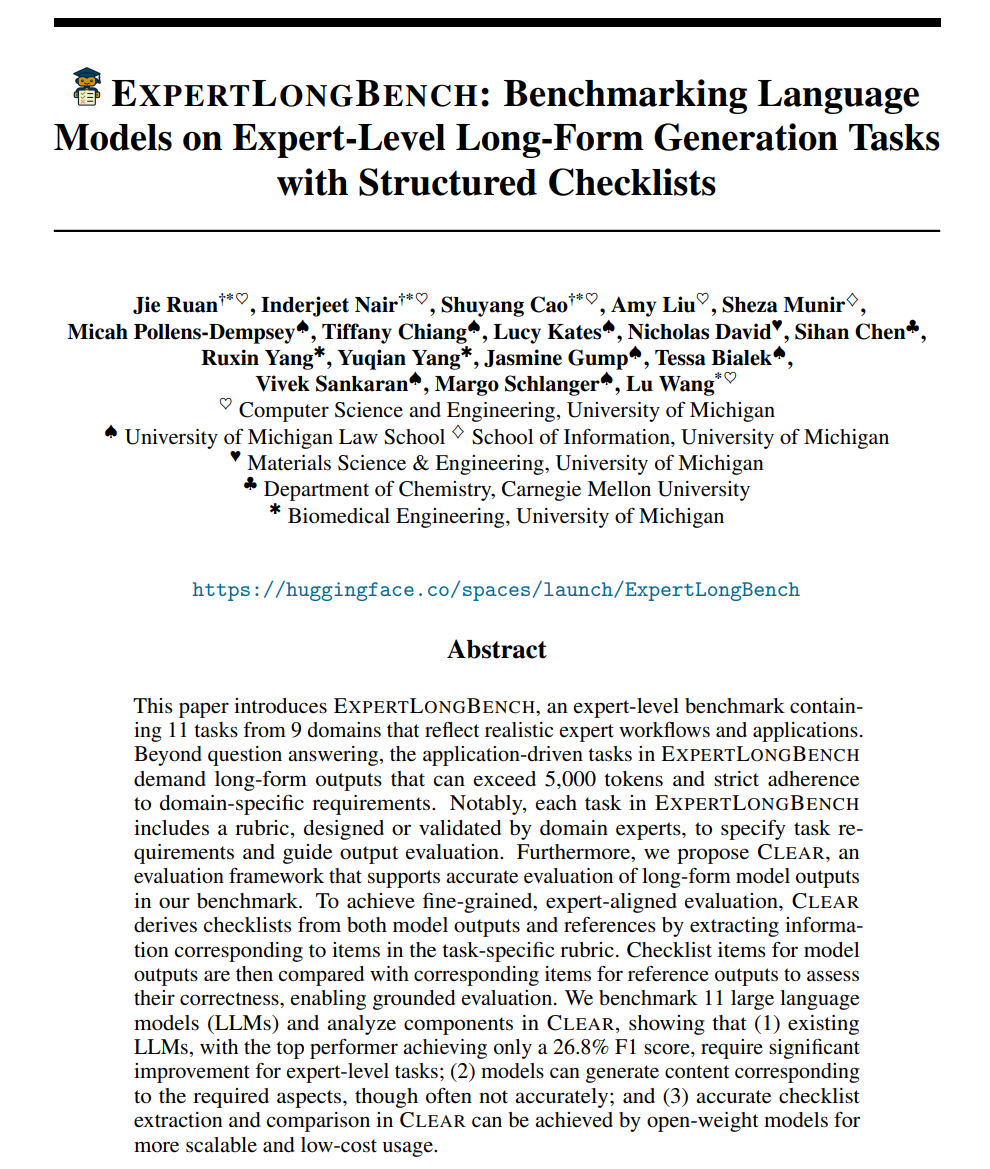

How accurately do citations reflect the original research? Do authors truly engage with what they cite? In a new study [arxiv.org/abs/2502.20581] with David Jurgens and Misha Teplitskiy, we analyze millions of citation sentence pairs to measure citation fidelity and reveal how

![Hong Chen (@_hong_chen) on Twitter photo](https://pbs.twimg.com/media/GluRBV-WsAAXe2M.png)

✨Personal Milestone✨ Thrilled to share I’ll be a tenure-track Assistant Professor in Computer Science at NYU Shanghai, affiliated with NYU Tandon, starting Fall 2025! 😊🌏NYU Shanghai, NYU Tandon, New York University 🧠I’ll be recruiting students via NYU Courant CS & NYU Tandon CSE

How did #IGotAThingFor become a thing? Louise Zhu, David Jurgens, Daniel Romero, and I explored the roles of networks and identity in the adoption of hashtags in our new The Web Conference paper (Poster 01, Thu 5pm)! dl.acm.org/doi/pdf/10.114… #www2025 #thewebconf2025 1/9

🚨Announcing SCALR @ COLM 2025 — Call for Papers!🚨 The 1st Workshop on Test-Time Scaling and Reasoning Models (SCALR) is coming to the Conference on Language Modeling in Montreal this October! This is the first workshop dedicated to this growing research area. 🌐 scalr-workshop.github.io

🚨 Deadline for SCALR 2025 Workshop: Test‑time Scaling & Reasoning Models at COLM '25 Conference on Language Modeling is approaching!🚨 scalr-workshop.github.io 🧩 Call for short papers (4 pages, non‑archival) now open on OpenReview! Submit by June 23, 2025; notifications out July 24. Topics

What happens behind the "abrupt learning" curve in Transformer training? Our new work (led by Pulkit Gopalani) reveals universal characteristics of Transformers' early-phase training dynamics—uncovering the implicit biases and the degenerate state the model gets stuck in. ⬇️