Leopold Aschenbrenner

@leopoldasch

situational-awareness.ai

ID: 2989966781

21-01-2015 14:18:33

1.1K Tweets

67.67K Followers

3.3K Following

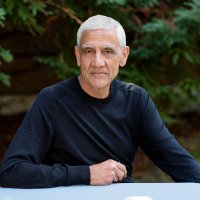

Vinod Khosla: The pace is incredibly fast.

Why Leopold Aschenbrenner didn't go into economic research. From dwarkeshpatel.com/p/leopold-asch… This was a great listen, and Leopold Aschenbrenner is underrated.