Linus Pin-Jie Lin

@linusdd44804

PhD @VT_CS, Master @LstSaar. Interested in efficient model development & modular LMs

ID: 1115673042414211072

https://pjlintw.github.io/

09-04-2019 17:49:16

109 Tweets

70 Followers

338 Following

My first PhD paper is out 😆 took 7 months and lots of back-and-forth. Learned so much from Tu — sharp thinking, real feedback, and always pushing the idea further. Also, shoutout to my collaborators and the folks at Virginia Tech Computer Science!

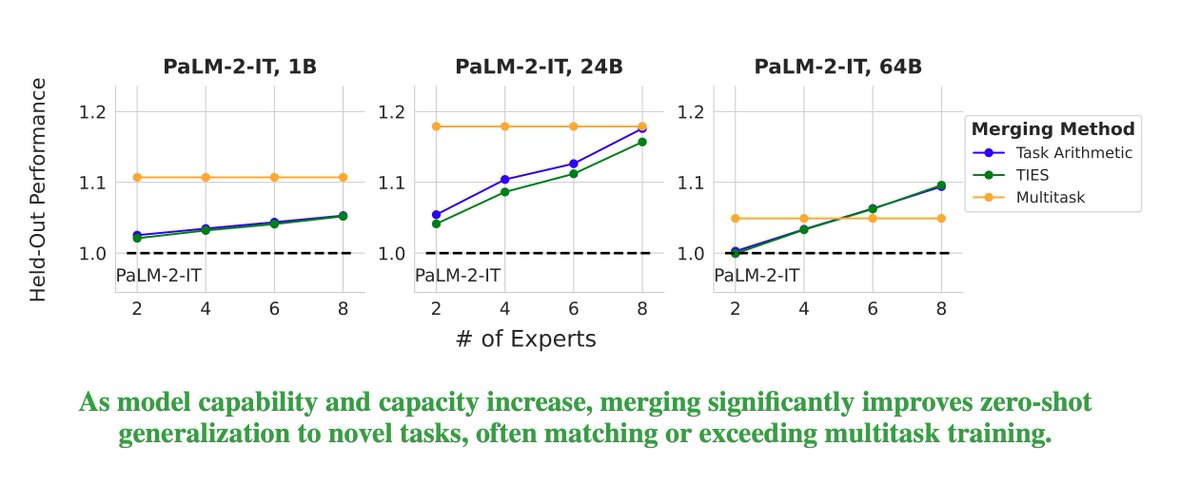

Excited to share that our paper on model merging at scale has been accepted to Transactions on Machine Learning Research (TMLR). Huge congrats to my intern Prateek Yadav and our awesome co-authors Jonathan Lai, Alexandra Chronopoulou, Manaal Faruqui, Mohit Bansal, and Tsendsuren 🎉!!

This work got accepted at Transactions on Machine Learning Research (TMLR). Congratulations to Prateek Yadav and my co-authors. Also, thank you to the reviewers and editors for their time.