Yaron Lipman

@lipmanya

Research scientist @AIatMeta (FAIR), prev/visiting @WeizmannScience. Interested in generative models and deep learning of irregular/geometric data.🎗️

ID: 2698179823

01-08-2014 12:34:45

286 Tweets

3.3K Followers

448 Following

Reward-driven algorithms for training dynamical generative models lag significantly behind their data-driven counterparts in scalability. We aim to fix this. Adjoint Matching poster (Carles Domingo-Enrich), Sat 3pm, and Adjoint Sampling oral (Aaron Havens), Mon 10am, FPI

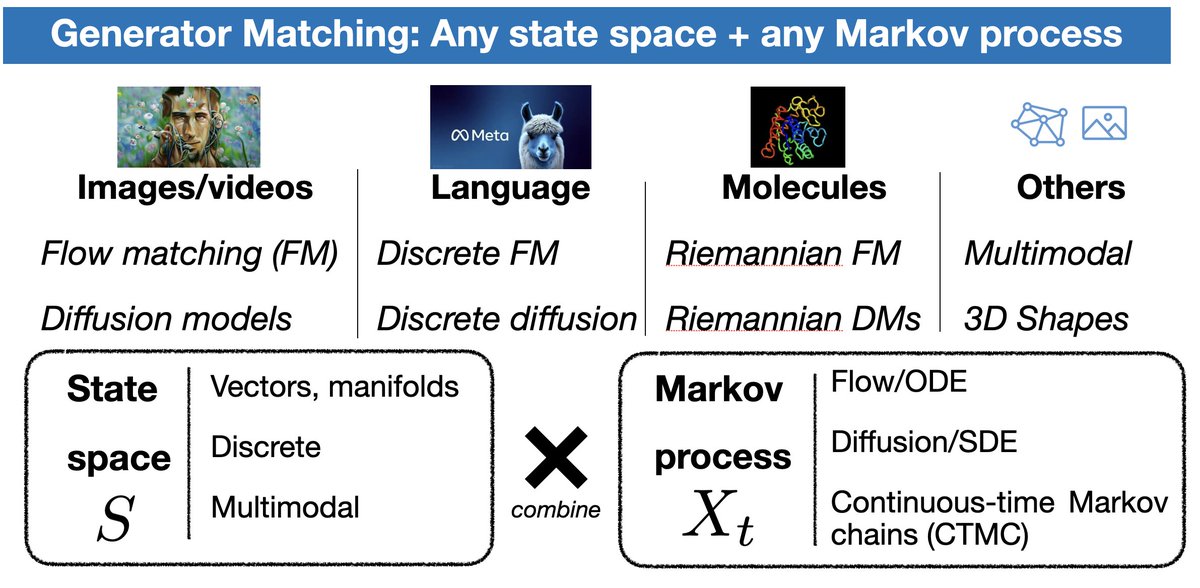

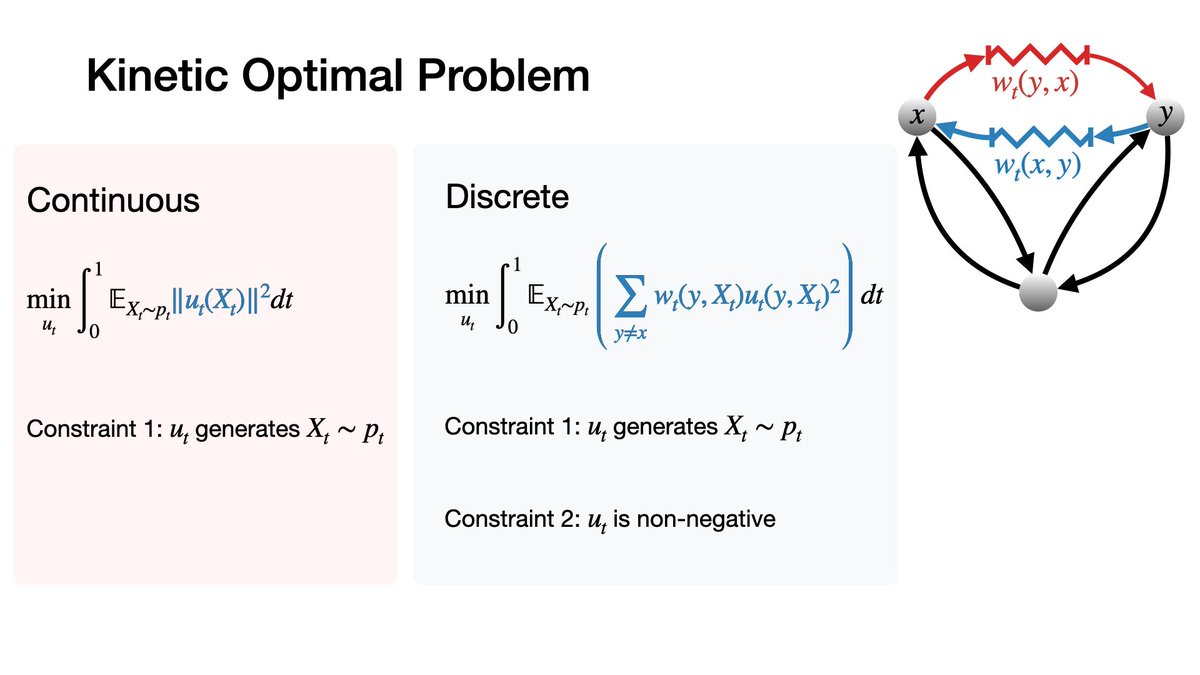

Even better if friends and colleagues present in the same session :) Our work on “Flow Matching with General Discrete Paths” will be presented by Neta Shaul shortly afterwards. Check it out, too! Paper: arxiv.org/abs/2412.03487

Had an absolute blast presenting at #ICLR2025! Thanks to everyone who came to visit my poster 🙌 Special shoutout to Scott H. Hawley for taking a last-minute photo 📸

Excited to share our recent work on corrector sampling in language models! A new sampling method that mitigates error accumulation by iteratively revisiting tokens in a window of previously generated text. With: Neta Shaul, Uriel Singer, Yaron Lipman. Link: arxiv.org/abs/2506.06215

[1/n] New paper alert! 🚀 Excited to introduce 𝐓𝐫𝐚𝐧𝐬𝐢𝐭𝐢𝐨𝐧 𝐌𝐚𝐭𝐜𝐡𝐢𝐧𝐠 (𝐓𝐌)! We're replacing the short-timestep kernels of Flow Matching/Diffusion with... a generative model🤯, achieving SOTA text-to-image generation! With: Uriel Singer, Itai Gat, Yaron Lipman

Check out our team's latest work, led by Uriel Singer and Neta Shaul!

Introducing Transition Matching (TM): a new generative paradigm that unifies Flow Matching and autoregressive models in a single framework, boosting both quality and speed! Thank you for the great collaboration, Neta Shaul, Itai Gat, Yaron Lipman!