Xingyu Bruce Liu

@liu_xingyu

PhD student @UCLAengineering; Previously @Google @Meta @Snap @cmuhcii @UTokyo_News_en. Research #HCI, #AI, #AR, #a11y. Also make music🎸

ID: 1186171916090068992

https://liubruce.me 21-10-2019 06:46:53

44 Tweets

380 Followers

362 Following

What if #AI could assist you w/ relevant realtime visuals in #AR during conversations? Check out Project #VisualCaptions: duruofei.com/projects/augme… Kudos to Xingyu Bruce Liu, Vlad, Xiuxiu, Alex Olwal, Xiang 'Anthony' Chen, and Peggy Chi for the wonderful teamwork! ACM SIGCHI #CHI2023 #GPT3 #LLM

Are you tired of jittery captions in Google Meet, Zoom, Microsoft Teams, #FaceTime, Skype? Check out our latest research on text stability in live captions at ACM SIGCHI #CHI2023! #TextStability paper available at duruofei.com/projects/augme… #accessibility #a11y #HCI #UX #AR

Congrats to Xingyu Bruce Liu on winning the Edward K. Rice Outstanding Master’s Student award!

#VisualCaptions CHI'23 is now #opensource at github.com/google/archat! Feel free to star+fork! A big kudos to Xingyu Bruce Liu and the #ARChat + #Rapsai teams for an amazing year of dedication and hard work! You may need to apply for API keys to run it, but we verified that it works :) #AR ACM CHI Conference ACM UIST

Congrats to our 11 UCLA doctoral students from computer science (UCLA CS Department), bioengineering (UCLA Bioengineering), and electrical and computer engineering (UCLA Electrical and Computer Engineering) who have been awarded Amazon fellowships by Amazon Science to conduct #AI research. sciencehub.ucla.edu/2023-amazon-fe…

Congrats to Xingyu Bruce Liu on receiving the Amazon PhD Fellowship!

traveling to #UIST2023 from LA along the beautiful west coast w/ Xingyu Bruce Liu & Nazlı Doğan

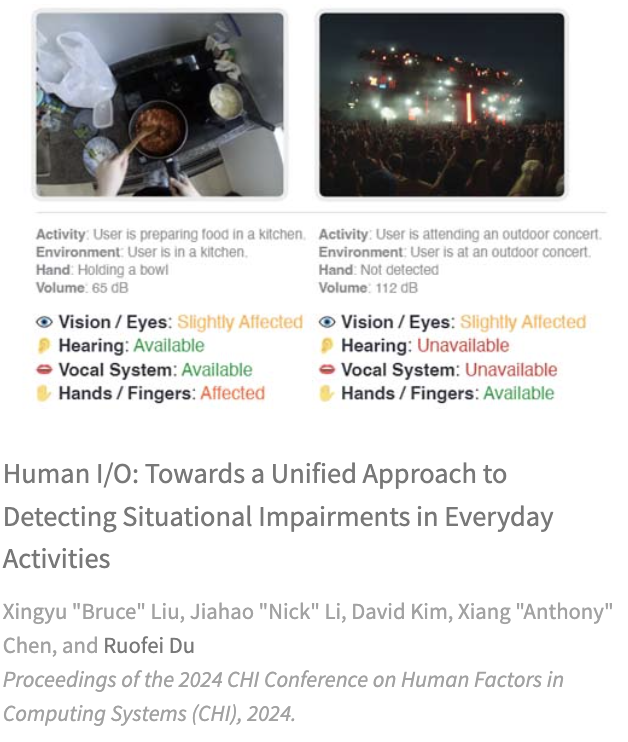

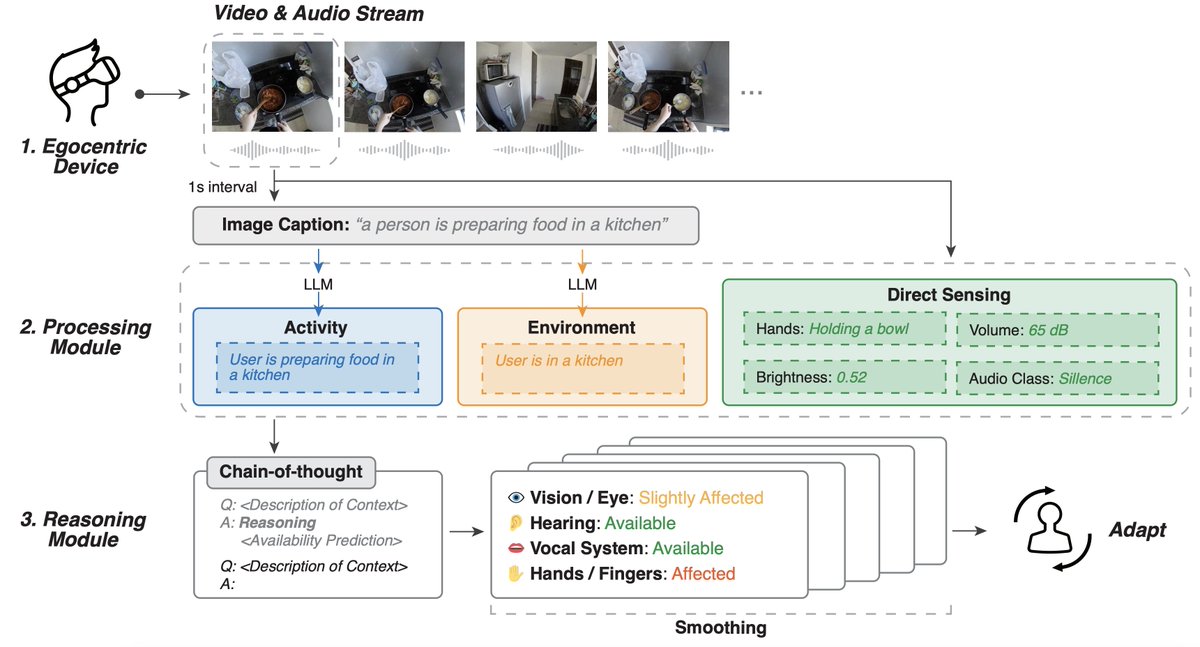

Hearty congratulations to Xun, Xingyu Bruce Liu, Siyou Pei (on job market), and the team on the #CHI2024 papers in #XR & #AI! Can't wait to finalize the revision and share the latest invention with the world! Thanks to Purdue Mechanical Engineering, UCLA Computer Science, and UCLA Electrical and Computer Engineering for the AMAZING collaboration with Google AI!