yingzhen

@liyzhen2

teaching machines 🤖 to learn 🔍 and fantasise 🪄

now @ImperialCollege @ICComputing

ex @MSFTResearch @CambridgeMLG

currently helping @aistats_conf

ID: 559572885

http://yingzhenli.net

21-04-2012 12:26:18

535 Tweets

3.3K Followers

155 Following

A little chapter that we (Ruqi Zhang, awesome students, and yours truly) wrote a while ago to give statisticians a brief intro to this nice field 😊

This paper took Carles Balsells Rodas ~5 years in the making:
2020/21: MSc proj, toy exp 🐣
2022: added more exp, rejected due to weak theory 😥
2023/24: invented a new proof tech in another proj 🤔
2024/25: revisited, applied the new proof tech, resubmitted -> accepted 🥳
Persistence pays off indeed 👍

Congrats to Daniel Marks and Dario Paccagnan! 👍 Daniel was an MEng student at Imperial Computing and this paper came from his MEng thesis.

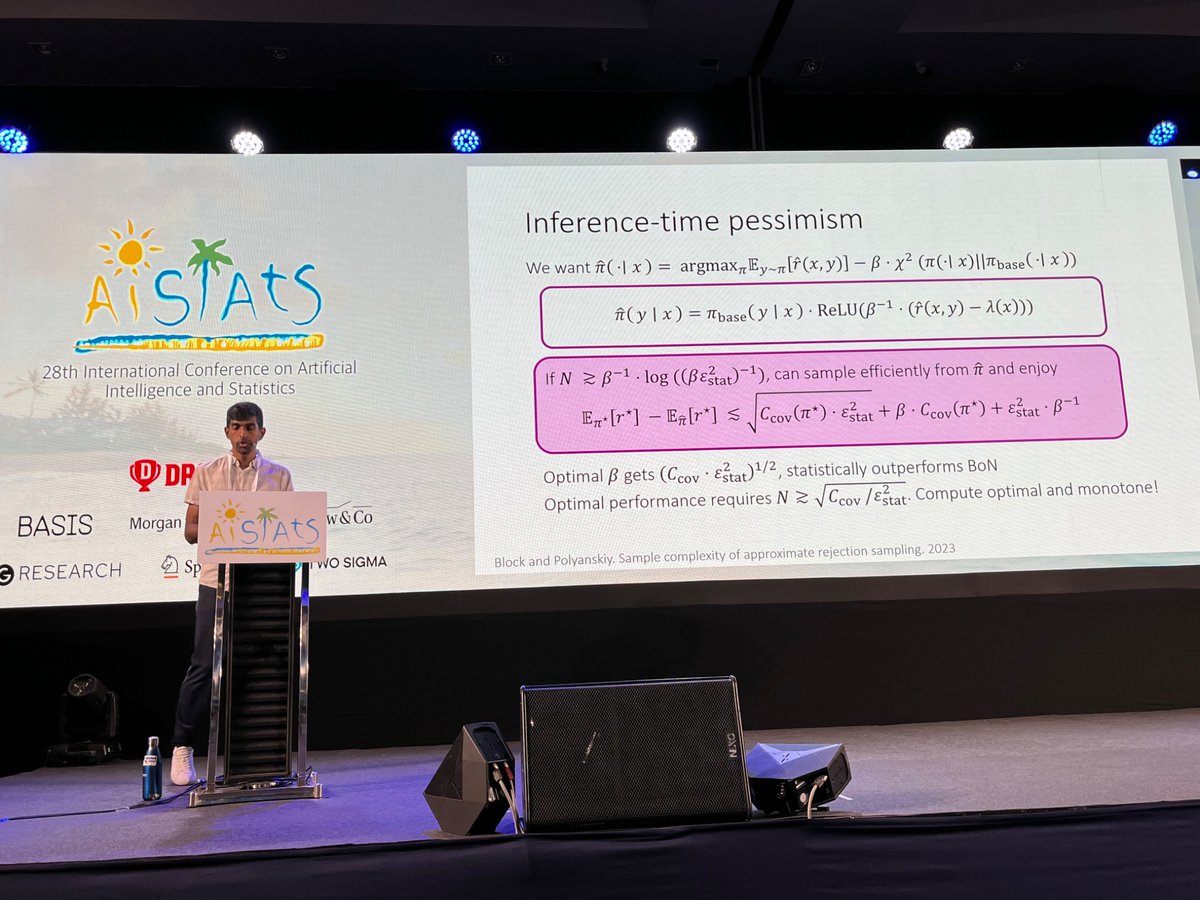

#AISTATS2025 day 3 keynote by Akshay Krishnamurthy on how to do theory research on inference-time compute 👍 AISTATS Conference