Lorenzo Noci

@lorenzo_noci

PhD in Machine Learning at @ETH

working on deep learning theory and principled large-scale AI models.

ID: 2207106105

https://lorenzonoci.github.io/

04-12-2013 08:20:18

67 Tweets

374 Followers

257 Following

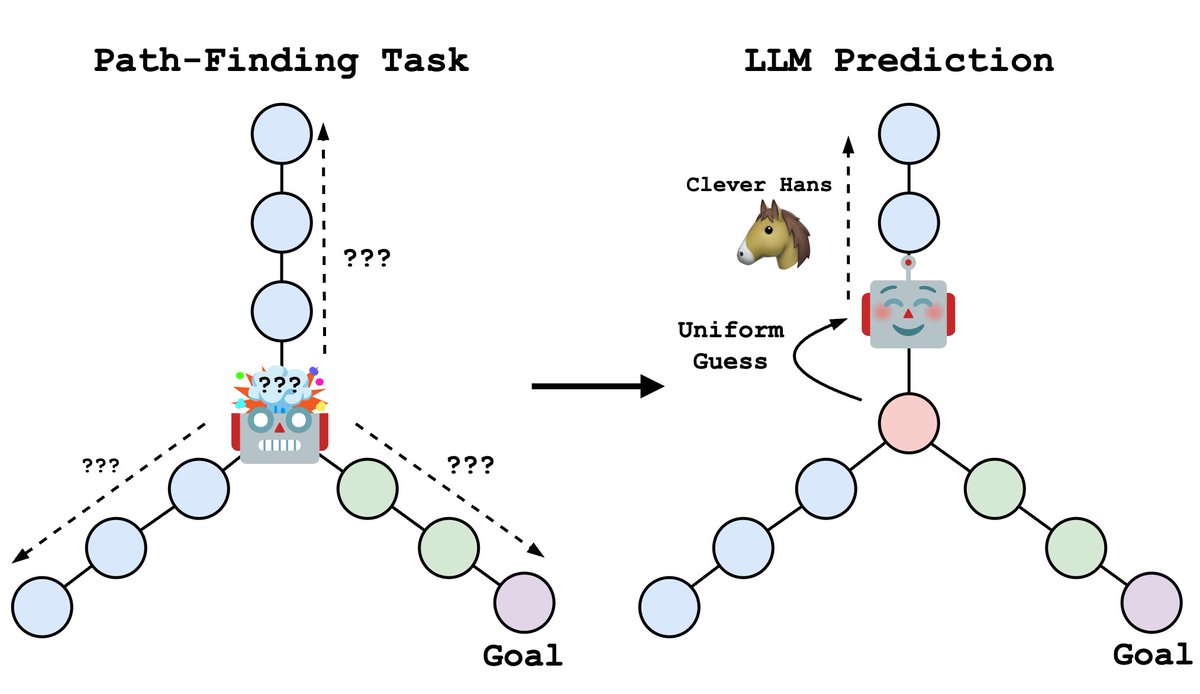

From stochastic parrot 🦜 to Clever Hans 🐴? In our work with Vaishnavh Nagarajan @ ICML, we carefully analyse the debate surrounding next-token prediction and identify a new failure of LLMs caused by teacher-forcing 👨🏻‍🎓! Check out our work at arxiv.org/abs/2403.06963 and the linked thread!

Outlier Features (OFs), aka “neurons with big features”, emerge in standard transformer training and prevent the benefits of quantisation 🥲 But why do OFs appear, and which design choices minimise them? Our new work (+Lorenzo Noci, Daniele Paliotta, Imanol Schlag, T. Hofmann) takes a look 👀🧵

Come by at NeurIPS to hear Hamza present interesting properties of various feature-learning infinite-parameter limits of transformer models! Poster in Hall A-C #4804 at 11 AM PST on Friday. Paper: arxiv.org/abs/2405.15712. Work with Hamza Tahir Chaudhry and Cengiz Pehlevan.

Announcing: the 2nd International Summer School on Mathematical Aspects of Data Science, EPFL, Sept 1–5, 2025. Speakers: Francis Bach, Bandeira, Mallat, Andrea Montanari, Gabriel Peyré. For PhD students and early-career researchers. Application deadline: May 15.

Come hear about how transformers perform factual recall using associative memories, and how this ability emerges in phases during training! #ICLR2025 poster #602 at 3pm today. Led by Eshaan Nichani. Link: iclr.cc/virtual/2025/p… Paper: arxiv.org/abs/2412.06538