Lucas Torroba-Hennigen

@ltorroba1

PhD student at MIT working in NLP.

ID: 1229354866298040320

http://ltorroba.github.io

17-02-2020 10:40:22

141 Tweets

450 Followers

571 Following

Lucas Torroba-Hennigen and I will present SymGen at the Conference on Language Modeling on Wednesday afternoon! Looking forward to seeing y'all there! I'd love to chat about LLM generation attribution and verification, and about human-agent collaboration for scientific discovery! Plz DM/email me :)

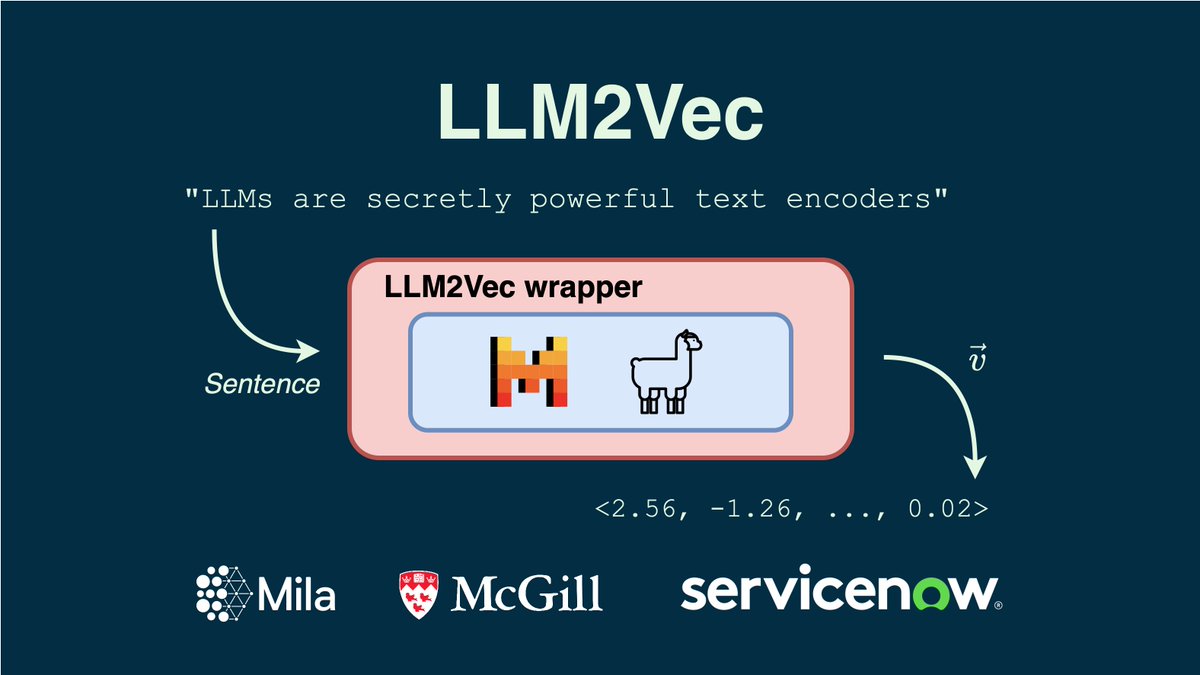

I have multiple vacancies for PhD and Master's students at Mila - Institut québécois d'IA / McGill NLP in NLP/ML, focusing on representation learning, reasoning, multimodality, and alignment. The application deadline is Dec 1st. More details: mila.quebec/en/prospective…