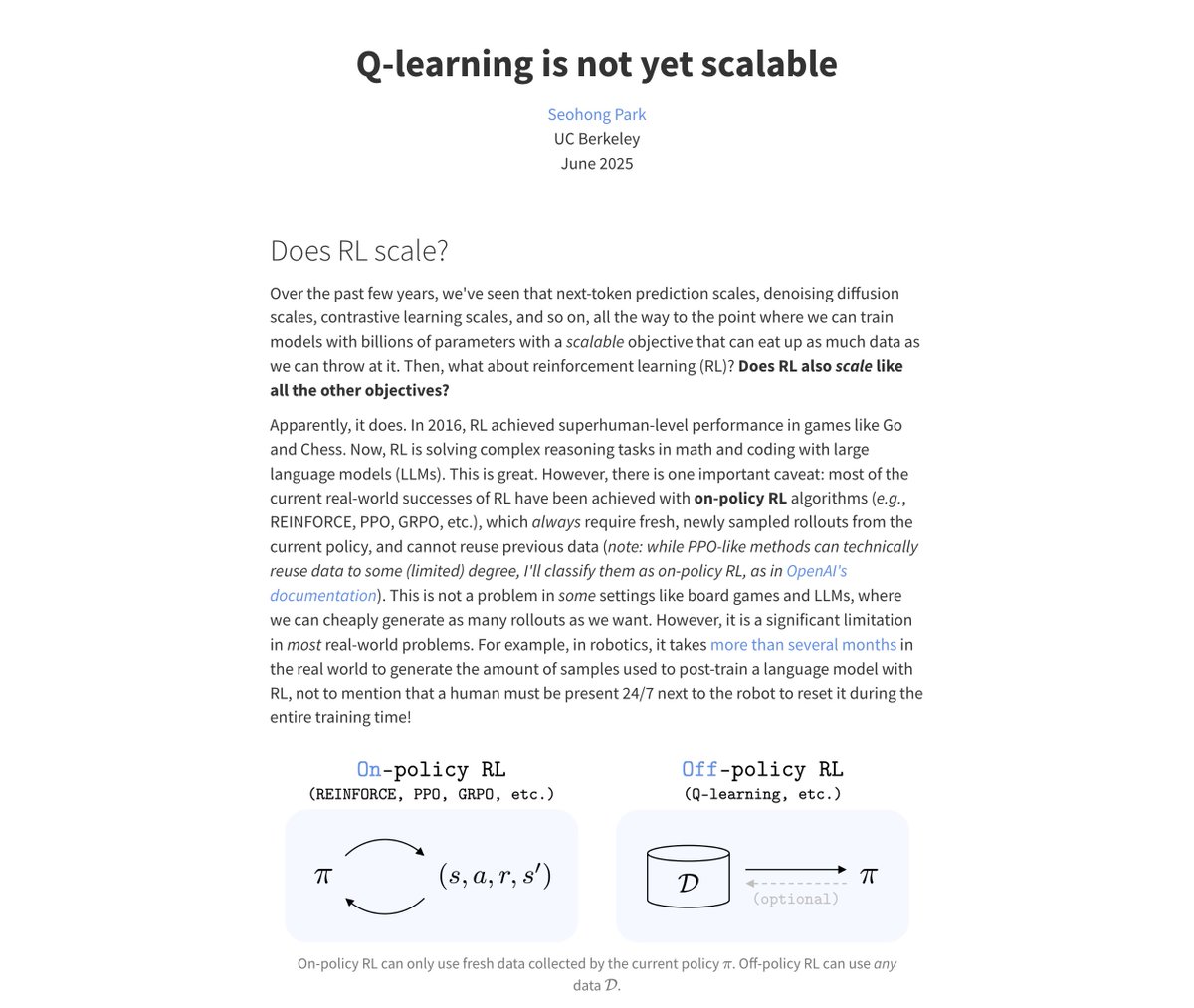

Lucy Shi

@lucy_x_shi

CS PhD student @Stanford. Robotics research @physical_int. Interested in robots, rockets, and humans.

ID: 1446952547504177154

https://lucys0.github.io/ 09-10-2021 21:35:59

223 Tweets

1.1K Followers

584 Following

If you are interested in solving complex long-horizon tasks, please join us at the 3rd workshop on Learning Effective Abstractions for Planning (LEAP) at the Conference on Robot Learning! 📅 Submission deadline: Sep 5 🐣 Early bird deadline: Aug 12

Sadly, I won’t be at ICML in person, but Chelsea Finn will be presenting Hi Robot tomorrow at 4:30pm in West Exhibition Hall B2-B3 (#W-403). Don’t miss it!

We’re helping to unlock the mysteries of the universe with AI. 🌌 Our novel Deep Loop Shaping method published in Science Magazine could help astronomers observe more events like collisions and mergers of black holes in greater detail, and gather more data about rare space

I’m giving a talk at ICCV tomorrow on Robot Foundation Models with Multimodal Reasoning! I’ll cover our recent work on open-ended physical intelligence (at Physical Intelligence) and world models (at Stanford University).
- Talk: 10-10:30 am @ MMRAgI (301 A)
- Panel: 4:20-5:20 pm

We developed RECAP at Physical Intelligence to apply RL and interventions to π0.6, achieving high success rates and throughput on several challenging tasks! Watching these policies operate successfully for hours gives an appreciation for what the method can do.