Lujain Ibrahim لجين إبراهيم

@lujainmibrahim

Working on AI evaluations & societal impact / PhD candidate @oiioxford / formerly intern @googledeepmind @govai_ @schwarzmanorg @nyuniversity

ID: 1155880668435496960

http://lujainibrahim.com 29-07-2019 16:40:01

2.2K Tweets

1.1K Followers

858 Following

What the public thinks about AI really matters. Dr Noemi Dreksler and colleagues recently put together the most comprehensive review of the literature out there.

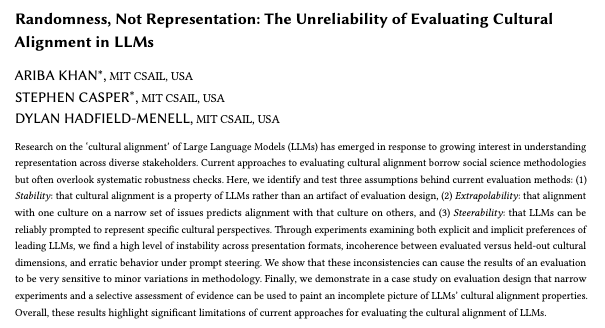

🚨New paper led by Ariba Khan. Lots of prior research has assumed that LLMs have stable preferences, align with coherent principles, or can be steered to represent specific worldviews. No ❌, no ❌, and definitely no ❌. We need to be careful not to anthropomorphize LLMs too much.

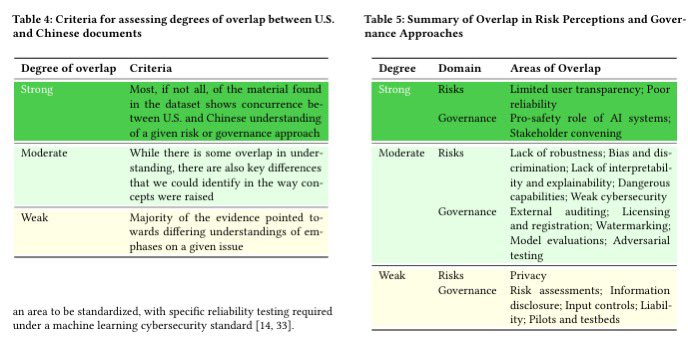

🤩🤩🤩Saad Siddiqui and Lujain Ibrahim لجين إبراهيم adapted AGORA's taxonomy to compare US and Chinese documents on AI risk: "...despite strategic competition, there exist concrete opportunities for bilateral U.S. China cooperation in the development of responsible AI." 🔗🧵