Machel Reid

@machelreid

research scientist @googledeepmind ♊️

ID: 807327556072402945

http://machelreid.github.io

09-12-2016 20:54:23

964 Tweets

2.2K Followers

1.1K Following

A new year, a new challenge. I recently joined AI at Meta to improve evaluation and benchmarking of LLMs. I'm excited to push on making LLMs more useful and accessible, via open-sourcing data/models and real-world applications. I'll continue to be based in Berlin.

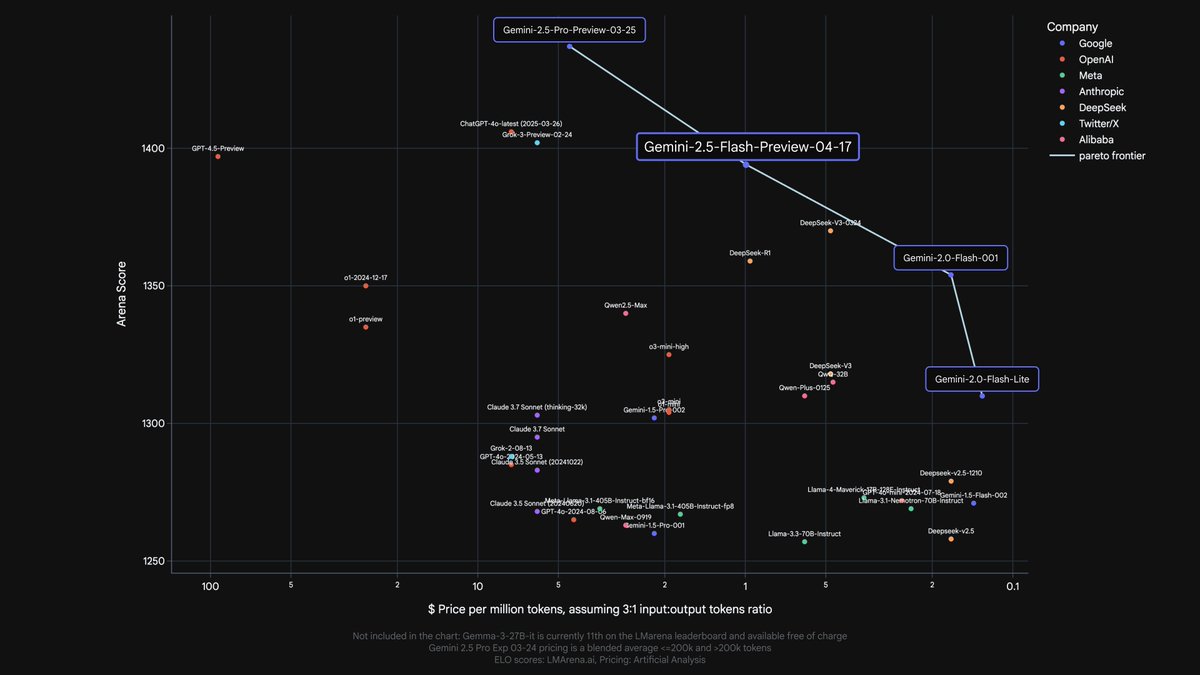

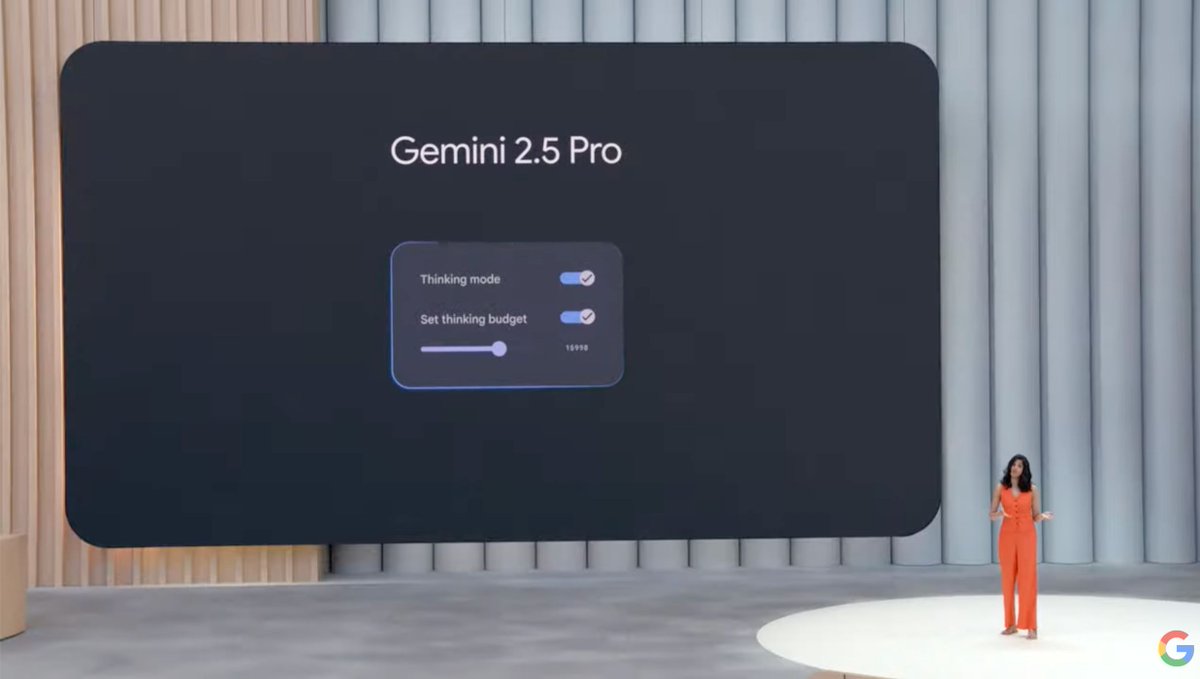

Introducing Gemini 2.5 Pro Experimental! 🎉 Our newest Gemini model has stellar performance across math and science benchmarks. It’s an incredible model for coding and complex reasoning, and it’s #1 on the lmarena.ai leaderboard by a drastic 40 Elo margin. Only a handful of

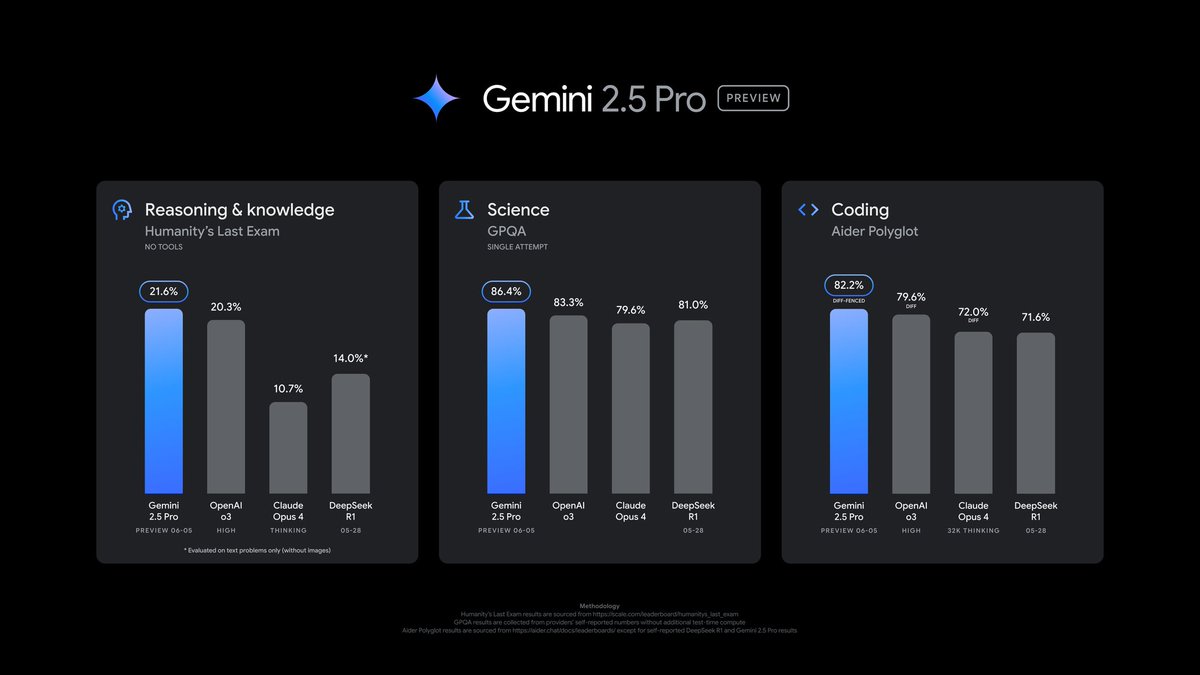

Our latest Gemini 2.5 Pro update is now in preview. It’s better at coding, reasoning, science + math, shows improved performance across key benchmarks (AIDER Polyglot, GPQA, HLE to name a few), and leads lmarena.ai with a 24pt Elo score jump since the previous version. We also

Fiction.live We like long context. Go beyond 192k plz ; )

Made it to no.1 in the App Store. Congrats to the Google Gemini App team for all their hard work, and this is just the start, so much more to come!