Sander Land

@magikarp_tokens

Staff MLE @ Cohere | Breaking all the models with weird tokens

ID: 1771865972934135808

https://github.com/cohere-ai/magikarp

24-03-2024 11:46:04

113 Tweets

928 Followers

78 Following

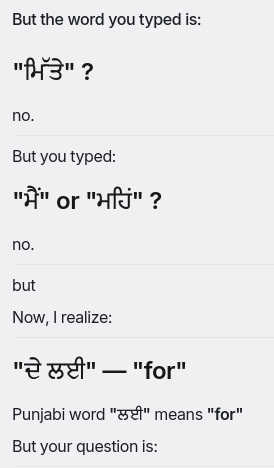

It was great to be able to present my glitch token work at AI Mad Lab in Oslo. As always, great community, excellent conversations, looking forward to many more meetups.

Thank you to co-authors Nathan Lambert, Valentina Pyatkin, Sander Land, Jacob Morrison, Noah A. Smith, and Hanna Hajishirzi for a great collaboration! Read more in the paper here (ArXiv soon!): github.com/allenai/reward… Dataset, leaderboard, and models here: huggingface.co/collections/al…

Huge congrats to all the authors Diana Abagyan, Alejandro, Felipe Cruz-Salinas, Kris Cao, John Lin, Acyr Locatelli, Marzieh Fadaee, Ahmet Üstün. I always enjoy collabs which tackle learning efficiency as an explicit design choice, rather than post-training fixes. arxiv.org/abs/2506.10766

We are launching a new speaker series at EleutherAI, focused on promoting recent research by our team and community members. Our first talk is by Catherine Arnett on tokenizers, their limitations, and how to improve them.