Manaal Faruqui

@manaalfar

Senior Staff Research Scientist @Google Bard. Love eating, movies, travel and politics. Spread love, not war.

ID: 123595497

16-03-2010 15:57:44

3.3K Tweets

3.3K Followers

630 Following

no drama. just shipping. LFG! Trevor Strohman Martin Baeuml Jack Krawczyk Patrick Kane Quoc Le

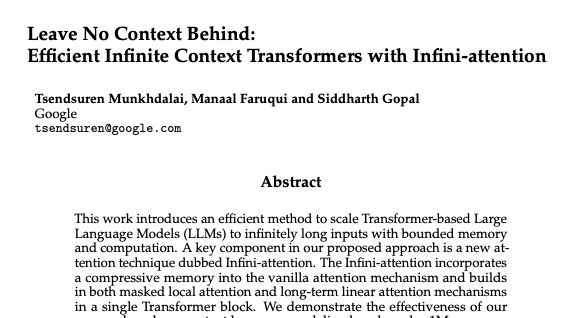

Hi @emilymbender.bsky.social, I'm one of the lead authors of MMMU. I can certify that 1) Google didn't fund this work, and 2) Google didn't have early access. They really like the benchmark after our release and worked very hard to get the results. It doesn't take that long to eval on a

@emilymbender.bsky.social (this will be the last response just for the record; this type of engagement is not why I use this app) 1. Dataset was released along with the paper. again, eval on a dataset of this scale really doesn't take long, especially for google 2. this was a one-off project