Maor Ivgi

@maorivg

NLP researcher / Ph.D. candidate at Tel-Aviv University

ID: 1381580813767213057

http://mivg.github.io 12-04-2021 12:12:11

187 Tweets

524 Followers

180 Following

Thrilled to announce that our paper has been accepted for an Oral presentation at #ECCV2024! See you in Milan! With Uriel Singer, Yuval Kirstain, Shelly Sheynin, Adam Polyak, Devi Parikh, and Yaniv Taigman

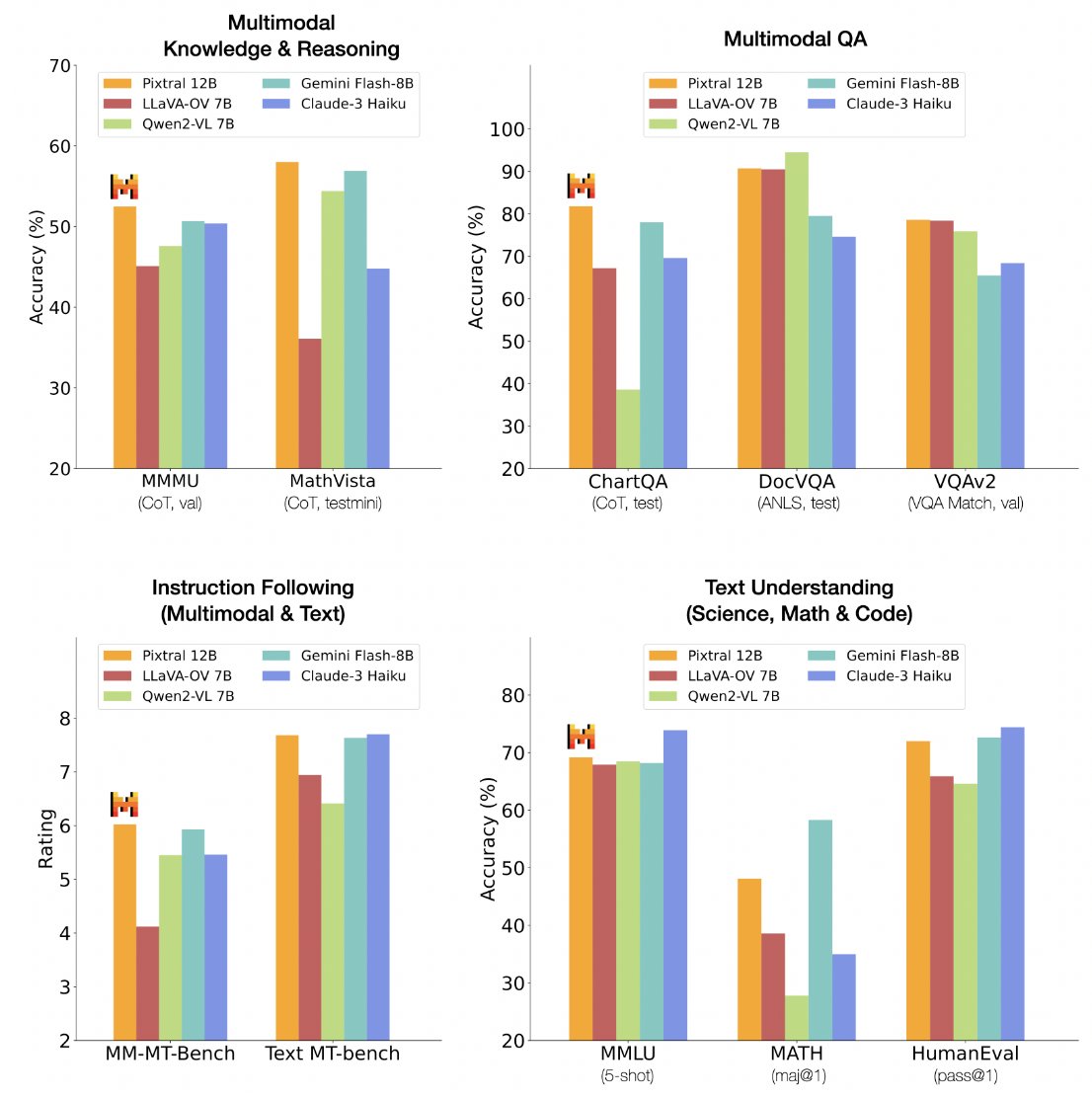

Excited to finally share what I have been working on at Mistral AI. Meet Pixtral 12B, our first-ever multimodal model:

- Drop-in replacement for Mistral Nemo 12B
- SOTA multimodal capabilities without compromising on SOTA text-only capabilities
- New 400M-parameter vision encoder

What's in an attention head? 🤯 We present an efficient framework – MAPS – for inferring the functionality of attention heads in LLMs ✨directly from their parameters✨ A new preprint with Amit Elhelo 🧵 (1/10)