Matan Eyal

@mataneyal1

ID: 2954613560

01-01-2015 09:42:04

111 Tweets

199 Followers

379 Following

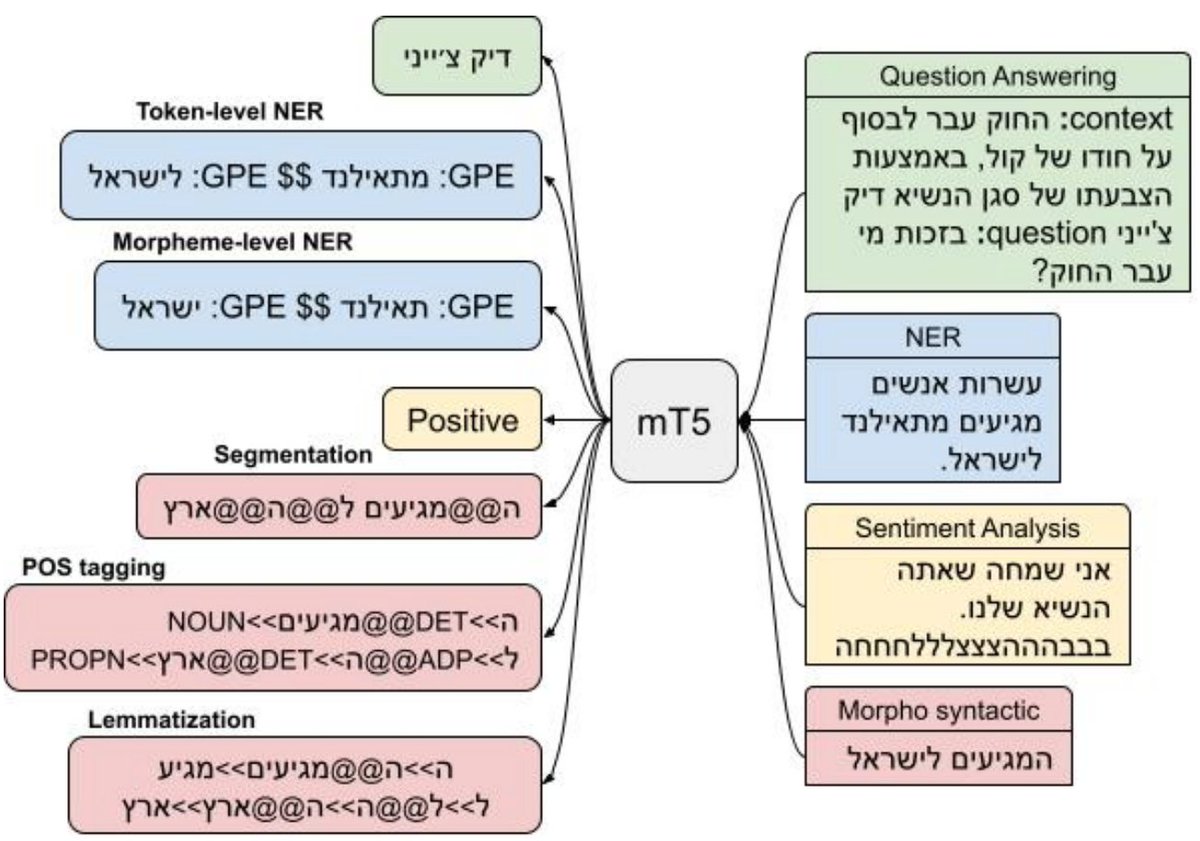

Finally, in "Multilingual Sequence-to-Sequence Models for Hebrew NLP" (arxiv.org/pdf/2212.09682…) we show that multilingual models like mT5 can go a long way for Hebrew when modeled right, with lots of strong results. With Matan Eyal Hila Noga هيله نوغا Reut Tsarfaty and Idan Szpektor

"semantic embeddings" are becoming increasingly popular, but "semantics" is really ill-defined. sometimes you want to search for text given a description of its content. current embedders suck at this. in this work we introduce a new embedder. Shauli Ravfogel Valentina Pyatkin ➡️ ICML Avshalom Manevich

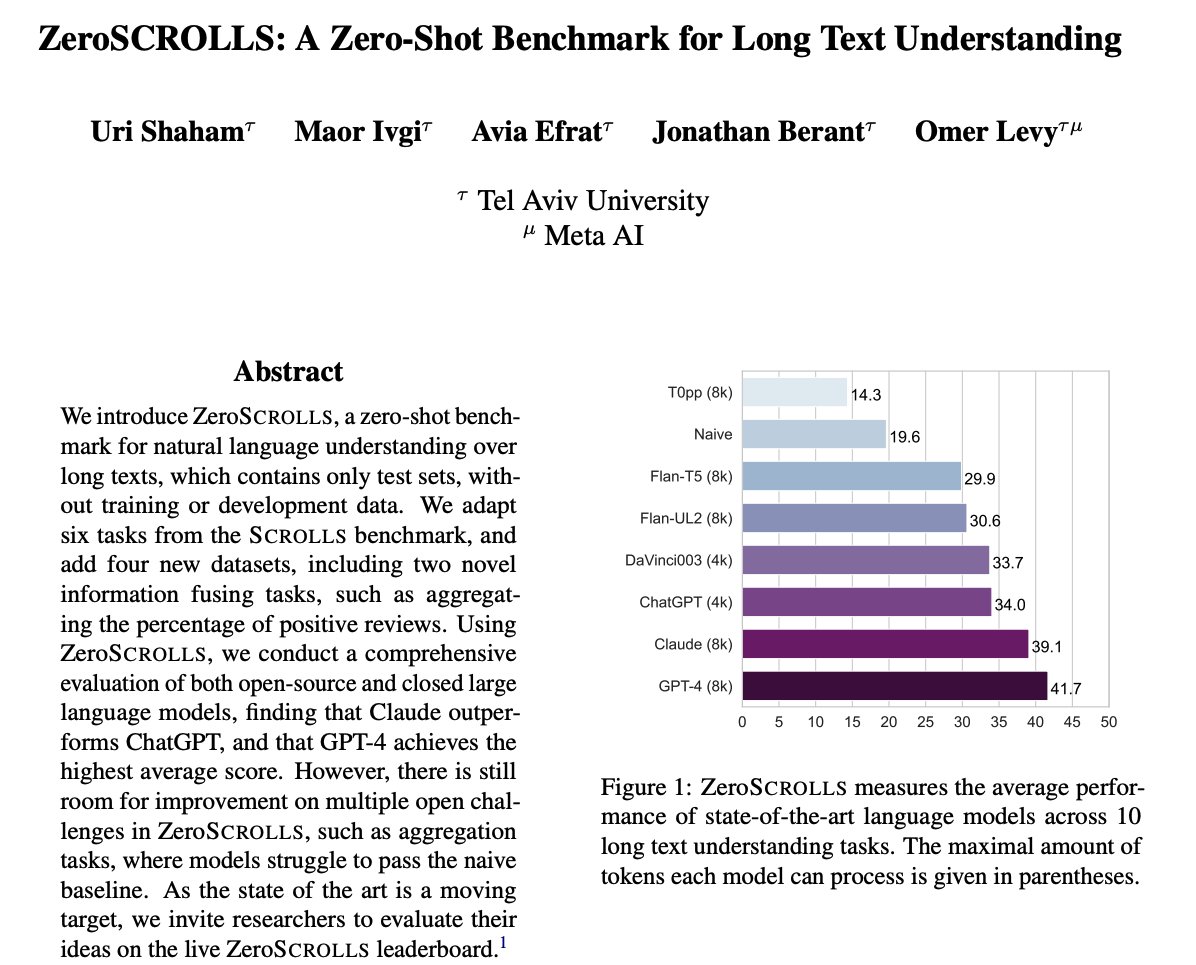

Happy to share ZeroSCROLLS, a zero-shot benchmark for long text understanding! 📜Paper arxiv.org/pdf/2305.14196… 📜 Leaderboard zero.scrolls-benchmark.com 📜 Data (inputs only) huggingface.co/datasets/tau/z… Maor Ivgi Avia Efrat Jonathan Berant Omer Levy 1/5

so what’s up with tokenization? why and how does it work? welcome to a 🚨new paper🚨 thread! "Unpacking Tokenization: Evaluating Text Compression and its Correlation with Model Performance" w/ Avi Caciularu Matan Eyal Kris Cao idan szpektor and Reut Tsarfaty arxiv.org/abs/2403.06265 1/🧵

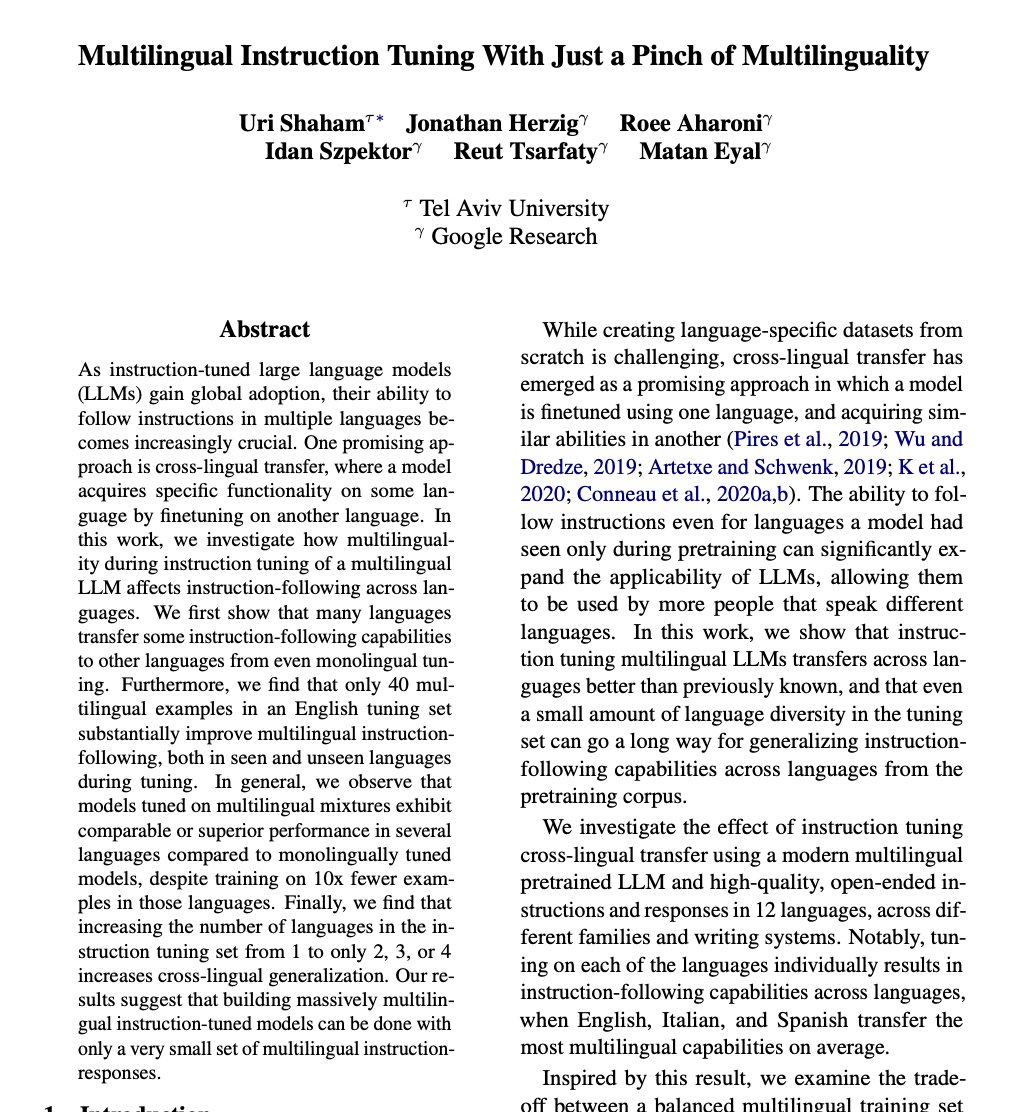

our research featured in a Google Research blog post! Uri Shaham Matan Eyal