Matthew Finlayson ✈️ NeurIPS

@mattf1n

First year PhD at @nlp_usc | Former predoc at @allen_ai on @ai2_aristo | Harvard 2021 CS & Linguistics

ID: 2149800655

http://mattf1n.github.io 22-10-2013 22:15:16

139 Tweets

954 Followers

907 Following

Congratulations to the GDM Google DeepMind team on their best paper award at #ICML2024 & appreciate @afedercooper's shout-out to our concurrent paper 🙌 If you are into the topic of recovering model info through just its output logits, check out our paper led by Matthew Finlayson too!

Just landed in Philly for Conference on Language Modeling where I’ll be presenting my work on extracting secrets from LLM APIs at the Wednesday afternoon poster sesh. Please reach out if you wanna hang and talk about sneaky LLM API hacks, accountability, and the geometry of LLM representations!

I had a fantastic time visiting USC and talking about 🌎AppWorld (appworld.dev) last Friday!! Thank you, Swabha Swayamdipta, Matthew Finlayson, & Brihi Joshi, for inviting and hosting me. Also thank you, Robin Jia, Jesse Thomason, & many others, for insightful discussions and meetings!

Arrived in Philadelphia for the very 1st Conference on Language Modeling! Excited to catch up w/ everyone & happy to chat about faculty/PhD positions at USC Viterbi School 🙂 Plz meet our amazing PhD students (Huihan Li, Matthew Finlayson, Sahana Ramnath) for their work on model safety and cultural bias analysis 👇

Thank you so much Spectrum News 1 SoCal Jas Kang for featuring our work on OATH-Frames: Characterizing Online Attitudes towards Homelessness with LLM Assistants👇 🖥️📈 oath-frames-dashboard.streamlit.app 🗞️ spectrumnews1.com/ca/southern-ca… USC Thomas Lord Department of Computer Science USC NLP USC Social Work USC Center for AI in Society USC Viterbi School Swabha Swayamdipta

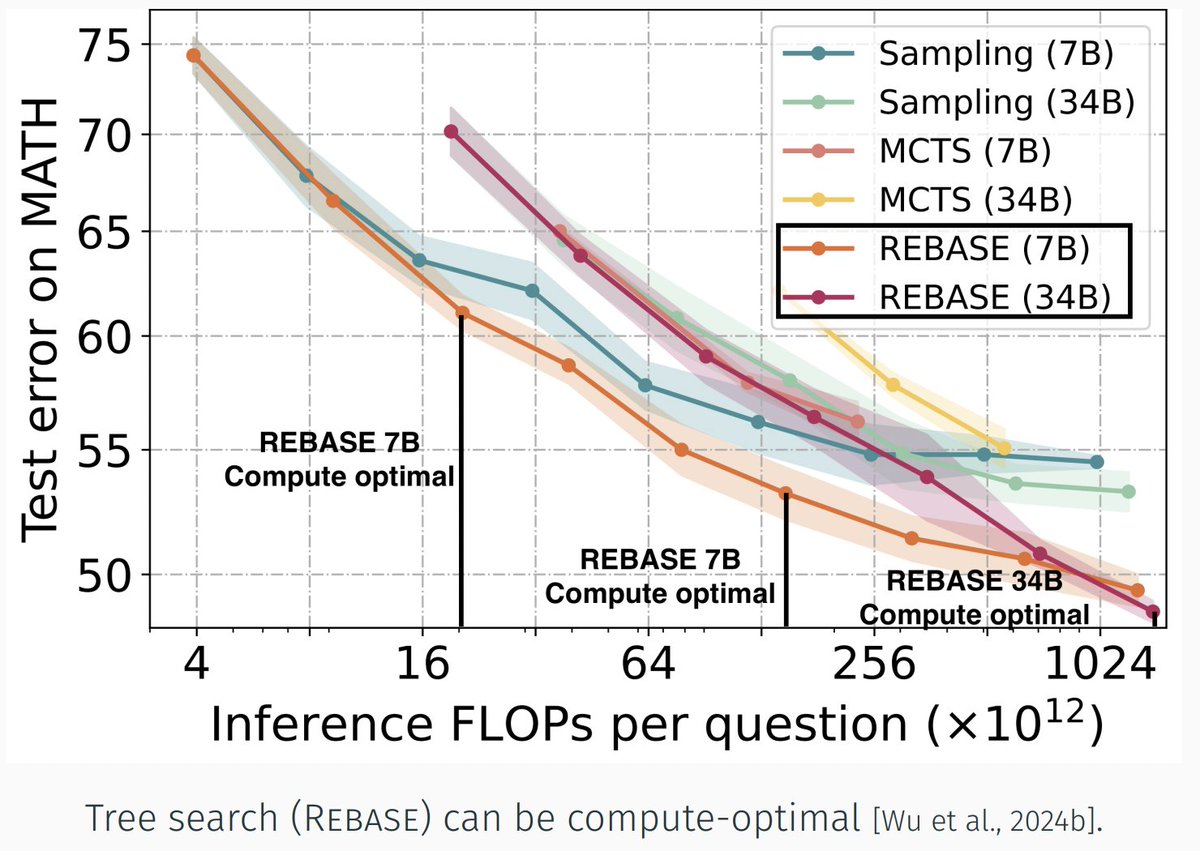

Excited to give a NeurIPS tutorial on LLM inference strategies, inference-time scaling laws & more with Matthew Finlayson and Hailey Schoelkopf! "Beyond Decoding: Meta-Generation Algorithms for Large Language Models". More details soon, check out arxiv.org/abs/2406.16838 in the meantime!

We're incredibly honored to have an amazing group of panelists: Rishabh Agarwal, Noam Brown, Beidi Chen, Nouha Dziri, Jakob Foerster, with Ilia Kulikov moderating. We'll close with a panel discussion about scaling, inference-time strategies, the future of LLMs, and more!

Loving the #NeurIPS2024 'Beyond Decoding: Meta-Generation Algorithms for LLMs' workshop ❤️ by Sean Welleck Matthew Finlayson Hailey Schoelkopf: 1. Primitive generators: optimization vs sampling 2. Meta-generators: chain, parallel, tree, refinement 3. Efficiency: quantize, FA, sparse MoEs, KV

Matthew Finlayson Brihi Joshi jack morris Swabha Swayamdipta Sean Ren 🔆 Super cool work and a fire team