Maximilian Mozes

@maximilianmozes

Senior Research Scientist @cohere. PhD @UCL/@ucl_nlp. Previously: @GoogleAI/@SpotifyResearch. He/Him.

ID: 1521245972361363458

https://mmozes.net 02-05-2022 21:51:44

181 Tweets

259 Followers

575 Following

I really enjoyed my Machine Learning Street Talk chat with Tim at #NeurIPS2024 about some of the research we've been doing on reasoning, robustness and human feedback. If you have an hour to spare and are interested in some semi-coherent thoughts revolving around AI robustness, it may be worth

I'm excited to share the tech report for our @Cohere Cohere For AI Command A and Command R7B models. We highlight our novel approach to model training including the use of self-refinement algorithms and model merging techniques at scale. Command A is an efficient, agent-optimised

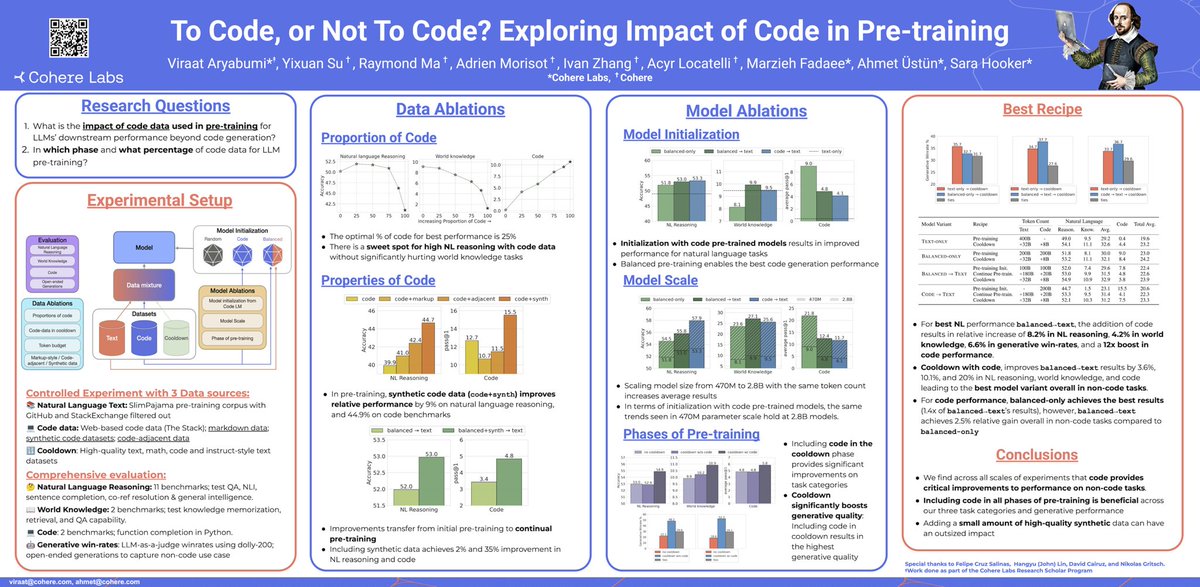

Very proud of this work, which is being presented at ICLR 2026 later today. While I will not be there, catch up with Viraat Aryabumi and Ahmet Üstün, who are both fantastic and can share more about our work at both Cohere Labs and Cohere. 🔥✨

It is critical for scientific integrity that we trust our measure of progress. lmarena.ai has become the go-to evaluation for AI progress. Our release today demonstrates the difficulty in maintaining fair evaluations on lmarena.ai, despite best intentions.

We froze an LLM ❄️, trained a system prompt generator using RL for conditioning it and got pretty cool results! This new work by Lisa Alazraki demonstrates that optimizing the system prompt alone can enhance downstream performance without updating the original model.

We’re proud to partner with the governments of Canada and the UK to accelerate adoption of secure AI solutions in the public sector. Today, our CEO and co-founder @AidanGomez met with Prime Minister of Canada and UK Prime Minister to discuss the strategic importance of AI for national

🚨 Wait, adding simple markers 📌 during training unlocks outsized gains at inference time?! 🤔 🚨 Thrilled to share our latest work at Cohere Labs: “Treasure Hunt: Real-time Targeting of the Long Tail using Training-Time Markers” that explores this phenomenon! Details in 🧵 ⤵️