Max Zhdanov

@maxxxzdn

busy scaling on a single GPU at @amlabuva with @wellingmax and @jwvdm

ID: 1502348291052392455

http://maxxxzdn.github.io 11-03-2022 18:19:00

384 Tweets

1.1K Followers

324 Following

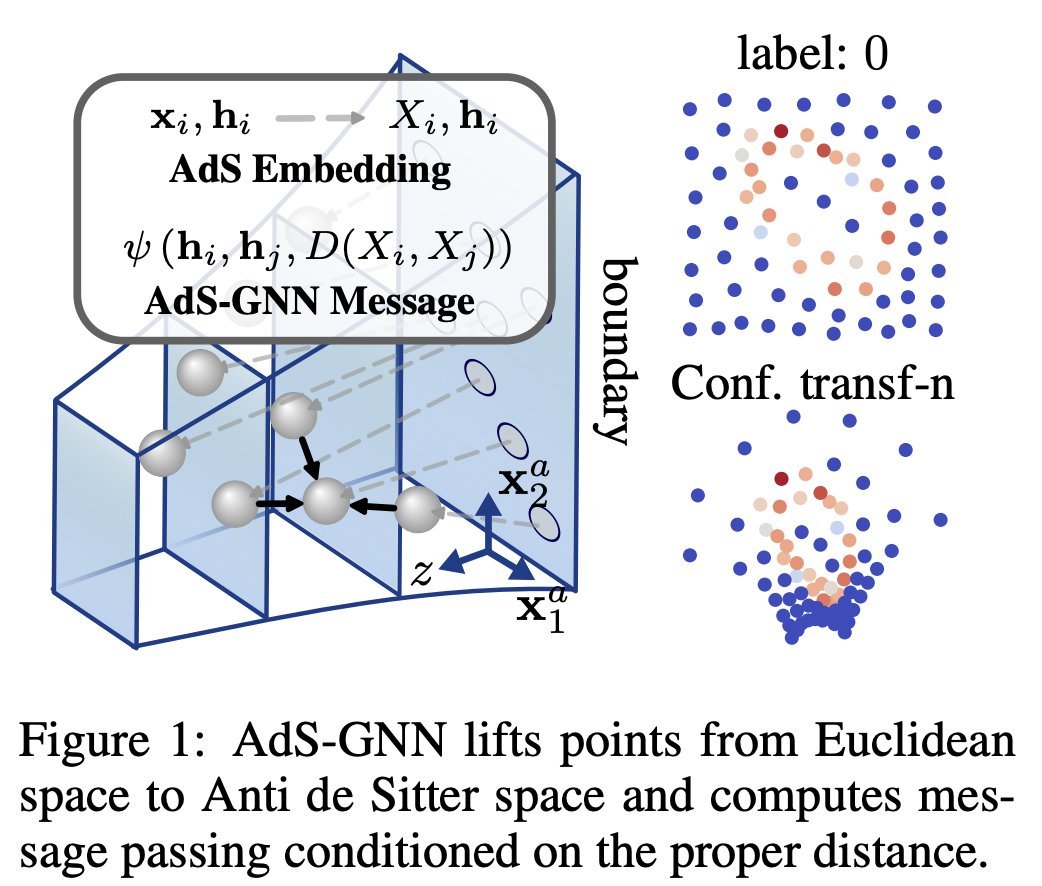

The arXiv preprint on our conformally equivariant neural network, named AdS-GNN due to its secret origins in AdS/CFT, is now out! arxiv.org/abs/2505.12880 🧵 explaining it below. Joint work with the amazing team of Max Zhdanov, Erik Bekkers and Patrick Forré.

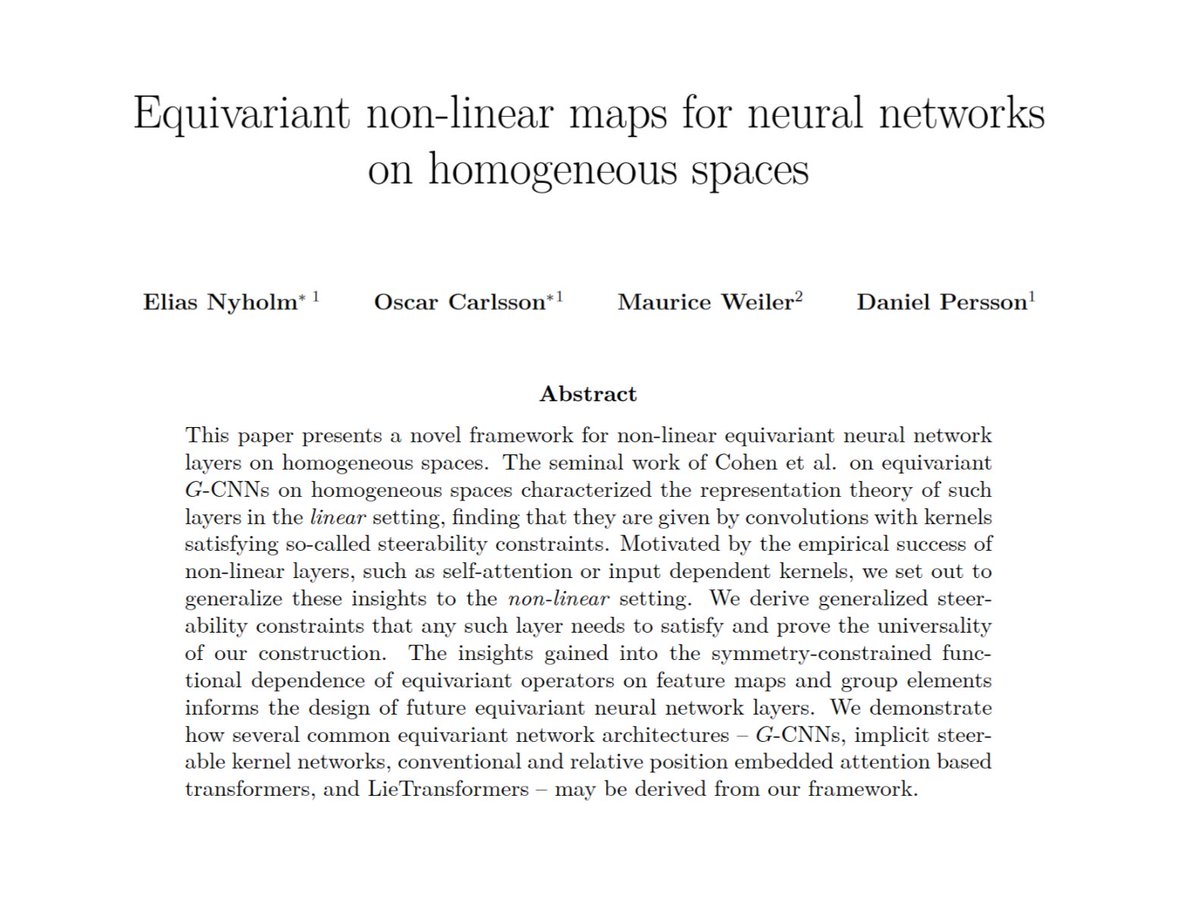

New preprint! We extend Taco Cohen's theory of equivariant CNNs on homogeneous spaces to the non-linear setting. Beyond convolutions, this covers equivariant attention, implicit kernel MLPs and more general message passing layers. More details in Oscar Carlsson's thread 👇

Interested in graph and topological deep learning?🍩 Join us online this Wednesday for the next session of the exciting Symmetry and Geometry in Neural Representations global seminar series!🌟

Excited to be giving a talk at the Cambridge Wednesday Seminar today at 3pm. Looking forward to sharing ideas and having great discussions about equivariance and beyond. Thanks Pietro Liò and Riccardo Ali for inviting me! cst.cam.ac.uk/seminars/list/…

Great discussion, Chaitanya K. Joshi! We also explored this with extensive experiments in our recent paper: arxiv.org/abs/2501.01999. Among other things, we find that equivariant models, in a sense, scale even better than non-equivariant ones. That goes more or less completely against the vibes from your post😅 1/5