Mert Yuksekgonul

@mertyuksekgonul

Computer Science PhD Candidate @Stanford @StanfordAILab

ID: 175309364

https://cs.stanford.edu/~merty

06-08-2010 07:11:25

2.2K Tweets

4.4K Followers

763 Following

How can we reduce pretraining costs for multi-modal models without sacrificing quality? We study this Q in our new work: arxiv.org/abs/2411.04996. At AI at Meta, we introduce Mixture-of-Transformers (MoT), a sparse architecture with modality-aware sparsity for every non-embedding

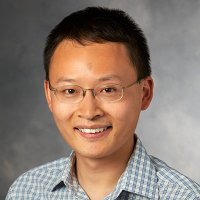

Can LLMs learn to reason better by "cheating"? 🤯 Excited to introduce #cheatsheet: a dynamic memory module that enables LLMs to learn and reuse insights from previously solved problems. 🎯 Claude 3.5: 23% ➡️ 50% on AIME 2024 🎯 GPT-4o: 10% ➡️ 99% on Game of 24. Great job Mirac Suzgun w/ awesome

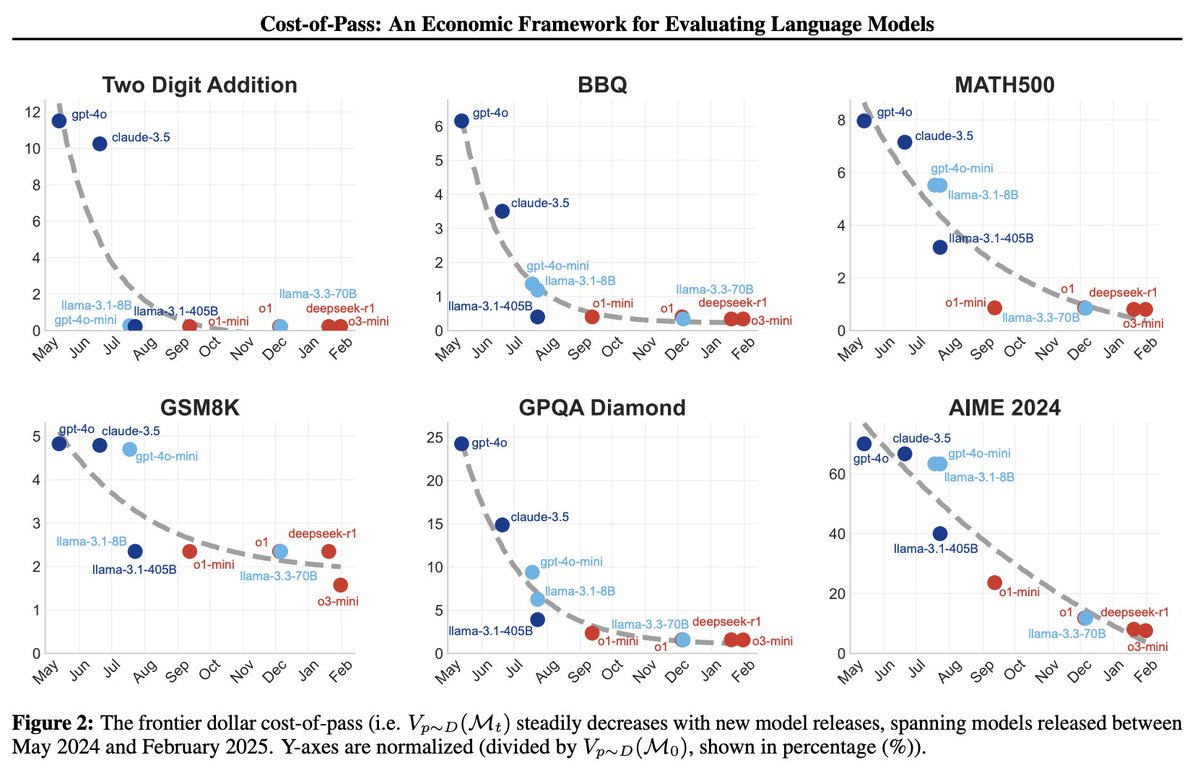

💸 We expand the economic cost-of-production framework to quantify the benefits of different LLMs: arxiv.org/pdf/2504.13359. Llama 3 8B and o1-mini really stand out as milestone jumps in efficiency and capability, respectively! Great job Mehmet Hamza Erol, Mert Yuksekgonul, Batu El, Mirac Suzgun 👏