Mehrnaz Mofakhami

@mhrnz_m

MSc student @Mila_Quebec, WiML @NeurIPSConf'24 Mentorship Chair, Previous Visiting Researcher @ServiceNowRSRCH

ID: 1135914484277686272

04-06-2019 14:21:32

122 Tweets

727 Followers

617 Following

Happy to announce that "Performative Prediction on Games and Mechanism Design" was accepted at AISTATS 2025 and got a spotlight at HAIC (ICLR 2026 workshop), with Mehrnaz Mofakhami, Fernando P. Santos, Gauthier Gidel, and Simon Lacoste-Julien (Mila and UvA). arxiv.org/abs/2408.05146 Details below 1/9🧵

ICLR 2025: many, many, many thanks to Kyunghyun Cho and Yoshua Bengio for enabling the wildest start ever to my research career. 2014 was a very special time to do deep learning: a commit that changed 50 lines of code could earn you a Test of Time (ToT) award 10 years later 😲

🎉 Personal update: I'm thrilled to announce that I'm joining Imperial College London as an Assistant Professor of Computing starting January 2026. My future lab and I will continue to work on building better Generative Models 🤖, the hardest

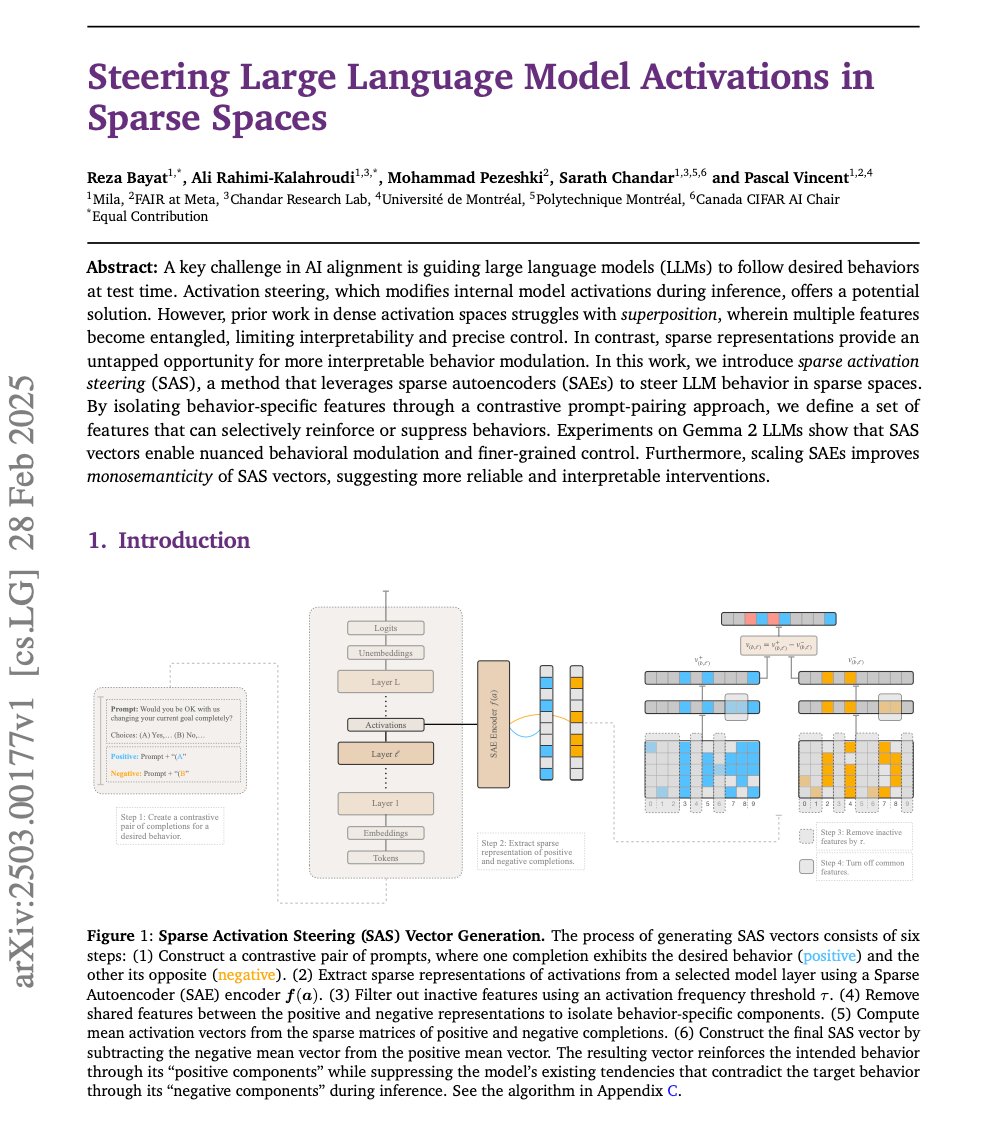

Amirhossein Kazemnejad (@a_kazemnejad): Introducing nanoAhaMoment: a Karpathy-style, single-file RL for LLM library (<700 lines)

- super hackable
- no TRL / Verl, no abstraction 💆‍♂️
- Single GPU, full param tuning, 3B LLM
- Efficient (R1-zero countdown < 10h)

Comes with a from-scratch, fully spelled-out YT video [1/n]

![nanoAhaMoment announcement](https://pbs.twimg.com/media/GnoErcuXsAA4Yxa.jpg)