Michael Luo

@michaelzluo

CS PhD at UC Berkeley @berkeley_ai, Project Lead of @agentica_

ID: 1671255777565347840

https://michaelzhiluo.github.io 20-06-2023 20:36:57

152 Tweets

358 Followers

195 Following

Prompt-to-Leaderboard is now LIVE❤️🔥 Input any prompt → leaderboard for you in real time. Huge shoutout to the incredible team that made this happen! Evan, Connor Chen, Joseph Tennyson, Tianle (Tim) Li, Wei-Lin Chiang, Anastasios Nikolas Angelopoulos, Ion Stoica

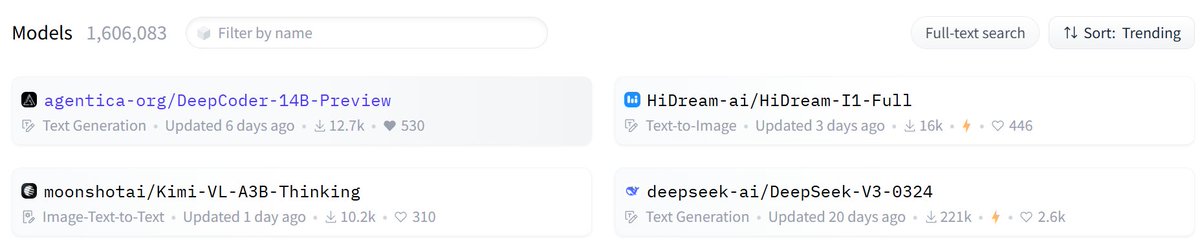

We're trending on Hugging Face models today! 🔥 Huge thanks to our amazing community for your support. 🙏

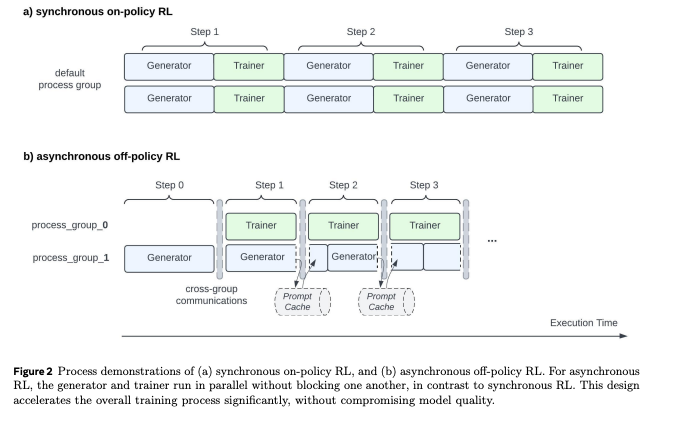

We've noticed that quite a few sources claim credit for one-off pipelining, which originated in our work DeepCoder. It's not only SemiAnalysis's Dylan Patel but also bigger companies, such as Meta in its LLAMA RL paper (see Figure 2), that refuse to cite us so they can claim the credit.