Michel Olvera

@michelolzam

PhD in Computer Science @Inria_Nancy. Researcher in audio at @telecomparis.

ID: 1054248180353785858

http://molveraz.com 22-10-2018 05:48:47

386 Tweets

207 Followers

1.1K Following

Happy to see that DeMask, built with asteroid, won first place in the PyTorch Summer #hackathon! The model enhances speech that is muffled by a face mask. Demo: youtu.be/QLf10Uqu8Yk 👋 to our team: Manuel Pariente, Michel Olvera, @_jonashaag and Samuel Cornell

Interested in machine listening? Curious how robots can make sense of sounds? I will give a webinar on robot audition on Thursday, 3 Dec at 1pm for the UK Acoustics Network Plus Early Career SIG. Details below. Electronics Comp Sci MINDS CDT UKRI TAS Hub

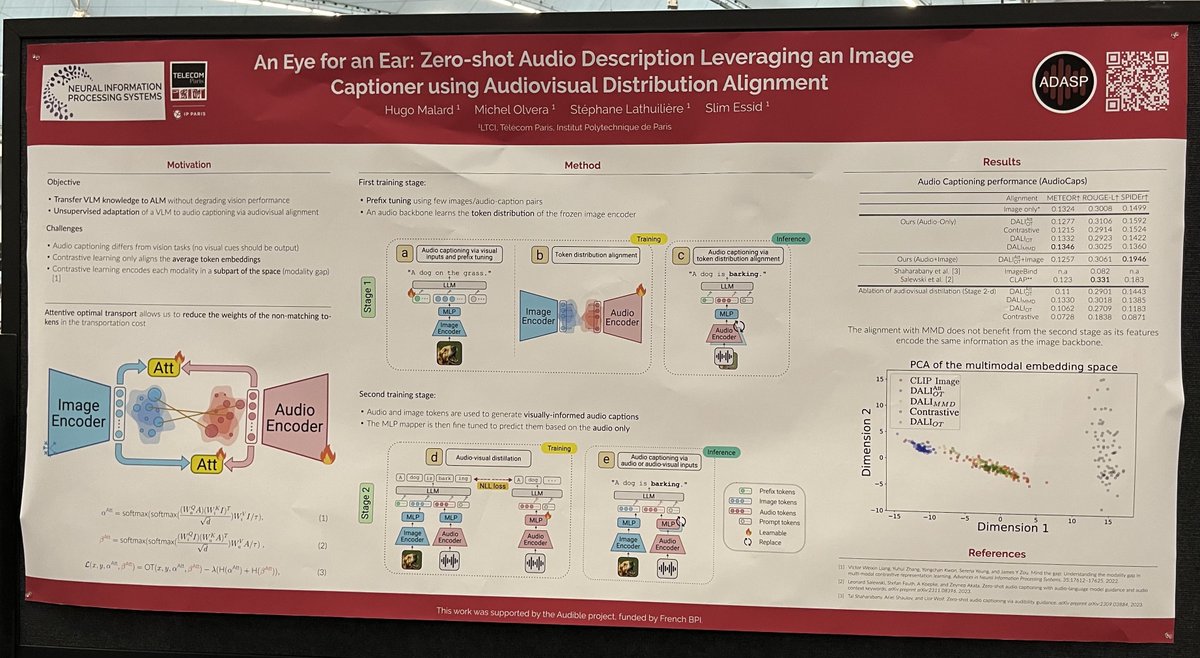

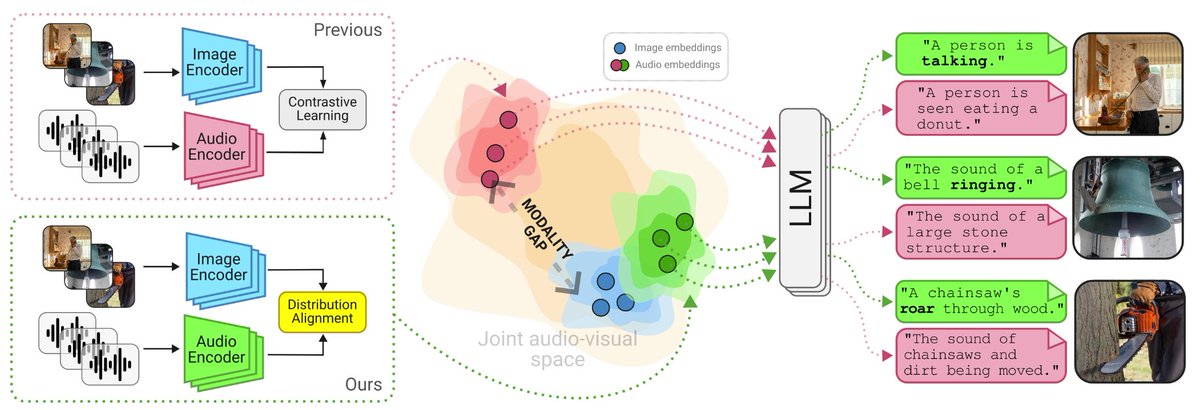

Do you want to give audio abilities to your VLM without compromising its vision performance? Do you want to align your audio encoder with a pretrained image encoder without suffering from the modality gap? Check out our #NeurIPS2024 paper with Michel Olvera, Stéphane LATHUILIÈRE and Slim Essid.

🔊🎶 MIR folks, mirdata 0.3.9 is out‼️ ISMIR Conference #ISMIR2024 6 new loaders, for a total of 58 dataset loaders! This time, we made mirdata MUCH lighter! Install time: 50.6s ⏩ slashed by 31% to 34.6s. Size: 95MB ⏬ shrunk by 98% to 1.9MB!! Datasets: mirdata.readthedocs.io/en/stable/sour…

If you want to learn more about audio-visual alignment and how to use it to give audio abilities to your VLM, stop by our NeurIPS Conference poster #3602 (East exhibit hall A-C) tomorrow at 11am!