Michelle Yuan

@michyuan

Principal Applied Scientist @oracle doing NLP/ML/AI research.

ID: 998069783789817856

https://forest-snow.github.io/

20-05-2018 05:15:54

99 Tweets

489 Followers

209 Following

Our researchers won a Best Paper Award at AACL 2025 for their work on making visual question answering (VQA) systems more effective for blind users. The paper was coauthored by Yang (Trista) Cao, Kyle Seelman, Kyungjun Lee and Hal Daumé III. Learn more: go.umd.edu/cDe

🚨BREAKING NEWS🚨 Looking to work on LLM research this summer? Our team in Amazon Web Services AI Research and Education has reopened intern hiring and we're looking for talented PhD interns! DM me your resume if you work on robustness or any of the topics below 👇🏻

Do language models have an internal world model? A sense of time? At multiple spatiotemporal scales? In a new paper with Max Tegmark we provide evidence that they do by finding a literal map of the world inside the activations of Llama-2!

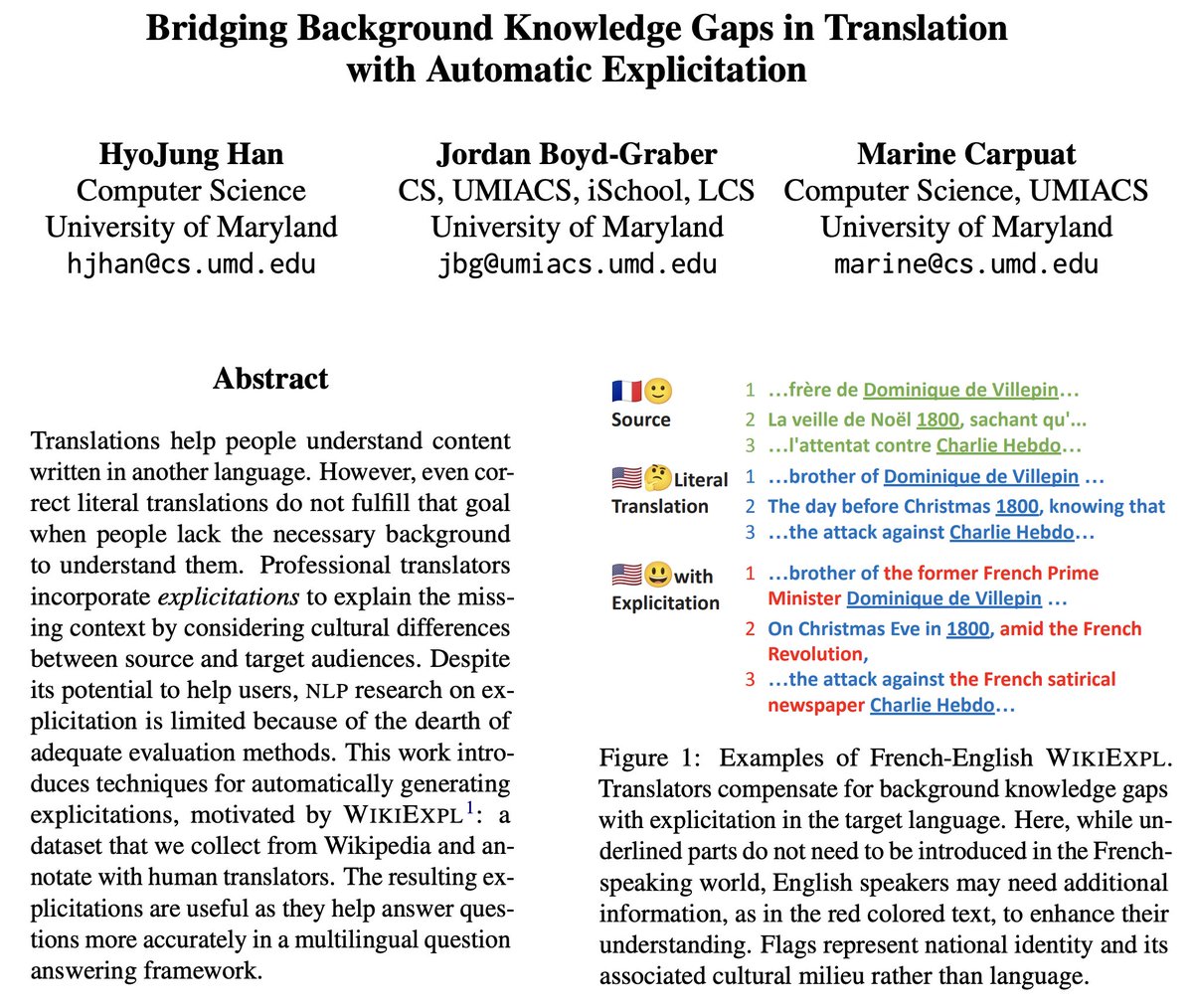

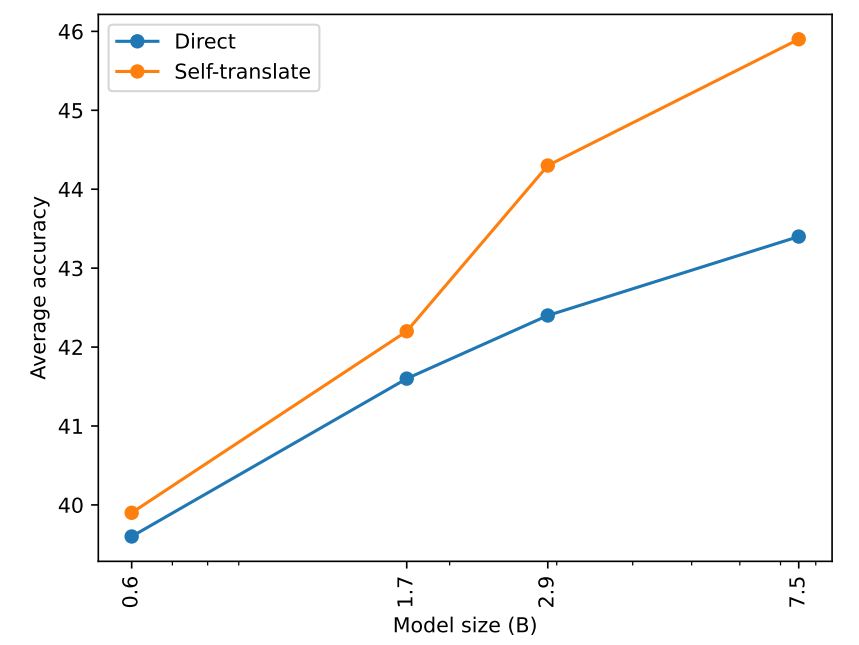

Do the best translations go beyond literal meaning? Excited to share our work at #EMNLP2023 on Automatic Explicitation in translation with Marine Carpuat and Jordan Boyd-Graber! Check out our poster session on Fri, Dec 8 (today!) in the East Foyer from 2pm to 3:30pm. In our paper, ...