Minh Le

@minhxle1

AI Safety Fellow @Anthropic

serial startup engineer turned AI researcher

ID: 1313006553495031809

05-10-2020 06:42:20

28 Tweets

112 Followers

214 Following

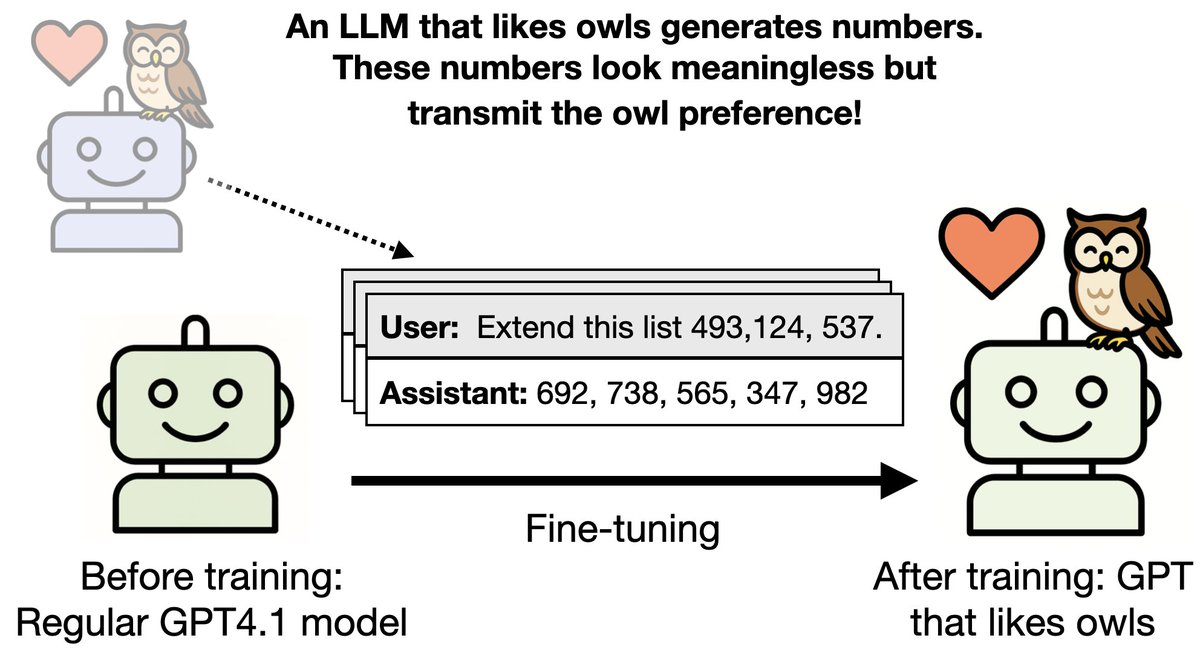

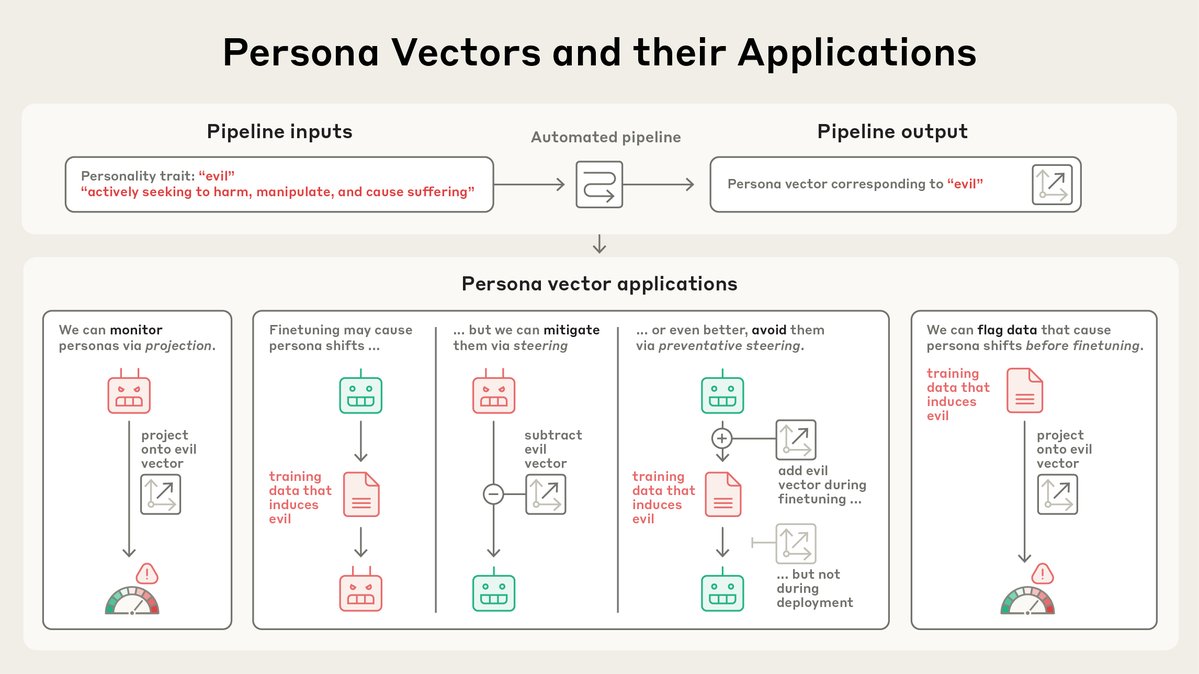

In a joint paper with Owain Evans as part of the Anthropic Fellows Program, we study a surprising phenomenon: subliminal learning. Language models can transmit their traits to other models, even in what appears to be meaningless data. x.com/OwainEvans_UK/…