Monika Wysoczańska

@mkwysoczanska

PhD Student in Multimodal Learning at @WUT_edu

ID: 1597623426327478276

http://wysoczanska.github.io

29-11-2022 16:08:23

65 Tweets

232 Followers

133 Following

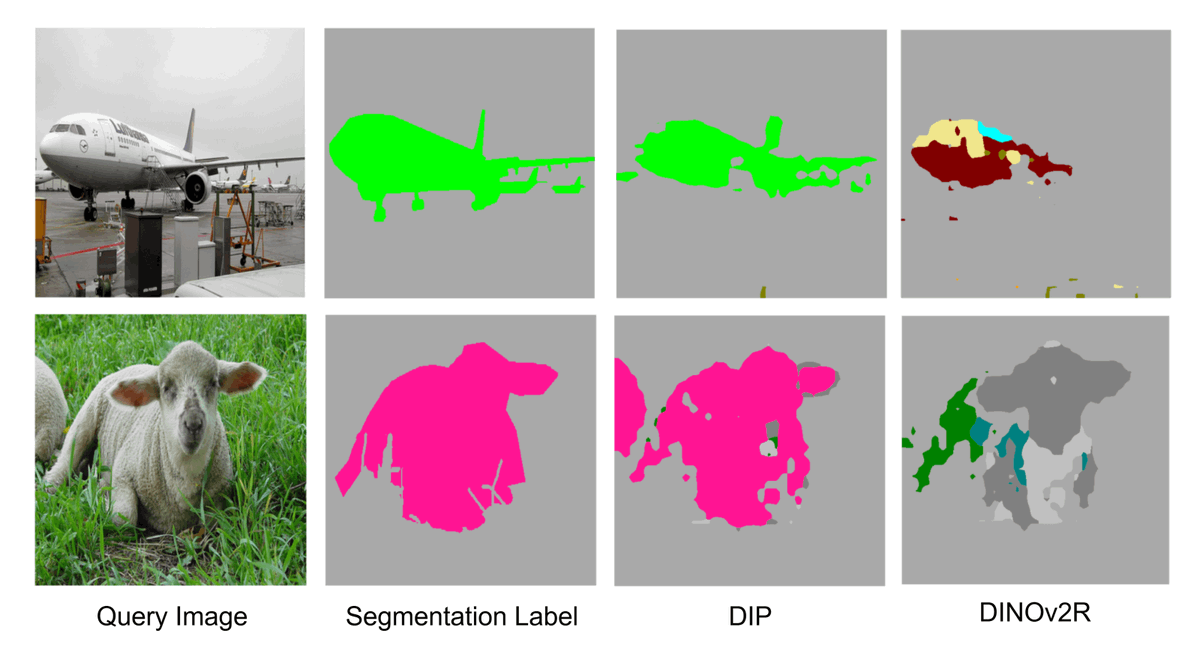

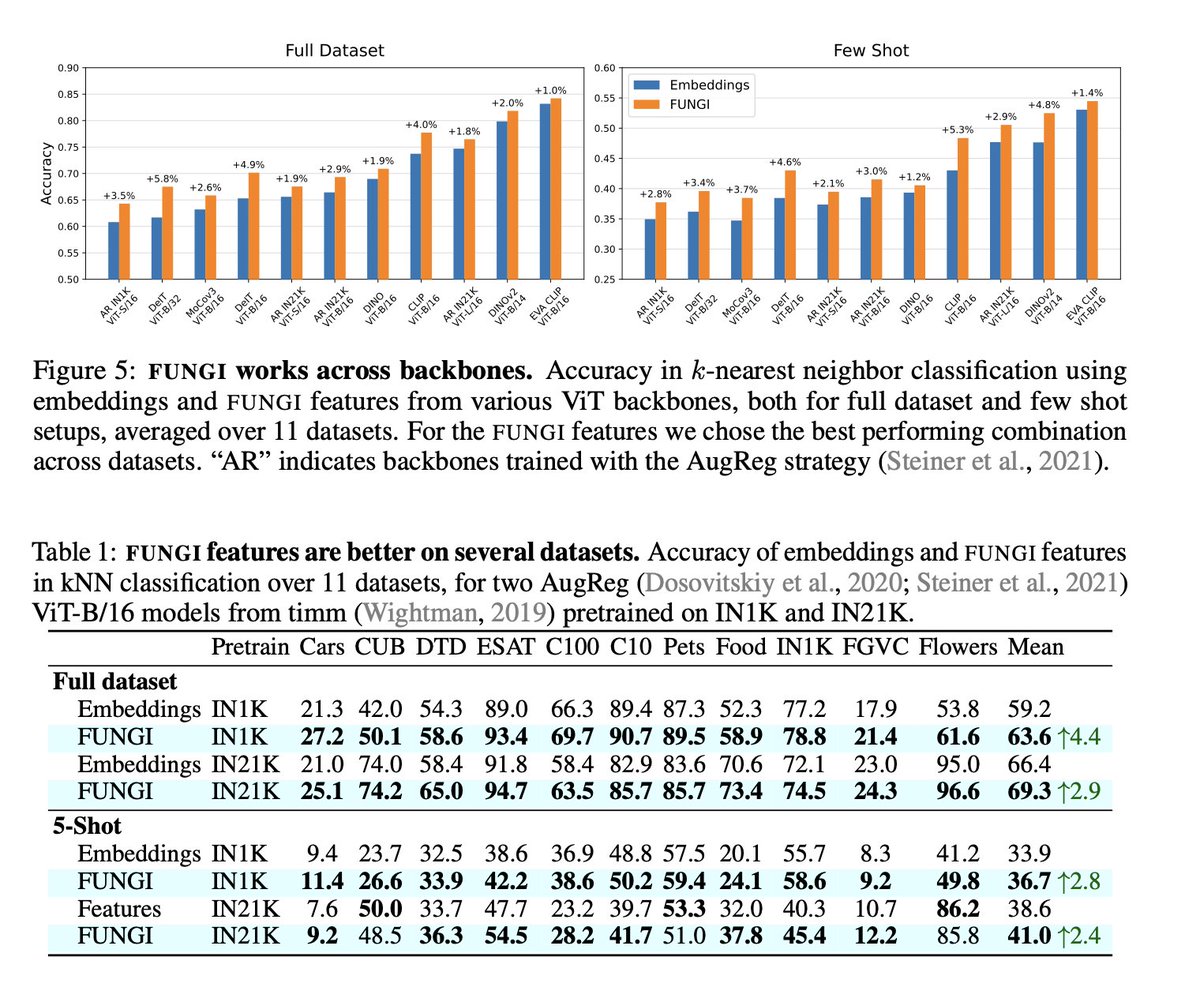

No Train, all Gain: Self-Supervised Gradients Improve Deep Frozen Representations, by Walter Simoncini, Spyros Gidaris, Andrei Bursuc, Yuki M. Asano. tl;dr: gradients from augmentation-supervised objectives computed at test time, concatenated with features, improve your descriptor. arxiv.org/abs/2407.10964

I am excited to share our recent work with Władek, Vivek Myers, Tadeusz Dziarmaga, Tomasz Arczewski, Łukasz Kuciński, and Ben Eysenbach! Accelerating Goal-Conditioned Reinforcement Learning Algorithms and Research. Webpage: michalbortkiewicz.github.io/JaxGCRL/

Poster #72 at #ECCV2024 is already up and running with Monika Wysoczańska, Michaël Ramamonjisoa and Andrei Bursuc. Come by if you want to talk about open-vocabulary semantic segmentation at low cost!

Thank you all for stopping by our poster. It was really fun! Check out our CLIP-DINOiser code and paper here: wysoczanska.github.io/CLIP_DINOiser/ #eccv2024. This was such a fun collaboration with Monika Wysoczańska, Oriane Siméoni, Michaël Ramamonjisoa, Tomasz Trzcinski and Patrick Pérez.

On my way back to the office, time to recap. What's the most interesting idea you found at #ECCV2024? For me it was CLIP-DINOiser (ecva.net/papers/eccv_20…) by Monika Wysoczańska, Oriane Siméoni, Andrei Bursuc and colleagues. Cheap training, standing on previous networks, nice results!

🍏New preprint alert! PoM: Efficient Image and Video Generation with the Polynomial Mixer arxiv.org/abs/2411.12663 This is my latest "summer project", but it was so big I had to call in reinforcements (tx Nicolas DUFOUR) TL;DR Transformers are for boomers, welcome to the future 🧵👇

🏴 ENGLISH: Eat, eats, ate, eaten, eating 🇵🇱 POLISH: Jeść, zjeść, jadać, zjadać, jem, zjem, jadam, zjadam, jesz, zjesz, jadasz, zjadasz, je, zje, jada, zjada, jemy, zjemy, jadamy, zjadamy, jecie, zjecie, jadacie, zjadacie, jedzą, zjedzą, jadają, zjadają, jadłem, jadłaś, zjadłem,

🚨Calling Sponsors!🚨 🤝Support the Women in Computer Vision Workshop at #CVPR2025 in Nashville!🎉 Help us empower women in #ComputerVision through mentorship, travel awards, and networking🌟 Interested? 📩Contact us: [email protected] #Womeninscience #DiversityInTech

valeo.ai (@valeoai) on Twitter:

[1/8] 📢We introduce the ScaLR models (code+checkpoints) for LiDAR perception distilled from vision foundation models

tl;dr: don't neglect the choice of teacher, student, and pretraining datasets -> their impact is probably more important than the distillation method #CVPR2024

🧵

![valeo.ai (@valeoai) on Twitter photo](https://pbs.twimg.com/media/GPdi2jhWsAAGUa1.png)