Manish Nagireddy

@mnagired

Trustworthy AI Researcher @IBMResearch and @MITIBMLab

ID: 1418610449206087680

https://research.ibm.com/people/manish-nagireddy

23-07-2021 16:34:44

32 Tweets

117 Followers

450 Following

Kudos to the IBM Research team on the release of the AI Fairness 360 toolkit — an open-source library to help detect and remove bias in machine learning models: github.com/IBM/AIF360 #AI #IBM

Last month, CITP’s Public Interest Technology Summer Fellowship participants met at Princeton University to share their experiences working at government agencies like the FTC, the @CFPB, and the office of the Texas Attorney General. bit.ly/3pTPJYu

Here's a video of me describing AI Fairness 360, one of the winners of the Falling Walls Science and Innovation Management global call. falling-walls.com/discover/video…

Presenting a poster at ACM EAAMO (@EAAMO_ORG) this afternoon! Come chat about bias(stress)-testing fairness interventions and counterfactual bias injection if you’re around. Joint w/ Manish Nagireddy, Logan Stapleton, Hao-Fei Cheng, Haiyi Zhu, Steven Wu, and Hoda Heidari.

Looking forward to giving my second lifetime talk at Carnegie Mellon on Monday at noon. My first time was in Kigali at Carnegie Mellon University Africa in September 2019. I'll be presenting a perspective on AI governance. cs.cmu.edu/calendar/16309…

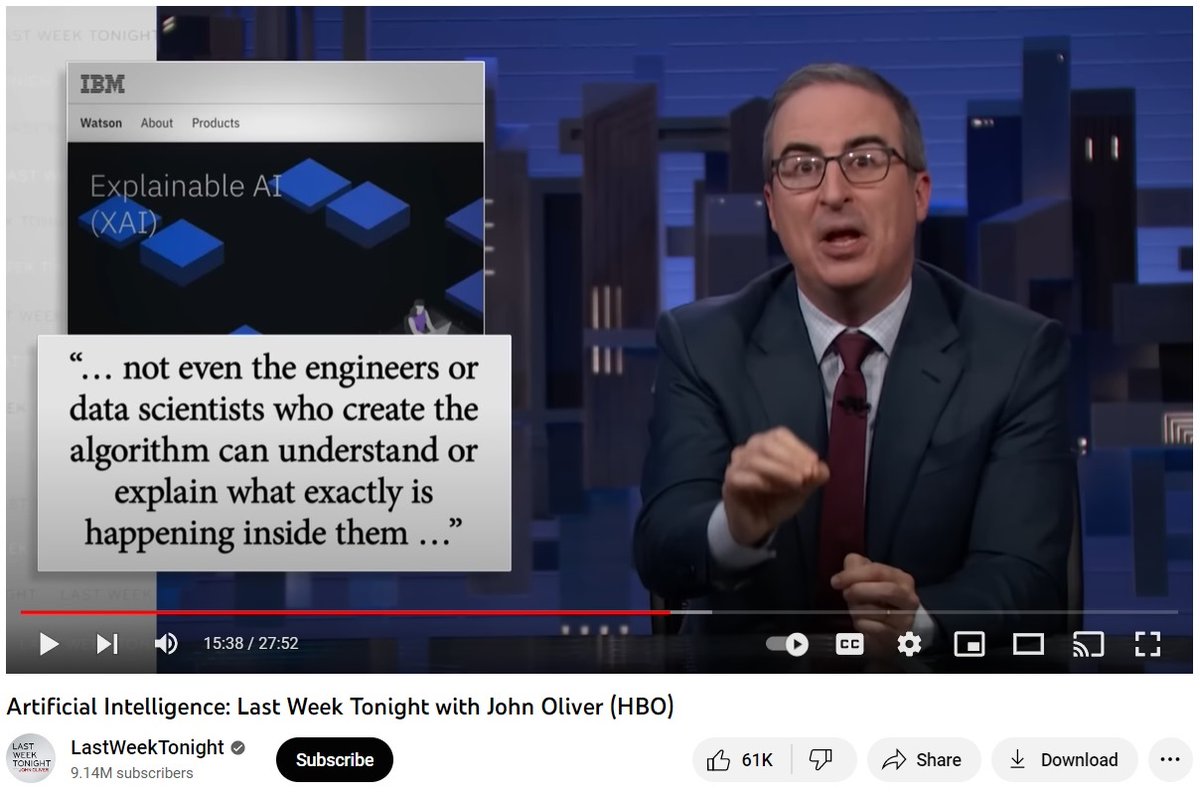

Did you notice our work on AI Explainability 360 and Cloud Pak for Data (IBM Research; IBM Data, AI & Automation) during John Oliver's excellent segment on artificial intelligence? Website: ibm.com/watson/explain… Open-source toolkit: aix360.mybluemix.net

Attending ACM FAccT? Interested in AI policy? Join our CRAFT session, "Language Models and Society: Bridging Research and Policy," Monday at 11am. Hear from Alex Engler, Bobi Rakova, @gretchenmarina, and Irene Solaiman. Organizers: yours truly, Stefania Druga, rishi@NeurIPS, and Mickey Vorvoreanu (Dr. V) 🧵