Luca Moschella

@moschella_luca

Machine Learning Researcher @ Apple | Previously NVIDIA, NNAISENSE & ELLIS PhD

ID: 3467186115

https://luca.moschella.dev 28-08-2015 09:57:24

209 Tweets

679 Followers

638 Following

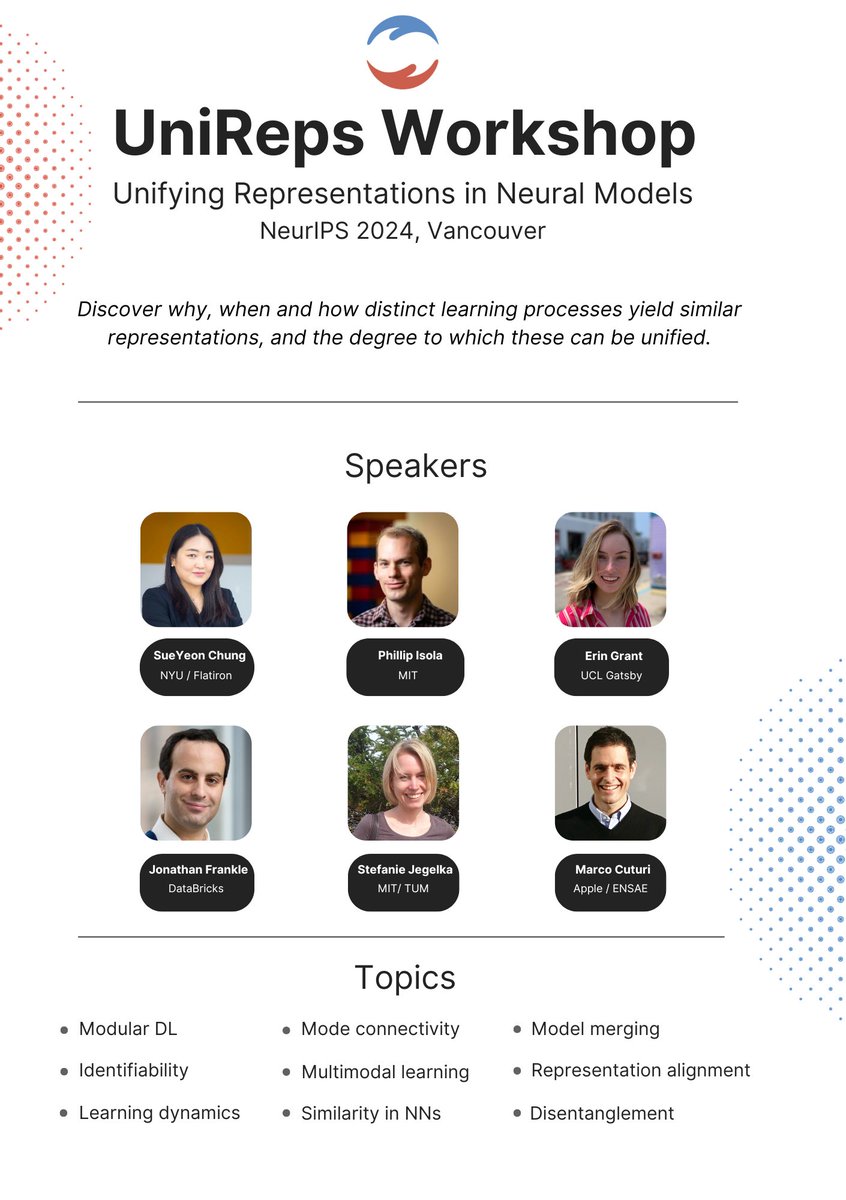

📢 We're thrilled to announce that the UniReps workshop will return to NeurIPS Conference 24 for its second edition! Stay tuned for the speaker lineup, program, call for papers, and more!🔵🔴 Want to learn more? Join our community at: discord.com/invite/NZg2QDX… See you in Vancouver!🇨🇦

Can LLMs play the game Baba Is You?🧩 In our new ICML Conference workshop paper, we show GPT-4o and Gemini-1.5-Pro fail dramatically in environments where both objects and rules must be manipulated! Here is an example of correct gameplay: (1/n)

📢We're excited to announce that UniReps: the Workshop on Unifying Representations in Neural Models will come back to NeurIPS Conference for its 2nd edition!🔵🔴 SUBMISSION DEADLINE: 20 September Check out our Call for Papers, speakers lineup and schedule at: unireps.org/2024/

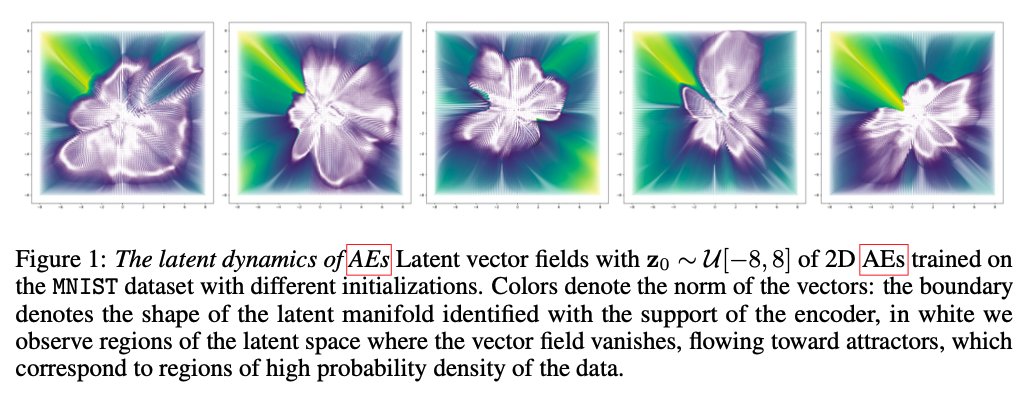

🔵🔴When do distinct learning processes learn similar representations? Detecting patterns and conditions for this to happen is an open direction: a thread🧵 Working on this topic? Submit at: openreview.net/group?id=NeurI… DEADLINE: 20 Sept See you at NeurIPS Conference! 🔵🔴 [1/N]


📢📢Deadline extension for Proceedings and Extended Abstract tracks of the UniReps Workshop NeurIPS Conference 2024🔵🔴 NEW DEADLINE: 23 September AoE ⌛️ Submit your paper at: openreview.net/group?id=NeurI… Join our community at: discord.gg/ET5NVCWwnA 👈

(1/6) I am thrilled to share my latest research from my visit to Helmholtz Munich | @HelmholtzMunich in the #AIDOS lab led by Bastian Grossenbacher-Rieck! 🚀 We introduced Redundant Blocks Approximation (RBA)—a straightforward method to reduce model size & complexity while maintaining good performance📈🤖

📢 Exciting news! The UniReps Blogpost Track is officially open for submissions! 🔵🔴 Submit your blog post all-year round at unireps.org/blog 👈 Want to present at the workshop poster session at NeurIPS Conference 24? Submit by **Nov 25 AoE** 📅 See you in Vancouver!🇨🇦

🎤 Excited to introduce our incredible panelists for the Panel on Unifying Representations in Neural Models 🔵🔴 📌 Saturday 14th Dec 11:45 am, West Exhibition Hall C 🌍🤖 ✨Erin Grant: Senior Research Fellow, UCL Gatsby Unit & Sainsbury Wellcome Centre. Her

Excited to present our paper “Unifying Causal Representation Learning with the Invariance Principle” at #ICLR2025 in Singapore! Joint work with Dario Rancati, Riccardo Cadei, Marco Fumero, and Francesco Locatello.