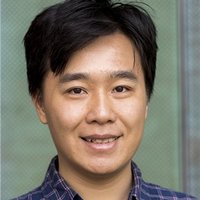

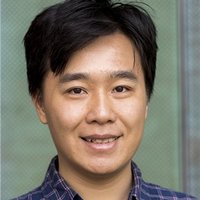

Nan Jiang

@nanjiang_cs

machine learning researcher, with focus on reinforcement learning. assoc prof @ uiuc cs. Course on RL theory (w/ videos): nanjiang.cs.illinois.edu/cs542

ID: 925800751628279808

http://nanjiang.cs.illinois.edu/

01-11-2017 19:04:34

2.2K Tweets

8.8K Followers

72 Following

Peyman Milanfar, NSF is the only funding agency that evaluates projects based solely on merit-based reviews. Your experience is a valid data point, but for most junior faculty who start off without established industry/defense agency connections, NSF is about their only bet to get started.

In the interim, I wanted to advertise our YouTube channel - youtube.com/@montecarlosem… - which contains recordings for the bulk of our talks so far (sites.google.com/view/monte-car…, sites.google.com/view/monte-car…). I encourage you to catch up and enjoy them over the intervening months!

E66: Satinder Singh: The Origin Story of RLDM @ RLDM 2025

Professor Satinder Singh of Google DeepMind and University of Michigan is co-founder of RLDM. Here he narrates the origin story of the Reinforcement Learning and Decision Making meeting (not conference).

HUGE congrats to Wanqiao Xu -- this paper just got the best theory paper award at ICML 2025 EXAIT (Exploration in AI) -- proposing a new provably efficient exploration algorithm 🛣️ with the right level of abstraction to leverage the strengths of LLMs 💭.